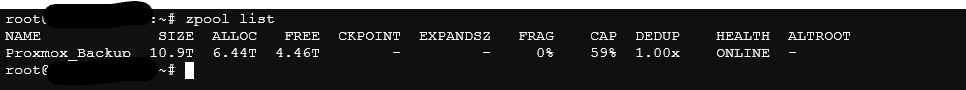

Before the reboot, an I/O failure was reported. I attempted to fix the pool with `zpool clear <pool>`, but the system hung, so I rebooted after about 30 minutes.

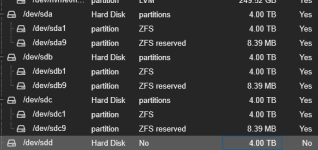

I've attached the relevant logs I could find from the point where I noticed the I/O failure. Only one drive (sdd) is showing errors.

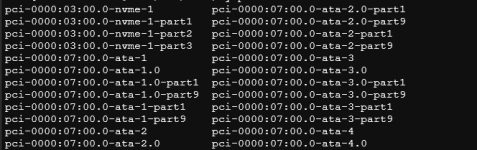

All drives are still present, as shown in the `lsblk` screenshot. I believe the pool was built as RAID 5 (RAIDZ1 in ZFS terms), since all three disks were in use and the total usable storage was the capacity of two.
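
To spell out the reasoning behind that guess, here is a rough sketch of the capacity arithmetic. It assumes three equal-size disks and ignores filesystem overhead; `usable_disks` is a hypothetical helper written for illustration, not a ZFS command.

```shell
#!/bin/sh
# Hypothetical helper: number of disks' worth of usable space in a
# parity layout, i.e. total disks minus disks' worth of parity.
# Assumes equal-size disks and ignores metadata overhead.
usable_disks() {
    disks=$1
    parity=$2
    echo $((disks - parity))
}

usable_disks 3 1   # single parity (RAID 5 / RAIDZ1 style)
usable_disks 3 0   # plain stripe, no redundancy
```

Only the single-parity case gives two disks' worth of space from three disks, which matches what I saw.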

Is there any way for me to revive the zpool?
