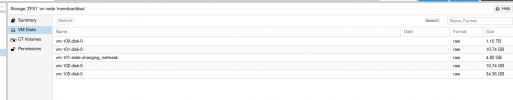

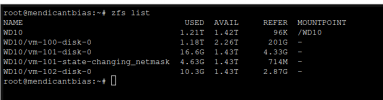

Long story short: the drive hosting Proxmox died, so I reinstalled Proxmox on a new drive and have it back up and running. The next step is to remount the ZFS pool that held my 3 VMs. That pool was on two Western Digital drives that are still healthy. I've done that, but I'm kind of stuck on what to do next.

Do I need more mountpoints for the other VMs? I'm new to ZFS, so I'm trying to figure this out. I'm pretty sure all my data is still safe; I'm just trying to figure out how to get Proxmox to see the VMs again.
