10.0.0.1 - VPS outside LAN

10.0.0.2 - VM inside proxmox with wireguard (within LAN)

192.168.1.112 - NAS without wireguard (within LAN)

Video: https://reddit.com/link/wp26ec/video/85rm42pn7wh91/player

10.0.0.1 <--> 192.168.1.112 bandwidth is throttled, as shown in my video (traffic is routed through 10.0.0.2)

10.0.0.1 <--> 10.0.0.2 bandwidth is OK (LAN to VPS, wireguard to wireguard)

10.0.0.2 <--> 192.168.1.112 bandwidth is OK (both within LAN)

I tried setting up a new VM outside Proxmox (VirtualBox on a Windows machine), installed WireGuard on it, and used that as the tunnel endpoint instead. With that setup the bandwidth is OK. The problem only appears when the WireGuard VM runs inside Proxmox.

What could be causing the bandwidth to be throttled?

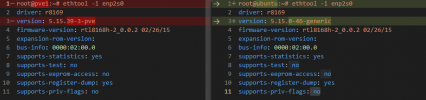

Code:

root@pve1:~# pveversion -v
proxmox-ve: 7.2-1 (running kernel: 5.15.39-3-pve)
pve-manager: 7.2-7 (running version: 7.2-7/d0dd0e85)
pve-kernel-5.15: 7.2-8
pve-kernel-helper: 7.2-8
pve-kernel-5.15.39-3-pve: 5.15.39-3
pve-kernel-5.15.30-2-pve: 5.15.30-3
ceph-fuse: 15.2.16-pve1
corosync: 3.1.5-pve2
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown2: 3.1.0-1+pmx3
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.24-pve1
libproxmox-acme-perl: 1.4.2
libproxmox-backup-qemu0: 1.3.1-1
libpve-access-control: 7.2-4
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.2-2
libpve-guest-common-perl: 4.1-2
libpve-http-server-perl: 4.1-3
libpve-storage-perl: 7.2-7
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 5.0.0-3
lxcfs: 4.0.12-pve1
novnc-pve: 1.3.0-3
proxmox-backup-client: 2.2.5-1
proxmox-backup-file-restore: 2.2.5-1
proxmox-mini-journalreader: 1.3-1
proxmox-widget-toolkit: 3.5.1
pve-cluster: 7.2-2
pve-container: 4.2-2
pve-docs: 7.2-2
pve-edk2-firmware: 3.20210831-2
pve-firewall: 4.2-5
pve-firmware: 3.5-1
pve-ha-manager: 3.4.0
pve-i18n: 2.7-2
pve-qemu-kvm: 6.2.0-11
pve-xtermjs: 4.16.0-1
qemu-server: 7.2-3
smartmontools: 7.2-pve3
spiceterm: 3.2-2
swtpm: 0.7.1~bpo11+1
vncterm: 1.7-1
zfsutils-linux: 2.1.5-pve1