We see similar errors in our environment, and the behaviour occurs on two different servers.

Both servers have two ZFS pools built from striped mirror vdevs:


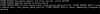

    root@prodnode1:~# zpool list -v
    NAME             SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ  FRAG    CAP  DEDUP  HEALTH  ALTROOT
    pool_spinning   3.62T  1.04T  2.59T        -         -   12%    28%  1.00x  ONLINE  -
      mirror         928G   220G   708G        -         -   11%  23.7%      -  ONLINE
        sdm             -      -      -        -         -     -      -      -  ONLINE
        sdn             -      -      -        -         -     -      -      -  ONLINE
      mirror         928G   266G   662G        -         -   13%  28.7%      -  ONLINE
        sdo             -      -      -        -         -     -      -      -  ONLINE
        sdp             -      -      -        -         -     -      -      -  ONLINE
      mirror         928G   290G   638G        -         -   13%  31.2%      -  ONLINE
        sdq             -      -      -        -         -     -      -      -  ONLINE
        sdr             -      -      -        -         -     -      -      -  ONLINE
      mirror         928G   284G   644G        -         -   14%  30.6%      -  ONLINE
        sds             -      -      -        -         -     -      -      -  ONLINE
        sdt             -      -      -        -         -     -      -      -  ONLINE
    pool_ssd        2.60T  1.67T   955G        -         -   30%    64%  1.00x  ONLINE  -
      mirror         444G   285G   159G        -         -   29%  64.2%      -  ONLINE
        sda             -      -      -        -         -     -      -      -  ONLINE
        sdb             -      -      -        -         -     -      -      -  ONLINE
      mirror         444G   285G   159G        -         -   30%  64.2%      -  ONLINE
        sdc             -      -      -        -         -     -      -      -  ONLINE
        sdd             -      -      -        -         -     -      -      -  ONLINE
      mirror         444G   285G   159G        -         -   30%  64.1%      -  ONLINE
        sde             -      -      -        -         -     -      -      -  ONLINE
        sdf             -      -      -        -         -     -      -      -  ONLINE
      mirror         444G   285G   159G        -         -   31%  64.1%      -  ONLINE
        sdg             -      -      -        -         -     -      -      -  ONLINE
        sdh             -      -      -        -         -     -      -      -  ONLINE
      mirror         444G   285G   159G        -         -   31%  64.2%      -  ONLINE
        sdi             -      -      -        -         -     -      -      -  ONLINE
        sdj             -      -      -        -         -     -      -      -  ONLINE
      mirror         444G   285G   159G        -         -   32%  64.2%      -  ONLINE
        sdk             -      -      -        -         -     -      -      -  ONLINE
        sdl             -      -      -        -         -     -      -      -  ONLINE

It seems that the pool has to be under higher-than-average I/O load for the error to occur.

When the error occurs, the VMs switch their filesystems to read-only (see attached picture 1).

We even had VMs whose filesystems were so riddled with errors that restoring from backup was the only option.

Furthermore, we had VMs whose partition table became unreadable; in those cases, restoring it with testdisk was possible.

The servers in question are both Supermicro machines: one is an SC216BE1C-R920LPB with an X10-DRi-T board and a 9361-8i RAID controller in JBOD mode, and the other is an SC216BE1C-R920LPB with an X11-DPi-NT board and a Broadcom SAS III HBA 9300-8i.

One might say that the 9361-8i is the problem, since it is a RAID controller running in JBOD mode, and if the error occurred only on that node, I would totally agree. But the error happens on both nodes, and the 9300-8i should be a perfectly viable HBA for ZFS.

Both servers have the backplane (BPN-SAS3-216EL1) in common. The drives used are:

- INTEL_SSDSC2KB240G8

- HGST_HTE721010A9E630