VirtIO Block still first choice for disk performance?

- Thread starter: aPollO

- Tags: ceph, performance, virtio


From the docs:

A SCSI controller of type VirtIO SCSI is the recommended setting if you aim for performance and is automatically selected for newly created Linux VMs since Proxmox VE 4.3. Linux distributions have support for this controller since 2012, and FreeBSD since 2014. For Windows OSes, you need to provide an extra iso containing the drivers during the installation. If you aim at maximum performance, you can select a SCSI controller of type VirtIO SCSI single which will allow you to select the IO Thread option. When selecting VirtIO SCSI single Qemu will create a new controller for each disk, instead of adding all disks to the same controller.

- Chapter 10, Virtual Machine Settings, Hard Disk

So no, we'd recommend using VirtIO SCSI single with IO Thread enabled.
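For anyone comparing the two setups, here is a minimal sketch of how they might look as lines in a VM config file. The keys `scsihw`, `scsi0`, `virtio0` and the `iothread=1` disk option are Proxmox VE config options; the storage name `local-lvm`, the volume name `vm-100-disk-0` and the helper `disk_config_lines` are hypothetical and only there for illustration.

```python
def disk_config_lines(bus, storage, volume, iothread=True):
    """Return VM config lines for one disk on the given bus.

    bus is "scsi" (VirtIO SCSI single controller) or "virtio" (VirtIO Block).
    storage and volume are placeholders; use the names from your own setup.
    """
    if bus not in ("scsi", "virtio"):
        raise ValueError(f"unsupported bus: {bus!r}")

    lines = []
    if bus == "scsi":
        # VirtIO SCSI single: one controller per disk, as described in the docs quote.
        lines.append("scsihw: virtio-scsi-single")

    options = f"{storage}:{volume}"
    if iothread:
        options += ",iothread=1"
    lines.append(f"{bus}0: {options}")
    return lines


if __name__ == "__main__":
    for bus in ("scsi", "virtio"):
        print("\n".join(disk_config_lines(bus, "local-lvm", "vm-100-disk-0")))
        print()
```

This only builds text. It does not touch any VM, so check the result against your own config before using it.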

Maybe somebody can show benchmarks (from 2023) to back up this claim? Just wondering.

We've done a pretty comprehensive analysis that may be of interest to you:

https://kb.blockbridge.com/technote/proxmox-aio-vs-iouring/

Blockbridge : Ultra low latency all-NVME shared storage for Proxmox - https://www.blockbridge.com/proxmox

I see. But you didn't test against "VirtIO Block" with IOThread?

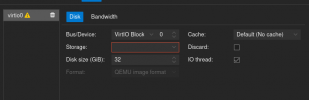

Your article mentions "You must use the virtio-scsi-single SCSI controller to enable IOThreads.", but I can also enable IOThreads with VirtIO Block now.


I believe you will get a non-fatal error on VM start.

No? Everything goes fine when selecting VirtIO Block, which doesn't need to emulate SCSI as far as I know. I didn't get any errors or warnings in the Proxmox web GUI.

Some start-up output of the VM (also no errors in the logging):

Mar 26 23:19:15 server pvedaemon[29063]: <root@pam> starting task UPID:server:00007FC5:00145C60:6420B6D3:qmstart:100:root@pam:

Mar 26 23:19:15 server pvedaemon[32709]: start VM 100: UPID:server:00007FC5:00145C60:6420B6D3:qmstart:100:root@pam:

Mar 26 23:19:15 server systemd[1]: Started 100.scope.

Mar 26 23:19:15 server systemd-udevd[32726]: Using default interface naming scheme 'v247'.

Mar 26 23:19:15 server systemd-udevd[32726]: ethtool: autonegotiation is unset or enabled, the speed and duplex are not writable.

Mar 26 23:19:16 server kernel: [13344.263841] device tap100i0 entered promiscuous mode

Mar 26 23:19:16 server systemd-udevd[32726]: ethtool: autonegotiation is unset or enabled, the speed and duplex are not writable.

Mar 26 23:19:16 server systemd-udevd[32726]: ethtool: autonegotiation is unset or enabled, the speed and duplex are not writable.

Mar 26 23:19:16 server systemd-udevd[32725]: ethtool: autonegotiation is unset or enabled, the speed and duplex are not writable.

Mar 26 23:19:16 server systemd-udevd[32725]: Using default interface naming scheme 'v247'.

Mar 26 23:19:16 server kernel: [13344.292164] vmbr0: port 2(fwpr100p0) entered blocking state

Mar 26 23:19:16 server kernel: [13344.292167] vmbr0: port 2(fwpr100p0) entered disabled state

Mar 26 23:19:16 server kernel: [13344.292227] device fwpr100p0 entered promiscuous mode

Mar 26 23:19:16 server kernel: [13344.292257] vmbr0: port 2(fwpr100p0) entered blocking state

Mar 26 23:19:16 server kernel: [13344.292258] vmbr0: port 2(fwpr100p0) entered forwarding state

Mar 26 23:19:16 server kernel: [13344.298504] fwbr100i0: port 1(fwln100i0) entered blocking state

Mar 26 23:19:16 server kernel: [13344.298506] fwbr100i0: port 1(fwln100i0) entered disabled state

Mar 26 23:19:16 server kernel: [13344.298541] device fwln100i0 entered promiscuous mode

Mar 26 23:19:16 server kernel: [13344.298573] fwbr100i0: port 1(fwln100i0) entered blocking state

Mar 26 23:19:16 server kernel: [13344.298574] fwbr100i0: port 1(fwln100i0) entered forwarding state

Mar 26 23:19:16 server kernel: [13344.304746] fwbr100i0: port 2(tap100i0) entered blocking state

Mar 26 23:19:16 server kernel: [13344.304748] fwbr100i0: port 2(tap100i0) entered disabled state

Mar 26 23:19:16 server kernel: [13344.304794] fwbr100i0: port 2(tap100i0) entered blocking state

Mar 26 23:19:16 server kernel: [13344.304795] fwbr100i0: port 2(tap100i0) entered forwarding state

Mar 26 23:19:16 server pvedaemon[29063]: <root@pam> end task UPID:server:00007FC5:00145C60:6420B6D3:qmstart:100:root@pam: OK

Mar 26 23:19:16 server pvedaemon[32824]: starting vnc proxy UPID:server:00008038:00145CE7:6420B6D4:vncproxy:100:root@pam:

Mar 26 23:19:16 server pvedaemon[29063]: <root@pam> starting task UPID:server:00008038:00145CE7:6420B6D4:vncproxy:100:root@pam:

And other snippets from dmesg and syslog from within the VM, which also look fine, with no errors or warnings:

[ 3.212844] systemd[1]: Started Journal Service.

[ 3.228211] Adding 8388604k swap on /swap.img. Priority:-2 extents:9 across:8962044k FS

[ 3.232563] alua: device handler registered

[ 3.233155] systemd-journald[500]: Received client request to flush runtime journal.

[ 3.234212] emc: device handler registered

[ 3.235263] rdac: device handler registered

[ 3.499451] loop0: detected capacity change from 0 to 18352

[ 3.500917] loop1: detected capacity change from 0 to 239128

[ 3.502863] loop2: detected capacity change from 0 to 129600

[ 3.503698] loop3: detected capacity change from 0 to 229272

[ 3.678779] kvm: Nested Virtualization enabled

[ 3.678782] SVM: kvm: Nested Paging enabled

[ 3.783897] loop4: detected capacity change from 0 to 102072

[ 4.322723] EXT4-fs (vda2): mounted filesystem with ordered data mode. Opts: (null). Quota mode: none.

...

Mar 26 21:27:56 ubuntu-server systemd-udevd[564]: vda: Process '/usr/bin/unshare -m /usr/bin/snap auto-import --mount=/dev/vda' failed with exit code 1.

Mar 26 21:27:56 ubuntu-server kernel: [ 0.001390] kvm-clock: cpu 14, msr c10201381, secondary cpu clock

Mar 26 21:27:56 ubuntu-server kernel: [ 0.334978] kvm-guest: setup async PF for cpu 14

Mar 26 21:27:56 ubuntu-server kernel: [ 0.334978] kvm-guest: stealtime: cpu 14, msr fffdb2080

Mar 26 21:27:56 ubuntu-server kernel: [ 0.334978] #15

Mar 26 21:27:56 ubuntu-server kernel: [ 0.001390] kvm-clock: cpu 15, msr c102013c1, secondary cpu clock

Mar 26 21:27:56 ubuntu-server kernel: [ 0.334978] kvm-guest: setup async PF for cpu 15

Mar 26 21:27:56 ubuntu-server systemd-udevd[555]: dm-0: Process '/usr/bin/unshare -m /usr/bin/snap auto-import --mount=/dev/dm-0' failed with exit code 1.

Mar 26 21:27:56 ubuntu-server kernel: [ 0.334978] kvm-guest: stealtime: cpu 15, msr fffdf2080

Mar 26 21:27:56 ubuntu-server kernel: [ 0.334978] smp: Brought up 1 node, 16 CPUs

Mar 26 21:27:56 ubuntu-server kernel: [ 0.334978] smpboot: Max logical packages: 1

Mar 26 21:27:56 ubuntu-server systemd-udevd[600]: vda3: Process '/usr/bin/unshare -m /usr/bin/snap auto-import --mount=/dev/vda3' failed with exit code 1.

Mar 26 21:27:56 ubuntu-server kernel: [ 0.334978] smpboot: Total of 16 processors activated (115200.51 BogoMIPS)

Mar 26 21:27:56 ubuntu-server kernel: [ 0.340917] devtmpfs: initialized

Mar 26 21:27:56 ubuntu-server kernel: [ 0.340917] x86/mm: Memory block size: 1024MB

Mar 26 21:27:56 ubuntu-server systemd-udevd[586]: vda1: Process '/usr/bin/unshare -m /usr/bin/snap auto-import --mount=/dev/vda1' failed with exit code 1.

Mar 26 21:27:56 ubuntu-server kernel: [ 0.340917] clocksource: jiffies: mask: 0xffffffff max_cycles: 0xffffffff, max_idle_ns: 7645041785100000 ns

Mar 26 21:27:56 ubuntu-server kernel: [ 0.340917] futex hash table entries: 4096 (order: 6, 262144 bytes, linear)

Mar 26 21:27:56 ubuntu-server systemd-udevd[589]: vda2: Process '/usr/bin/unshare -m /usr/bin/snap auto-import --mount=/dev/vda2' failed with exit code 1.

Mar 26 21:27:56 ubuntu-server systemd[1]: Found device /dev/disk/by-uuid/18f23523-7394-4f05-a729-82512019199e.

Mar 26 21:27:56 ubuntu-server systemd[1]: modprobe@chromeos_pstore.service: Deactivated successfully.

Mar 26 21:27:56 ubuntu-server systemd[1]: Finished Load Kernel Module chromeos_pstore.

Mar 26 21:27:56 ubuntu-server systemd[1]: Created slice Slice /system/lvm2-pvscan.

Mar 26 21:27:56 ubuntu-server systemd[1]: Starting LVM event activation on device 252:3...

Mar 26 21:27:56 ubuntu-server lvm[671]: pvscan[671] PV /dev/vda3 online, VG ubuntu-vg is complete.

Mar 26 21:27:56 ubuntu-server lvm[671]: pvscan[671] VG ubuntu-vg skip autoactivation.

Mar 26 21:27:56 ubuntu-server systemd[1]: Condition check resulted in Platform Persistent Storage Archival being skipped.

Mar 26 21:27:56 ubuntu-server systemd[1]: Finished Monitoring of LVM2 mirrors, snapshots etc. using dmeventd or progress polling.

Mar 26 21:27:56 ubuntu-server systemd[1]: Reached target Preparation for Local File Systems.

Mar 26 21:27:56 ubuntu-server systemd[1]: Mounting Mount unit for canonical-livepatch, revision 164...

Mar 26 21:27:56 ubuntu-server systemd[1]: Mounting Mount unit for core, revision 14946...

Mar 26 21:27:56 ubuntu-server systemd[1]: Mounting Mount unit for core20, revision 1822...

Mar 26 21:27:56 ubuntu-server systemd[1]: Mounting Mount unit for core20, revision 1852...

Mar 26 21:27:56 ubuntu-server systemd[1]: Mounting Mount unit for lxd, revision 24322...

Mar 26 21:27:56 ubuntu-server systemd[1]: Mounting Mount unit for snapd, revision 18357...

....
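To check logs like the ones above without reading every line, a rough sketch along these lines could help. The keyword list and the function name `suspicious_lines` are my own assumptions, not anything Proxmox or QEMU provides, and a keyword match only tells you where to look, not that something is actually wrong.

```python
import re

# Hypothetical keyword list; extend it to match whatever you are hunting for.
PATTERN = re.compile(r"\b(?:error|warn(?:ing)?|fail(?:ed|ure)?)\b", re.IGNORECASE)


def suspicious_lines(log_text):
    """Return the log lines that contain one of the keywords above."""
    return [line for line in log_text.splitlines() if PATTERN.search(line)]


if __name__ == "__main__":
    sample = "\n".join([
        "Mar 26 23:19:15 server systemd[1]: Started 100.scope.",
        "Mar 26 23:19:16 server kernel: [13344.263841] device tap100i0 entered promiscuous mode",
        "Mar 26 21:27:56 ubuntu-server systemd-udevd[564]: vda: Process "
        "'/usr/bin/unshare -m /usr/bin/snap auto-import --mount=/dev/vda' "
        "failed with exit code 1.",
    ])
    for line in suspicious_lines(sample):
        print(line)
```

Note that the snap auto-import lines in the guest syslog would be matched because of `failed`, so each hit still needs a human look.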

I would also like to point out the QEMU documentation: https://www.qemu.org/2021/01/19/virtio-blk-scsi-configuration/

Comparing virtio-blk and virtio-scsi

Key points

- Prefer virtio-blk in performance-critical use cases.

- Prefer virtio-scsi for attaching more than 28 disks or for full SCSI support.

- With virtio-scsi, use scsi-block for SCSI passthrough and otherwise use scsi-hd.
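The key points above can be written down as a small decision helper. This is only a sketch of the quoted rules; the function name `pick_disk_device` and its parameters are hypothetical, and the limit of 28 disks is taken directly from the quote.

```python
def pick_disk_device(disk_count, needs_full_scsi=False, needs_passthrough=False):
    """Return (controller, scsi_device) following the quoted key points.

    scsi_device is None when virtio-blk is chosen, since it only applies
    to disks attached through virtio-scsi.
    """
    if needs_passthrough:
        # SCSI passthrough: virtio-scsi with scsi-block.
        return "virtio-scsi", "scsi-block"
    if needs_full_scsi or disk_count > 28:
        # More than 28 disks or full SCSI support: virtio-scsi with scsi-hd.
        return "virtio-scsi", "scsi-hd"
    # Otherwise the quote prefers virtio-blk for performance-critical use.
    return "virtio-blk", None


if __name__ == "__main__":
    print(pick_disk_device(2))
    print(pick_disk_device(40))
    print(pick_disk_device(1, needs_passthrough=True))
```

It says nothing about Proxmox's own recommendation of VirtIO SCSI single with IO Thread; it only encodes the QEMU blog's summary.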

The virtio-blk device offers high performance thanks to a thin software stack and is therefore a good choice when performance is a priority.
