I have a pair of HPE DL360 Gen8 servers: dual Xeon, 64GB RAM, two 10k SAS HDDs for the system (ZFS RAID1) and four consumer SATA SSDs.

They're for internal use, and they show abysmal performance.

At first I had Ceph on those SSDs (with a third node); then I had to move everything to a NAS temporarily.

Now I have reinstalled one of the two DL360s, replaced the P420 RAID controller with an H240 HBA, and created a ZFS RAID10 with the 4 SSDs.
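To cross-check the FSYNCS/SECOND numbers below independently of pveperf, here is a rough Python sketch of a sync-write loop: it rewrites one small block and calls `fsync()` after every write, then reports round trips per second. This is my assumption of what that figure approximates, not pveperf's actual code, and the function name `fsyncs_per_second` is hypothetical.

```python
import os
import tempfile
import time


def fsyncs_per_second(directory: str, duration: float = 3.0, block: int = 4096) -> float:
    """Rough sync-write benchmark: rewrite one small block and fsync() it in a loop.

    Hypothetical helper; it only approximates the idea behind pveperf's
    FSYNCS/SECOND figure and is not pveperf's implementation.
    """
    payload = os.urandom(block)
    fd, path = tempfile.mkstemp(dir=directory)  # scratch file on the filesystem under test
    try:
        count = 0
        start = time.monotonic()
        while time.monotonic() - start < duration:
            os.pwrite(fd, payload, 0)  # overwrite the same block at offset 0
            os.fsync(fd)               # wait until the device reports the data as stable
            count += 1
        elapsed = time.monotonic() - start
    finally:
        os.close(fd)
        os.unlink(path)
    return count / elapsed
```

Usage would be something like `fsyncs_per_second("/ZFS1")`, assuming the pool is mounted at the ZFS default mountpoint for a pool named ZFS1 (an assumption; adjust to the real path).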

pveperf on the SSD RAID10:

zpool status

lsblk

pveperf on the root mirror (2x hdd 10k sas) is much better, but slow anyway, as pveperf man page says I should expect at least 200 FSYNC/SECOND

this is smartctl -all for one SSD

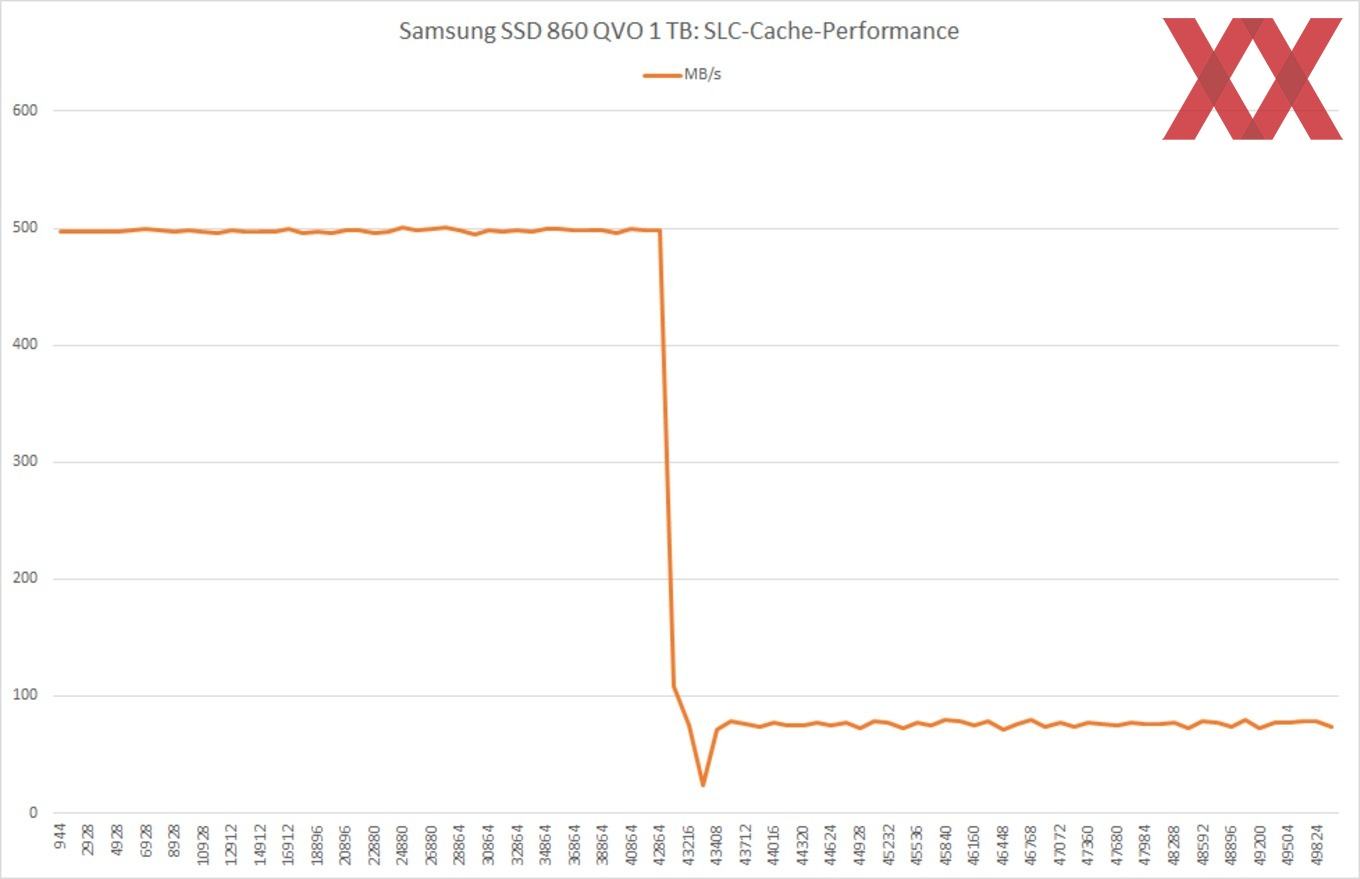

Is this really the performance I should expect with consumer SSD on server hardware?

dual Xeon, 64GB RAM, 2 hdd 10k sas for system (ZFS RAID1) and 4 consumer sata SSD

They're for internal use, and show absymal performances.

At first I had ceph on those SSD (with a third node), then I had to move everything to NAS temporarily.

Now I reinstalled one of the two DL360, replaced the RAID controller P420 with an HBA H240 and created a ZFS RAID10 with the 4 SSD.

pveperf on the SSD RAID10:

```
CPU BOGOMIPS:      115003.44
REGEX/SECOND:      1596032
HD SIZE:           86.68 GB (ZFS1)
FSYNCS/SECOND:     17.44
DNS EXT:           78.57 ms
DNS INT:           11.33 ms
```

zpool status:

```
  pool: ZFS1
 state: ONLINE
  scan: none requested
config:

        NAME                        STATE     READ WRITE CKSUM
        ZFS1                        ONLINE       0     0     0
          mirror-0                  ONLINE       0     0     0
            scsi-330014380432d76c9  ONLINE       0     0     0
            scsi-330014380432d76c8  ONLINE       0     0     0
          mirror-1                  ONLINE       0     0     0
            scsi-330014380432d76ca  ONLINE       0     0     0
            scsi-330014380432d76cb  ONLINE       0     0     0

errors: No known data errors

  pool: rpool
 state: ONLINE
  scan: none requested
config:

        NAME                              STATE     READ WRITE CKSUM
        rpool                             ONLINE       0     0     0
          mirror-0                        ONLINE       0     0     0
            scsi-35000c50096bdfc13-part3  ONLINE       0     0     0
            scsi-35000c50059636ee7-part3  ONLINE       0     0     0

errors: No known data errors
```

lsblk:

```
NAME      MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda         8:0    0 279.4G  0 disk
├─sda1      8:1    0  1007K  0 part
├─sda2      8:2    0   512M  0 part
└─sda3      8:3    0 278.9G  0 part
sdb         8:16   0 279.4G  0 disk
├─sdb1      8:17   0  1007K  0 part
├─sdb2      8:18   0   512M  0 part
└─sdb3      8:19   0 278.9G  0 part
sdc         8:32   0 931.5G  0 disk
├─sdc1      8:33   0 931.5G  0 part
└─sdc9      8:41   0     8M  0 part
sdd         8:48   0 931.5G  0 disk
├─sdd1      8:49   0 931.5G  0 part
└─sdd9      8:57   0     8M  0 part
sde         8:64   0 894.3G  0 disk
├─sde1      8:65   0 894.3G  0 part
└─sde9      8:73   0     8M  0 part
sdf         8:80   0 894.3G  0 disk
├─sdf1      8:81   0 894.3G  0 part
└─sdf9      8:89   0     8M  0 part
zd0       230:0    0    40G  0 disk
zd16      230:16   0    80G  0 disk
zd32      230:32   0  40.7G  0 disk
zd48      230:48   0    10G  0 disk
zd64      230:64   0    32G  0 disk
zd80      230:80   0    20G  0 disk
zd96      230:96   0    50G  0 disk
zd112     230:112  0    10G  0 disk
zd128     230:128  0    10G  0 disk
zd144     230:144  0    50G  0 disk
zd160     230:160  0    10G  0 disk
zd176     230:176  0    20G  0 disk
zd192     230:192  0    50G  0 disk
zd208     230:208  0    50G  0 disk
zd224     230:224  0    32G  0 disk
zd240     230:240  0    32G  0 disk
zd256     230:256  0   250G  0 disk
zd272     230:272  0   200G  0 disk
zd288     230:288  0   250G  0 disk
zd304     230:304  0   200G  0 disk
```

pveperf on the root mirror (2x 10k SAS HDDs) is much better, but still slow, since the pveperf man page says FSYNCS/SECOND should be above 200:

```
CPU BOGOMIPS:      115003.44
REGEX/SECOND:      1407690
HD SIZE:           219.30 GB (rpool/ROOT/pve-1)
FSYNCS/SECOND:     145.32
DNS EXT:           43.81 ms
DNS INT:           1.60 ms
```

This is `smartctl -a` for one SSD:

```
smartctl 7.2 2020-12-30 r5155 [x86_64-linux-5.4.143-1-pve] (local build)
Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===
Device Model:     Samsung SSD 860 QVO 1TB
Serial Number:    S4CZNF0N280968K
LU WWN Device Id: 5 002538 e90234834
Firmware Version: RVQ02B6Q
User Capacity:    1,000,204,886,016 bytes [1.00 TB]
Sector Size:      512 bytes logical/physical
Rotation Rate:    Solid State Device
Form Factor:      2.5 inches
TRIM Command:     Available, deterministic, zeroed
Device is:        Not in smartctl database [for details use: -P showall]
ATA Version is:   ACS-4 T13/BSR INCITS 529 revision 5
SATA Version is:  SATA 3.2, 6.0 Gb/s (current: 6.0 Gb/s)
Local Time is:    Wed Nov 3 22:04:16 2021 CET
SMART support is: Available - device has SMART capability.
SMART support is: Enabled

=== START OF READ SMART DATA SECTION ===
SMART Status not supported: Incomplete response, ATA output registers missing
SMART overall-health self-assessment test result: PASSED
Warning: This result is based on an Attribute check.

General SMART Values:
Offline data collection status:  (0x00) Offline data collection activity
                                        was never started.
                                        Auto Offline Data Collection: Disabled.
Self-test execution status:      (   0) The previous self-test routine completed
                                        without error or no self-test has ever
                                        been run.
Total time to complete Offline
data collection:                 (   0) seconds.
Offline data collection
capabilities:                    (0x53) SMART execute Offline immediate.
                                        Auto Offline data collection on/off support.
                                        Suspend Offline collection upon new
                                        command.
                                        No Offline surface scan supported.
                                        Self-test supported.
                                        No Conveyance Self-test supported.
                                        Selective Self-test supported.
SMART capabilities:            (0x0003) Saves SMART data before entering
                                        power-saving mode.
                                        Supports SMART auto save timer.
Error logging capability:        (0x01) Error logging supported.
                                        General Purpose Logging supported.
Short self-test routine
recommended polling time:        (   2) minutes.
Extended self-test routine
recommended polling time:        (  85) minutes.
SCT capabilities:              (0x003d) SCT Status supported.
                                        SCT Error Recovery Control supported.
                                        SCT Feature Control supported.
                                        SCT Data Table supported.

SMART Attributes Data Structure revision number: 1
Vendor Specific SMART Attributes with Thresholds:
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  5 Reallocated_Sector_Ct   0x0033   100   100   010    Pre-fail  Always       -       0
  9 Power_On_Hours          0x0032   098   098   000    Old_age   Always       -       9143
 12 Power_Cycle_Count       0x0032   099   099   000    Old_age   Always       -       28
177 Wear_Leveling_Count     0x0013   088   088   000    Pre-fail  Always       -       96
179 Used_Rsvd_Blk_Cnt_Tot   0x0013   100   100   010    Pre-fail  Always       -       0
181 Program_Fail_Cnt_Total  0x0032   100   100   010    Old_age   Always       -       0
182 Erase_Fail_Count_Total  0x0032   100   100   010    Old_age   Always       -       0
183 Runtime_Bad_Block       0x0013   100   100   010    Pre-fail  Always       -       0
187 Reported_Uncorrect      0x0032   100   100   000    Old_age   Always       -       0
190 Airflow_Temperature_Cel 0x0032   069   053   000    Old_age   Always       -       31
195 Hardware_ECC_Recovered  0x001a   200   200   000    Old_age   Always       -       0
199 UDMA_CRC_Error_Count    0x003e   100   100   000    Old_age   Always       -       0
235 Unknown_Attribute       0x0012   099   099   000    Old_age   Always       -       25
241 Total_LBAs_Written      0x0032   099   099   000    Old_age   Always       -       55058693454

SMART Error Log Version: 1
No Errors Logged

SMART Self-test log structure revision number 1
No self-tests have been logged.  [To run self-tests, use: smartctl -t]

SMART Selective self-test log data structure revision number 1
 SPAN  MIN_LBA  MAX_LBA  CURRENT_TEST_STATUS
    1        0        0  Not_testing
    2        0        0  Not_testing
    3        0        0  Not_testing
    4        0        0  Not_testing
    5        0        0  Not_testing
  256        0    65535  Read_scanning was never started
Selective self-test flags (0x0):
  After scanning selected spans, do NOT read-scan remainder of disk.
If Selective self-test is pending on power-up, resume after 0 minute delay.
```

Is this really the performance I should expect with consumer SSDs on server hardware?
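For reference, the raw Total_LBAs_Written value in the SMART output above can be turned into a byte count. This is a minimal sketch that assumes the drive counts this attribute in 512-byte units, matching the "Sector Size: 512 bytes logical/physical" line; that unit is an assumption, not something smartctl states for this attribute, and `lbas_to_bytes` is a hypothetical helper name.

```python
# Assumption: attribute 241 counts 512-byte logical sectors, matching the
# "Sector Size: 512 bytes logical/physical" line in the smartctl output.
LOGICAL_SECTOR_BYTES = 512


def lbas_to_bytes(total_lbas_written: int, sector_bytes: int = LOGICAL_SECTOR_BYTES) -> int:
    """Convert a raw Total_LBAs_Written value to bytes (hypothetical helper)."""
    return total_lbas_written * sector_bytes


written = lbas_to_bytes(55058693454)  # raw value of attribute 241 above
print(f"{written / 1e12:.2f} TB ({written / 2**40:.2f} TiB) written")
# prints: 28.19 TB (25.64 TiB) written
```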