Hi,

I just refreshed the Updates tab in Proxmox 7.2.7 and started the update.

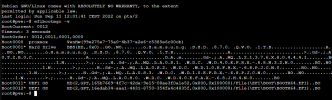

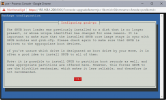

Halfway through the update I get the following messages:

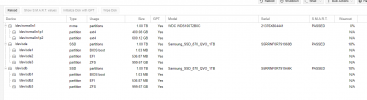

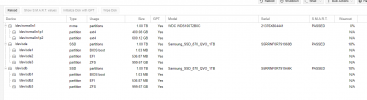

The weird part is that I have Proxmox running on a ZFS mirror of 2x 1 TB SSDs, as shown in the picture below.

sda and sdb together form the ZFS mirror. One of them is set as the boot device in the BIOS.

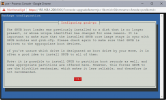

I am at a loss. I am worried that I will mess up the data on the ZFS mirror if I select both SSDs (which make up the ZFS rpool) as GRUB install targets.

What should I do next? How should I proceed?

When I do not select any disk, I get this response:

So I pressed No again, and I am now waiting at the disk selection screen.

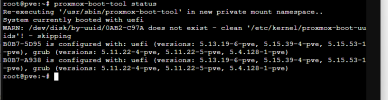

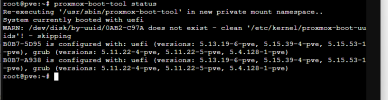

I can still open a shell. I ran `proxmox-boot-tool status`.

This is the result

I would appreciate your advice.
