EDIT:

A lot of rant...

PBS is marketed as an enterprise product, but it is limited to SSDs (the only "supported" setup), and setting up anything else (a remote NFS share, for example) isn't as straightforward as you would expect from an "enterprise" product. I come from Veeam, and I've used NFS over 10 GbE to back up my ~30 Linux VMs for the past 7 years without any issues. Now, one week after deploying PBS, I'm still struggling to get it to do what I want (read below).

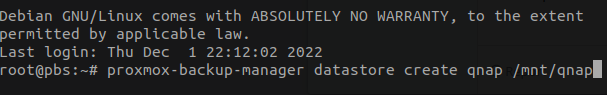

Not making it possible to mount NFS or CIFS locally on the PBS server and then use that as a datastore is like buying a car without wheels. Yes, the permissions are correct, it mounts, and I can back up, but it took me forever to get there, and it still has issues with just dying. PVE works without a hitch, but PBS? Nope!

Basically, there is a lot of room for improvement.