Hello!

@tuxis , thanks again for providing a free plan for this service. Getting it up and running was, admittedly, a bit trickier than I expected, but that was entirely because it's been long enough that I kind of forgot all the steps to configure a new Proxmox Backup Server instance.

The Tuxis web UI and necessary steps there were dead simple and really intuitive. The only thing I would have had to look up if I hadn't absorbed it though this thread is that the replication/pull-based backup service is a paid feature.

One thing I was curious about: when I'm ready to upgrade to a paid plan, is my existing PBS instance upgraded, or do I have to set up a new instance (and migrate my data)? Or do I end up with two instances?

Sort of in the same vein: I noticed that you also offer a hosting option for a dedicated PBS server. Which customers would you recommend that for? I feel like the shared-hosting-based PBS is a great fit for my home/home office set up.

Performance and Ping. There was discussion about this up-thread, some of which is a few years old at this point, so I wanted to provide some additional (non-scientifically collected) benchark data.

I'm in Dallas, Texas, US, and am seeing a pretty consistent 120-125 ms ping to my assigned PBS server in NL, which seems excellent for a server in Amsterdam (~4902 km / 3046 miles away, across an ocean). I have 1 Gbps symmetrical fiber, and can consistently hit full-speed (940 Mbps both ways) to a well-equipped speed test server.

ETA: Client-side encryption is enabled.

ETA 2: The Proxmox host is an HP EliteMini G9 600 with an i5-12700T and 96 GiB of RAM, running the latest version of Proxmox 8.2.

Here's the log of my first backup, in case anyone is curious. Right now, I have it set to just back up a Debian 12 LXC where my Unifi controller lives. The LXC is running, and I'd never backed up anything, so I felt like this was a worst-case for an LXC on my end.

Aside: PBS' default settings just doing compression so seamlessly and so well is just awesome.

~20 MB/s (160 Mbps) for free from Dallas to Amsterdam is pretty spectacular.

Caveat: That's an average speed, not an exact number. I'm sure it fluctuates.

@tuxis , one thing I'm curious about: it mentions reusing 1.158 GiB. I'm assuming that's because there's other datastores on the shared PBS instance that I can't see, and someone's running a similar enough Debian LXC that it could reuse some of it? If so, you might want to mention that somewhere in the docs for the free version, as an advantage. Deduping across user datastores looks like it maximizes that 150 GB.

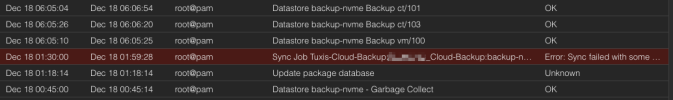

Garbage collection, pruning, and verifying: Do you have recommended settings for these? I've used my settings from my own PBS just to have something set up, but I realize that might not be ideal for a remote instance.

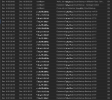

Here's the full output of the backup, for anyone curious what it looks like:

EDIT -- VM Backup Performance seems to be better than LXC Performance?

I decided to add a VM, as well. This is my MariaDB VM, so it's got a number of virtual SCSI disks (getting actual physical remote storage in my LAN for the VM data storage like this is on the list).

The backup for the VM seems to have gone much faster than for the LXC--I assume VM incremental backup/compression is just faster. I'm still pretty new at all this...

I have 1 Gbps upload, so this is approaching theoretical max for me. Though, I think part of why it looks so good is that 95 percent of the total backup for that VM is empty, since it's a sparse back up of thinly provisioned VirtIO SCSI drives.

@tuxis, thanks again for providing a free plan for this service. Getting it up and running was, admittedly, a bit trickier than I expected, but that was entirely because it's been long enough that I'd forgotten the steps to configure a new Proxmox Backup Server instance.

The Tuxis web UI and the necessary steps there were dead simple and really intuitive. The only thing I would have had to look up, if I hadn't absorbed it through this thread, is that the replication/pull-based backup service is a paid feature.

One thing I was curious about: when I'm ready to upgrade to a paid plan, is my existing PBS instance upgraded, or do I have to set up a new instance (and migrate my data)? Or do I end up with two instances?

Sort of in the same vein: I noticed that you also offer a hosting option for a dedicated PBS server. Which customers would you recommend that for? I feel like the shared-hosting-based PBS is a great fit for my home/home office setup.

Performance and Ping. There was discussion about this up-thread, some of which is a few years old at this point, so I wanted to provide some additional (non-scientifically collected) benchmark data.

I'm in Dallas, Texas, US, and am seeing a pretty consistent 120-125 ms ping to my assigned PBS server in NL, which seems excellent for a server in Amsterdam (~7,889 km / 4,902 miles away, across an ocean). I have 1 Gbps symmetrical fiber and can consistently hit full speed (940 Mbps both ways) to a well-equipped speed test server.
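
As a rough sanity check on that ping figure, here is a small sketch that estimates the great-circle distance and the lowest round-trip time physics would allow over fiber. The city coordinates, the Earth radius, and the fiber refractive index of about 1.47 are approximations I picked for illustration, not measured values, and real cable routes are longer than the great-circle path.

```python
import math

# Approximate city-center coordinates in degrees (assumed values).
DALLAS = (32.78, -96.80)
AMSTERDAM = (52.37, 4.90)

EARTH_RADIUS_KM = 6371.0        # mean Earth radius
C_VACUUM_KM_S = 299_792.458     # speed of light in vacuum
FIBER_INDEX = 1.47              # typical refractive index of optical fiber (assumption)


def great_circle_km(a, b):
    """Haversine distance between two (lat, lon) points in kilometers."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def min_rtt_ms(distance_km):
    """Lower bound on round-trip time over a straight fiber run."""
    speed_in_fiber = C_VACUUM_KM_S / FIBER_INDEX
    return 2 * distance_km / speed_in_fiber * 1000


distance = great_circle_km(DALLAS, AMSTERDAM)
floor = min_rtt_ms(distance)
print(f"great-circle distance: {distance:,.0f} km")
print(f"theoretical minimum RTT: {floor:.0f} ms")
```

With these inputs the floor lands a little under 80 ms, so a measured 120-125 ms leaves only a modest margin for routing detours and equipment along the way.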

ETA: Client-side encryption is enabled.

ETA 2: The Proxmox host is an HP EliteMini G9 600 with an i5-12700T and 96 GiB of RAM, running the latest version of Proxmox 8.2.

Here's the log of my first backup, in case anyone is curious. Right now, I have it set to back up just a Debian 12 LXC where my UniFi controller lives. The LXC is running, and I'd never backed up anything, so I felt like this was a worst case for an LXC on my end.

Code:

INFO: root.pxar: had to backup 2.535 GiB of 3.693 GiB (compressed 1.167 GiB) in 120.44 s (average 21.554 MiB/s)

INFO: root.pxar: backup was done incrementally, reused 1.158 GiB (31.4%)

INFO: Uploaded backup catalog (859.361 KiB)

INFO: Duration: 127.35s

INFO: End Time: Wed Dec 11 15:16:03 2024

Aside: PBS' default settings just doing compression so seamlessly and so well is just awesome.

~20 MB/s (160 Mbps) for free from Dallas to Amsterdam is pretty spectacular.

Caveat: That's an average speed, not an exact number. I'm sure it fluctuates.
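
For anyone who wants to redo the unit math, here is a minimal sketch that pulls the numbers out of the `had to backup` line and converts them. The regular expression is written only for this exact line (it assumes GiB units, which later runs may not use), and treating the compressed size as what actually crossed the network is my assumption; it ignores protocol and encryption overhead.

```python
import re

# Summary line copied from the backup log above.
LINE = ("INFO: root.pxar: had to backup 2.535 GiB of 3.693 GiB "
        "(compressed 1.167 GiB) in 120.44 s (average 21.554 MiB/s)")

# Pattern tailored to this one line; not a general PBS log parser.
PATTERN = re.compile(
    r"had to backup (?P<sent>[\d.]+) GiB of (?P<total>[\d.]+) GiB "
    r"\(compressed (?P<compressed>[\d.]+) GiB\) in (?P<secs>[\d.]+) s "
    r"\(average (?P<rate>[\d.]+) MiB/s\)"
)

MIB = 1024 ** 2  # bytes per MiB


def mib_s_to_mbps(rate_mib_s):
    """Convert MiB/s (binary) to megabits per second (decimal)."""
    return rate_mib_s * MIB * 8 / 1_000_000


m = PATTERN.search(LINE)
sent_gib = float(m["sent"])
compressed_gib = float(m["compressed"])
secs = float(m["secs"])

logical_rate = sent_gib * 1024 / secs        # MiB/s before compression
wire_rate = compressed_gib * 1024 / secs     # MiB/s after compression (rough)

print(f"logical: {logical_rate:.2f} MiB/s = {mib_s_to_mbps(logical_rate):.0f} Mbps")
print(f"on the wire (approx.): {wire_rate:.2f} MiB/s = {mib_s_to_mbps(wire_rate):.0f} Mbps")
```

The logged average is computed on the uncompressed data, so the rate on the wire is likely lower than the headline number, while the amount of backup data handled per second is what the log reports.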

@tuxis, one thing I'm curious about: the log mentions reusing 1.158 GiB. I'm assuming that's because there are other datastores on the shared PBS instance that I can't see, and someone's running a similar enough Debian LXC that it could reuse some of it? If so, you might want to mention that somewhere in the docs for the free version, as an advantage. Deduping across user datastores looks like it makes the most of that 150 GB.

Garbage collection, pruning, and verifying: Do you have recommended settings for these? I've used my settings from my own PBS just to have something set up, but I realize that might not be ideal for a remote instance.

Here's the full output of the backup, for anyone curious what it looks like:

Code:

INFO: starting new backup job: vzdump 99901 --all 0 --node andromeda2 --storage Tuxis150GB --fleecing 0 --notes-template '{{guestname}}' --mode snapshot

INFO: Starting Backup of VM 99901 (lxc)

INFO: Backup started at 2024-12-11 15:13:51

INFO: status = running

INFO: CT Name: subspace

INFO: including mount point rootfs ('/') in backup

INFO: backup mode: snapshot

INFO: ionice priority: 7

INFO: create storage snapshot 'vzdump'

INFO: creating Proxmox Backup Server archive 'ct/99901/2024-12-11T21:13:51Z'

INFO: set max number of entries in memory for file-based backups to 1048576

INFO: run: lxc-usernsexec -m u:0:100000:65536 -m g:0:100000:65536 -- /usr/bin/proxmox-backup-client backup --crypt-mode=none pct.conf:/var/tmp/vzdumptmp2308863_99901/etc/vzdump/pct.conf root.pxar:/mnt/vzsnap0 --include-dev /mnt/vzsnap0/./ --skip-lost-and-found --exclude=/tmp/?* --exclude=/var/tmp/?* --exclude=/var/run/?*.pid --backup-type ct --backup-id 99901 --backup-time 1733951631 --entries-max 1048576 --repository DB2685@pbs!AndromedaClusterToken@pbs005.tuxis.nl:DB2685_AndromedaCluster150

INFO: Starting backup: ct/99901/2024-12-11T21:13:51Z

INFO: Client name: andromeda2

INFO: Starting backup protocol: Wed Dec 11 15:13:55 2024

INFO: No previous manifest available.

INFO: Upload config file '/var/tmp/vzdumptmp2308863_99901/etc/vzdump/pct.conf' to '$TARGET' as pct.conf.blob

INFO: Upload directory '/mnt/vzsnap0' to '$TARGET' as root.pxar.didx

INFO: root.pxar: had to backup 2.535 GiB of 3.693 GiB (compressed 1.167 GiB) in 120.44 s (average 21.554 MiB/s)

INFO: root.pxar: backup was done incrementally, reused 1.158 GiB (31.4%)

INFO: Uploaded backup catalog (859.361 KiB)

INFO: Duration: 127.35s

INFO: End Time: Wed Dec 11 15:16:03 2024

INFO: adding notes to backup

INFO: cleanup temporary 'vzdump' snapshot

INFO: Finished Backup of VM 99901 (00:02:13)

INFO: Backup finished at 2024-12-11 15:16:04

INFO: Backup job finished successfully

TASK OK

EDIT -- VM Backup Performance seems to be better than LXC Performance?

I decided to add a VM as well. This is my MariaDB VM, so it has a number of virtual SCSI disks (moving VM data storage like this onto actual physical remote storage on my LAN is on the list).

The backup for the VM seems to have gone much faster than for the LXC--I assume VM incremental backup/compression is just faster. I'm still pretty new at all this...

Code:

INFO: starting new backup job: vzdump 99901 99902 --notes-template '{{guestname}}' --storage Tuxis150GB --node andromeda2 --all 0 --fleecing 0 --mode snapshot

INFO: Starting Backup of VM 99901 (lxc)

INFO: Backup started at 2024-12-11 17:45:24

INFO: status = running

< . . . LXC backup not shown . . . >

INFO: Starting backup: ct/99901/2024-12-11T23:45:24Z

INFO: Client name: andromeda2

INFO: Starting backup protocol: Wed Dec 11 17:45:24 2024

INFO: Downloading previous manifest (Wed Dec 11 15:13:51 2024)

INFO: Upload config file '/var/tmp/vzdumptmp2392935_99901/etc/vzdump/pct.conf' to $TARGET as pct.conf.blob

INFO: Upload directory '/mnt/vzsnap0' to $TARGET as root.pxar.didx

INFO: root.pxar: had to backup 175.948 MiB of 3.694 GiB (compressed 35.632 MiB) in 8.45 s (average 20.834 MiB/s)

INFO: root.pxar: backup was done incrementally, reused 3.522 GiB (95.3%)

INFO: Uploaded backup catalog (859.361 KiB)

INFO: Duration: 10.85s

INFO: End Time: Wed Dec 11 17:45:35 2024

INFO: adding notes to backup

INFO: cleanup temporary 'vzdump' snapshot

INFO: Finished Backup of VM 99901 (00:00:13)

INFO: Backup finished at 2024-12-11 17:45:37

INFO: Starting Backup of VM 99902 (qemu)

INFO: Backup started at 2024-12-11 17:45:37

INFO: status = running

INFO: VM Name: memory-alpha2

INFO: include disk 'scsi0' 'vmStore64k:vm-99902-disk-1' 64G

INFO: include disk 'scsi1' 'vmStore16k:vm-99902-disk-0' 16G

INFO: include disk 'scsi2' 'vmStore64k:vm-99902-disk-2' 16G

INFO: include disk 'scsi3' 'vmStore64k:vm-99902-disk-3' 2G

INFO: include disk 'efidisk0' 'vmStore64k:vm-99902-disk-0' 1M

INFO: backup mode: snapshot

INFO: ionice priority: 7

INFO: snapshots found (not included into backup)

INFO: creating Proxmox Backup Server archive 'vm/99902/2024-12-11T23:45:37Z'

INFO: issuing guest-agent 'fs-freeze' command

INFO: issuing guest-agent 'fs-thaw' command

INFO: started backup task '716dd2c2-38cc-4c1e-82d2-e8d13b56611c'

INFO: resuming VM again

INFO: efidisk0: dirty-bitmap status: existing bitmap was invalid and has been cleared

INFO: scsi0: dirty-bitmap status: existing bitmap was invalid and has been cleared

INFO: scsi1: dirty-bitmap status: existing bitmap was invalid and has been cleared

INFO: scsi2: dirty-bitmap status: existing bitmap was invalid and has been cleared

INFO: scsi3: dirty-bitmap status: existing bitmap was invalid and has been cleared

INFO: 9% (8.9 GiB of 98.0 GiB) in 3s, read: 3.0 GiB/s, write: 80.0 MiB/s

INFO: 19% (19.4 GiB of 98.0 GiB) in 6s, read: 3.5 GiB/s, write: 24.0 MiB/s

INFO: 29% (29.2 GiB of 98.0 GiB) in 9s, read: 3.3 GiB/s, write: 36.0 MiB/s

INFO: 35% (34.4 GiB of 98.0 GiB) in 12s, read: 1.7 GiB/s, write: 30.7 MiB/s

INFO: 36% (35.3 GiB of 98.0 GiB) in 25s, read: 68.6 MiB/s, write: 54.5 MiB/s

INFO: 37% (36.7 GiB of 98.0 GiB) in 42s, read: 88.0 MiB/s, write: 36.5 MiB/s

INFO: 39% (38.6 GiB of 98.0 GiB) in 45s, read: 648.0 MiB/s, write: 61.3 MiB/s

INFO: 41% (40.4 GiB of 98.0 GiB) in 48s, read: 597.3 MiB/s, write: 42.7 MiB/s

INFO: 45% (44.9 GiB of 98.0 GiB) in 51s, read: 1.5 GiB/s, write: 54.7 MiB/s

INFO: 49% (48.9 GiB of 98.0 GiB) in 54s, read: 1.3 GiB/s, write: 14.7 MiB/s

INFO: 51% (50.9 GiB of 98.0 GiB) in 57s, read: 681.3 MiB/s, write: 124.0 MiB/s

INFO: 52% (51.1 GiB of 98.0 GiB) in 1m, read: 84.0 MiB/s, write: 60.0 MiB/s

INFO: 53% (52.8 GiB of 98.0 GiB) in 1m 5s, read: 338.4 MiB/s, write: 93.6 MiB/s

INFO: 56% (54.9 GiB of 98.0 GiB) in 1m 8s, read: 718.7 MiB/s, write: 42.7 MiB/s

INFO: 58% (56.9 GiB of 98.0 GiB) in 1m 11s, read: 674.7 MiB/s, write: 16.0 MiB/s

INFO: 66% (64.9 GiB of 98.0 GiB) in 1m 14s, read: 2.7 GiB/s, write: 85.3 MiB/s

INFO: 70% (69.5 GiB of 98.0 GiB) in 1m 17s, read: 1.5 GiB/s, write: 20.0 MiB/s

INFO: 80% (78.5 GiB of 98.0 GiB) in 1m 20s, read: 3.0 GiB/s, write: 58.7 MiB/s

INFO: 86% (84.9 GiB of 98.0 GiB) in 1m 23s, read: 2.1 GiB/s, write: 17.3 MiB/s

INFO: 93% (91.4 GiB of 98.0 GiB) in 1m 26s, read: 2.2 GiB/s, write: 45.3 MiB/s

INFO: 98% (96.1 GiB of 98.0 GiB) in 1m 29s, read: 1.6 GiB/s, write: 92.0 MiB/s

INFO: 100% (98.0 GiB of 98.0 GiB) in 1m 36s, read: 284.1 MiB/s, write: 50.9 MiB/s

INFO: backup is sparse: 93.19 GiB (95%) total zero data

INFO: backup was done incrementally, reused 93.25 GiB (95%)

INFO: transferred 98.00 GiB in 101 seconds (993.6 MiB/s)

INFO: adding notes to backup

INFO: Finished Backup of VM 99902 (00:01:45)

INFO: Backup finished at 2024-12-11 17:47:22

INFO: Backup job finished successfully

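TASK OK

I have 1 Gbps upload, so at first glance this looks like it's approaching the theoretical max for me. That 993.6 figure is in MiB/s, though, which works out to roughly 8.3 Gbps, so it can't be real network throughput. It looks so good because 95 percent of the total backup for that VM is empty, since it's a sparse backup of thinly provisioned VirtIO SCSI drives, and that data never had to be uploaded.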
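
A quick unit check helps put the `transferred 98.00 GiB in 101 seconds (993.6 MiB/s)` line in context. This sketch uses only the figures from the VM summary lines; treating everything not reported as reused as data that had to be uploaded is my assumption, and it is an upper bound because compression shrinks it further.

```python
# Figures copied from the VM backup summary lines above.
TOTAL_GIB = 98.00      # "transferred 98.00 GiB"
REUSED_GIB = 93.25     # "reused 93.25 GiB (95%)"
SECONDS = 101          # "in 101 seconds"
LINK_MBPS = 1000       # nominal 1 Gbps uplink

MBPS_PER_MIB_S = 1024 ** 2 * 8 / 1_000_000  # 1 MiB/s expressed in Mbps


def rate_mib_s(gib, seconds):
    """Average rate in MiB/s for `gib` GiB moved in `seconds` seconds."""
    return gib * 1024 / seconds


headline = rate_mib_s(TOTAL_GIB, SECONDS)
new_data = rate_mib_s(TOTAL_GIB - REUSED_GIB, SECONDS)

print(f"headline: {headline:.1f} MiB/s = {headline * MBPS_PER_MIB_S:.0f} Mbps")
print(f"new data: {new_data:.1f} MiB/s = {new_data * MBPS_PER_MIB_S:.0f} Mbps")
print(f"headline vs link: {headline * MBPS_PER_MIB_S / LINK_MBPS:.1f}x")
```

The headline rate is several times what a 1 Gbps link can carry, while the non-reused portion averages out to a rate in the same range as the per-interval `write:` values in the log.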
