I'm having issues mounting an NFS share on a PVE cluster.

The initial mount works, but after some time I get connection issues and repeated retries.

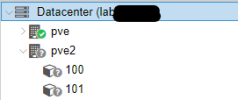

My PVE cluster has two nodes, both running the same version, with the IP addresses 172.16.10.2 and 172.16.10.6.

The TrueNAS server provides the NFS service on 172.16.20.3, and I access it by IP address, not by domain name.

I'm able to mount the same NFS share on other clients without any issue.
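To make the addressing explicit, here is a minimal Python sketch that checks two things from the addresses in this post: whether a node and the NAS share a subnet, and whether a node address is covered by an export's allowed-hosts list (the lists come from the `showmount -e` output further down). The /24 prefix for the node interfaces is my assumption, and the helper names `same_subnet` and `allowed_by_export` are made up for illustration.

```python
import ipaddress


def same_subnet(ip_a, ip_b, prefix=24):
    """Return True if ip_b lies in the network that contains ip_a.

    The default /24 prefix is an assumption, not taken from the node config.
    """
    net = ipaddress.ip_network(f"{ip_a}/{prefix}", strict=False)
    return ipaddress.ip_address(ip_b) in net


def allowed_by_export(client_ip, export_acl):
    """Return True if client_ip matches any entry of a comma-separated export list."""
    client = ipaddress.ip_address(client_ip)
    for entry in export_acl.split(","):
        # A bare host address such as 172.16.10.2 is treated as a /32 network.
        if client in ipaddress.ip_network(entry.strip(), strict=False):
            return True
    return False


if __name__ == "__main__":
    nas = "172.16.20.3"
    test_speed_acl = "172.16.20.0/24,172.16.10.0/24"
    for node in ("172.16.10.2", "172.16.10.6"):
        print(node, "same subnet as NAS:", same_subnet(node, nas))
        print(node, "allowed on test-speed:", allowed_by_export(node, test_speed_acl))
```

Under the /24 assumption both nodes sit in a different subnet from the NAS, so the NFS traffic would pass through a router or firewall on the way, while both nodes are still covered by the test-speed export list.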

PVE versions:

Code:

proxmox-ve: 7.3-1 (running kernel: 5.15.85-1-pve)

pve-manager: 7.3-6 (running version: 7.3-6/723bb6ec)

pve-kernel-helper: 7.3-5

pve-kernel-5.15: 7.3-2

pve-kernel-5.15.85-1-pve: 5.15.85-1

pve-kernel-5.15.74-1-pve: 5.15.74-1

ceph-fuse: 15.2.17-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.4

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.3-1

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.3-2

libpve-guest-common-perl: 4.2-3

libpve-http-server-perl: 4.1-5

libpve-storage-perl: 7.3-2

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.2-2

lxcfs: 5.0.3-pve1

novnc-pve: 1.4.0-1

proxmox-backup-client: 2.3.3-1

proxmox-backup-file-restore: 2.3.3-1

proxmox-mail-forward: 0.1.1-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.5.5

pve-cluster: 7.3-2

pve-container: 4.4-2

pve-docs: 7.3-1

pve-edk2-firmware: 3.20220526-1

pve-firewall: 4.2-7

pve-firmware: 3.6-3

pve-ha-manager: 3.5.1

pve-i18n: 2.8-3

pve-qemu-kvm: 7.2.0-5

pve-xtermjs: 4.16.0-1

qemu-server: 7.3-4

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+3

vncterm: 1.7-1

zfsutils-linux: 2.1.9-pve1

Code:

rpcinfo -p 172.16.20.3

program vers proto port service

100000 4 tcp 111 portmapper

100000 3 tcp 111 portmapper

100000 2 tcp 111 portmapper

100000 4 udp 111 portmapper

100000 3 udp 111 portmapper

100000 2 udp 111 portmapper

100024 1 udp 37857 status

100005 3 udp 60795 mountd

100024 1 tcp 36025 status

100005 3 tcp 55845 mountd

100003 3 tcp 2049 nfs

100003 4 tcp 2049 nfs

100227 3 tcp 2049

100003 3 udp 2049 nfs

100227 3 udp 2049

100021 1 udp 58758 nlockmgr

100021 3 udp 58758 nlockmgr

100021 4 udp 58758 nlockmgr

100021 1 tcp 35399 nlockmgr

100021 3 tcp 35399 nlockmgr

100021 4 tcp 35399 nlockmgr
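Since the problem only shows up after some time, it may help to probe the TCP ports listed above from a PVE node at intervals. The following is a minimal sketch using only the Python standard library; the function names `port_open` and `probe` are hypothetical, and it only tests whether a TCP connection can be opened, not whether NFS itself answers correctly.

```python
import socket


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False


def probe(host, ports, timeout=3.0):
    """Check several named ports and return a {service_name: reachable} dict."""
    return {name: port_open(host, port, timeout) for name, port in ports.items()}
```

For example, `probe("172.16.20.3", {"portmapper": 111, "nfs": 2049, "mountd": 55845, "nlockmgr": 35399})` uses the TCP ports from the `rpcinfo` output above. The mountd and nlockmgr ports look dynamically assigned, so they may differ after the NFS service restarts.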

Code:

showmount -e 172.16.20.3

Export list for 172.16.20.3:

/mnt/storagepoolssd/test-speed 172.16.20.0/24,172.16.10.0/24

/mnt/storagepool/securestorage 172.16.50.3/32,172.16.20.0/24

/mnt/storagepool/proxmox-storage 172.16.10.2,172.16.10.6

I mapped it via the GUI and via storage.cfg; a sample storage.cfg is below:

Code:

nfs: test-speed

path /mnt/pve/backup

server 172.16.20.3

export /mnt/storagepoolssd/test-speed

options vers=3,soft

content iso,vztmpl

I tried modifying the options parameter with NFS v4 and other attributes, but the outcome is always the same:

Code:

Mar 6 17:01:07 pve pvestatd[1181]: unable to activate storage 'backup' - directory '/mnt/pve/backup' does not exist or is unreachable

Mar 6 17:01:16 pve pvestatd[1181]: got timeout

Mar 6 17:01:16 pve pvestatd[1181]: unable to activate storage 'backup' - directory '/mnt/pve/backup' does not exist or is unreachable

Mar 6 17:01:25 pve pvestatd[1181]: got timeout

Mar 6 17:01:25 pve pvestatd[1181]: unable to activate storage 'backup' - directory '/mnt/pve/backup' does not exist or is unreachable

Mar 6 17:01:36 pve pvestatd[1181]: got timeout

Mar 6 17:01:36 pve pvestatd[1181]: unable to activate storage 'backup' - directory '/mnt/pve/backup' does not exist or is unreachable

Mar 6 17:01:45 pve pvestatd[1181]: got timeout

Mar 6 17:01:45 pve pvestatd[1181]: unable to activate storage 'backup' - directory '/mnt/pve/backup' does not exist or is unreachable

[...]

Mar 6 17:24:28 pve kernel: [ 4230.410991] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:24:33 pve kernel: [ 4235.530165] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:24:38 pve kernel: [ 4240.650104] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:24:44 pve kernel: [ 4245.770071] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:24:49 pve kernel: [ 4250.890089] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:24:54 pve kernel: [ 4256.010351] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:24:59 pve kernel: [ 4261.130289] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:26:03 pve kernel: [ 4324.879658] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:26:08 pve kernel: [ 4329.994727] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:26:13 pve kernel: [ 4335.114681] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:26:18 pve kernel: [ 4340.234822] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:26:23 pve kernel: [ 4345.354947] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:26:28 pve kernel: [ 4350.475137] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:26:33 pve kernel: [ 4355.595057] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:28:10 pve kernel: [ 4451.852338] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:28:15 pve kernel: [ 4456.971910] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:28:20 pve kernel: [ 4462.091911] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:28:25 pve kernel: [ 4467.211986] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:28:30 pve kernel: [ 4472.332195] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:28:35 pve kernel: [ 4477.451952] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

Mar 6 17:28:40 pve kernel: [ 4482.572038] NFS: state manager: check lease failed on NFSv4 server 172.16.20.3 with error 93

These logs are from /var/log/syslog. Can you please help me fix this issue?
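As far as I can tell, the number in the kernel messages is a Linux errno value, and 93 would then be EPROTONOSUPPORT ("Protocol not supported"). Below is a small Python sketch that extracts the server and error number from such a line and looks up the name. The table is hard-coded with a few generic Linux errno values so the result does not depend on the machine running the script; the function name `decode_lease_error` is hypothetical, and reading the number as an errno is my assumption.

```python
import re

# A few generic Linux errno values (asm-generic); deliberately not exhaustive.
LINUX_ERRNO = {
    5: "EIO",
    13: "EACCES",
    93: "EPROTONOSUPPORT",
    110: "ETIMEDOUT",
    111: "ECONNREFUSED",
    113: "EHOSTUNREACH",
}

LEASE_RE = re.compile(r"check lease failed on NFSv4 server (\S+) with error (\d+)")


def decode_lease_error(line):
    """Return (server, errno_number, errno_name) for a lease-failure line, else None."""
    match = LEASE_RE.search(line)
    if match is None:
        return None
    code = int(match.group(2))
    return match.group(1), code, LINUX_ERRNO.get(code, "unknown")


if __name__ == "__main__":
    sample = ("Mar 6 17:24:28 pve kernel: [ 4230.410991] NFS: state manager: "
              "check lease failed on NFSv4 server 172.16.20.3 with error 93")
    print(decode_lease_error(sample))
```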
