Hello! We are running into an issue with a tape backup job of about 9.5TB to our tape library. It fails shortly after starting with the following error:

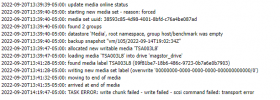

TASK ERROR: write chunk failed - write failed - scsi command failed: transport error

Other, smaller jobs (~400GB) have completed without issue so far. I checked /var/log/syslog and dmesg for a more descriptive error, with no luck.

Our server is a Dell R730XD with an LSI HBA that connects directly to our tape library via an SFF-8088 mini-SAS cable. Other operations, such as labeling or moving tapes, complete without errors. Only the backup of the 9.5TB datastore produces the error above. Could someone point us in the right direction for troubleshooting it?

Thank you in advance!