TL;DR: a PCI card comes up as if it simply belongs to the host system, despite clearly being set up for passthrough to a VM.

The situation:

this is a AMD build on a ASRock x570 mobo running PVE 7.1.

I followed the GRUB instructions from https://pve.proxmox.com/wiki/Pci_passthrough since the boot loader is clearly GRUB (v2.04-20)

I went through the manual and BIOS settings and can't find anything else related to virt to enable...

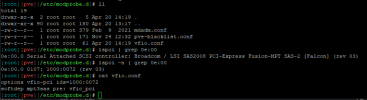

the grub file:

(I tried it with and without pt), and issued update-grub in between with restarts...

my /etc/modules

(also tried before adding these items since the wiki said it might already be in place with kernels later than 5.4)

per "It will not be possible to use PCI passthrough without interrupt remapping.":

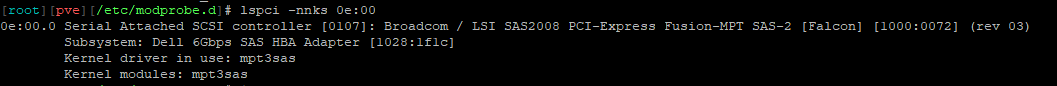

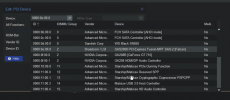

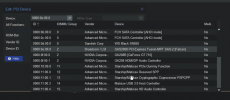

in proxmox:

aaaand I think I may have just rubber duck-ed myself here... I just noticed that the HBA im trying to passthru is in the same IOMMU group as the video card that I'm using in the host... I guess I'll go do some reading on how IOMMU groups work and maybe try moving the graphics card to another spot (the hba wont fit elsewhere)

I'll just leave this post here in the chance it ends up helping someone else...

The situation:

This is an AMD build on an ASRock X570 mobo running PVE 7.1.

I followed the GRUB instructions from https://pve.proxmox.com/wiki/Pci_passthrough since the boot loader is clearly GRUB (v2.04-20).

I went through the manual and BIOS settings and can't find anything else related to virt to enable...

the grub file:

Code:

GRUB_DEFAULT=0

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR=`lsb_release -i -s 2> /dev/null || echo Debian`

GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on iommu=pt"

GRUB_CMDLINE_LINUX=""

(I tried it with and without pt, and ran update-grub with a restart in between each time...)
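Editing the grub file only helps if the running kernel actually booted with those options. A minimal sketch of a check, assuming the booted command line is readable at /proc/cmdline (standard on Linux); `has_kernel_param` is a made-up helper name, and its optional second argument exists only so it can be pointed at a test file:

```shell
#!/bin/sh
# Return success if the given parameter appears as a whole word on the
# kernel command line. has_kernel_param is a hypothetical helper, not a
# Proxmox or GRUB tool.
has_kernel_param() {
    cmdline_file="${2:-/proc/cmdline}"
    cmdline=""
    # The command line is a single line of space-separated parameters.
    read -r cmdline < "$cmdline_file" || true
    case " $cmdline " in
        *" $1 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Check the two options from GRUB_CMDLINE_LINUX_DEFAULT above.
for p in amd_iommu=on iommu=pt; do
    if has_kernel_param "$p"; then
        echo "$p: present on the running kernel's command line"
    else
        echo "$p: missing from the running kernel's command line"
    fi
done
```

If an option shows up as missing after update-grub and a reboot, the host is probably not booting from the config that was edited.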

Code:

dmesg | grep IOMMU

[ 0.851519] pci 0000:00:00.2: AMD-Vi: IOMMU performance counters supported

[ 0.852470] pci 0000:00:00.2: AMD-Vi: Found IOMMU cap 0x40

[ 0.863068] perf/amd_iommu: Detected AMD IOMMU #0 (2 banks, 4 counters/bank).

my /etc/modules:

Code:

vfio

vfio_iommu_type1

vfio_pci

vfio_virqfd

(I also tried it before adding these entries, since the wiki said they might already be in place with kernels later than 5.4.)

per "It will not be possible to use PCI passthrough without interrupt remapping.":

Code:

dmesg | grep 'remapping'

[ 0.591109] x2apic: IRQ remapping doesn't support X2APIC mode

[ 0.852473] AMD-Vi: Interrupt remapping enabledin proxmox:

aaaand I think I may have just rubber-ducked myself here... I just noticed that the HBA I'm trying to pass through is in the same IOMMU group as the video card that I'm using on the host. I guess I'll go do some reading on how IOMMU groups work and maybe try moving the graphics card to another slot (the HBA won't fit elsewhere).
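For anyone hitting the same thing, here is a rough sketch of how to see which devices share an IOMMU group. It assumes the kernel exposes the groups under /sys/kernel/iommu_groups (the usual sysfs location when the IOMMU is active); `list_iommu_groups` is a made-up helper name, and the optional directory argument is only there so it can be run against a test tree:

```shell
#!/bin/sh
# Print one line per device: its IOMMU group number and PCI address.
# list_iommu_groups is a hypothetical helper, not a Proxmox tool.
list_iommu_groups() {
    root="${1:-/sys/kernel/iommu_groups}"
    for dev in "$root"/*/devices/*; do
        [ -e "$dev" ] || continue      # glob matched nothing: no groups exposed
        group="${dev#"$root"/}"        # strip the leading root directory
        group="${group%%/*}"           # keep only the group number
        printf 'group %s: %s\n' "$group" "${dev##*/}"
    done
}

# Sort numerically by group number so devices in the same group sit together.
list_iommu_groups "$@" | sort -k2,2n
```

The PCI addresses it prints can be matched against `lspci` output to tell which line is the HBA and which is the graphics card. If both land in the same group, that is the situation described above.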

I'll just leave this post here on the chance it ends up helping someone else...