Hi everyone!

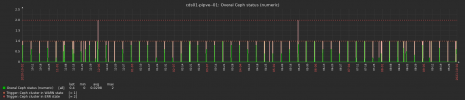


I'm having this strange issue with Ceph on my Proxmox cluster.

The *ceph* nodes have OSD, mon, MDS and mgr daemons on each of them. The *pve* nodes have only OSDs on them.

Recently, every Saturday at exactly 06:00 (± a couple of minutes), Ceph goes into a warning state.

The logs show that one of the three monitors goes down and comes back up (not exactly flapping), while the other mons constantly call for elections.

There are also slow ops, and the VMs start to freeze and lag.

Here is the link to Google Drive with zipped logs since the last boot on 10-Sep-2021 (covering three Saturdays).

After 6-7 hours the cluster recovers on its own and everything works as intended again; the VMs unfreeze.

While this is happening, ceph commands either do not work (they time out) or take a VERY long time to execute.
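Because `ceph` commands hang during the incident, a poller that enforces its own hard timeout can record exactly when the cluster stops and starts responding. A minimal sketch, assuming Python 3.7+ on a node with the `ceph` CLI installed; the helper name `run_with_timeout`, the `--poll` switch, the 20-second cap and the 60-second interval are illustrative choices, not anything defined by Ceph or Proxmox.

```python
import subprocess
import sys
import time
from datetime import datetime


def run_with_timeout(cmd, timeout_s):
    """Run cmd and return (status, elapsed_seconds, stdout).

    status is "ok" (exit code 0), "error" (non-zero exit) or "timeout"
    (subprocess.run killed the child after timeout_s seconds).
    """
    start = time.monotonic()
    try:
        proc = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout_s)
    except subprocess.TimeoutExpired:
        return "timeout", time.monotonic() - start, ""
    elapsed = time.monotonic() - start
    status = "ok" if proc.returncode == 0 else "error"
    return status, elapsed, proc.stdout


if __name__ == "__main__" and "--poll" in sys.argv:
    # Hypothetical polling loop: one `ceph health` call per minute,
    # each capped at 20 seconds, printed with a local timestamp.
    while True:
        status, elapsed, out = run_with_timeout(["ceph", "health"], 20)
        first_line = out.splitlines()[0] if out else ""
        print(f"{datetime.now():%Y-%m-%d %H:%M:%S} {status} {elapsed:.1f}s {first_line}", flush=True)
        time.sleep(60)
```

Left running across a Saturday morning (for example `python3 ceph_poll.py --poll >> poll.log`, where `ceph_poll.py` is a placeholder file name), the log would show when answers turn into timeouts and when they come back.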

The monitor logs are flooded with messages like this one:

`2021-09-11 06:03:22.162 7f8450475700 1 mon.hds01-pipcephn1@0(electing) e11 handle_auth_request failed to assign global_id`

Googling this has not helped me.
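To see how tightly the `failed to assign global_id` flood lines up with 06:00, the messages can be bucketed per minute. A minimal sketch, assuming each mon log line starts with the `YYYY-MM-DD HH:MM:SS.mmm` timestamp shown above; `count_per_minute` and `open_log` are hypothetical helpers, and the log paths are passed on the command line (by default Ceph writes mon logs under `/var/log/ceph/`, and rotated copies may be gzip-compressed).

```python
import gzip
import re
import sys
from collections import Counter

# Timestamp prefix as in "2021-09-11 06:03:22.162 7f8450475700 1 mon.hds01-pipcephn1@0(electing) ..."
# Group 1 = date, group 2 = HH:MM.
LINE_RE = re.compile(r"^(\d{4}-\d{2}-\d{2}) (\d{2}:\d{2}):\d{2}\.\d+ ")


def count_per_minute(lines, needle="failed to assign global_id"):
    """Count lines containing `needle`, keyed by "YYYY-MM-DD HH:MM"."""
    counts = Counter()
    for line in lines:
        if needle not in line:
            continue
        m = LINE_RE.match(line)
        if m:
            counts[f"{m.group(1)} {m.group(2)}"] += 1
    return counts


def open_log(path):
    """Open a plain or gzip-compressed (.gz) log file as text."""
    if path.endswith(".gz"):
        return gzip.open(path, "rt", errors="replace")
    return open(path, errors="replace")


if __name__ == "__main__" and len(sys.argv) > 1:
    total = Counter()
    for path in sys.argv[1:]:
        with open_log(path) as fh:
            total.update(count_per_minute(fh))
    for minute, n in sorted(total.items()):
        print(minute, n)
```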

Maybe I misconfigured something, or maybe some hidden background job is messing things up. I no longer know where to look.
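Since the problem starts at the same minute every week, one cheap check for a hidden background job is to test every cron entry against Saturday 06:00. A minimal sketch, assuming standard five-field cron syntax: it handles `*`, numbers, `a-b` ranges, `,` lists and `/n` steps, but not names such as `sat` or `@weekly`-style macros (those lines are skipped). `parse_field`, `cron_matches` and `jobs_firing_at` are hypothetical helpers, not part of any cron package. As in crontab(5), when both day-of-month and day-of-week are restricted, a match on either one counts.

```python
from datetime import datetime


def parse_field(field, lo, hi):
    """Expand one cron field (e.g. "*/15", "1-5", "0,30") into a set of ints."""
    values = set()
    for part in field.split(","):
        step = 1
        has_step = "/" in part
        if has_step:
            part, step_text = part.split("/", 1)
            step = int(step_text)
        if part == "*":
            start, end = lo, hi
        elif "-" in part:
            a, b = part.split("-", 1)
            start, end = int(a), int(b)
        else:
            start = int(part)
            end = hi if has_step else start
        values.update(range(start, end + 1, step))
    return values


def cron_matches(expr, when):
    """True if the first five fields of a cron line fire at datetime `when`."""
    fields = expr.split()
    if len(fields) < 5:
        raise ValueError("need at least five fields")
    minute, hour, dom, month, dow = fields[:5]
    # cron weekday: 0 or 7 = Sunday ... 6 = Saturday; Python: Monday = 0 ... Sunday = 6.
    cron_dow = (when.weekday() + 1) % 7
    dow_values = {0 if v == 7 else v for v in parse_field(dow, 0, 7)}
    dom_ok = when.day in parse_field(dom, 1, 31)
    dow_ok = cron_dow in dow_values
    if dom.startswith("*") or dow.startswith("*"):
        day_ok = dom_ok and dow_ok
    else:
        # Both restricted: cron runs the job when either field matches.
        day_ok = dom_ok or dow_ok
    return (when.minute in parse_field(minute, 0, 59)
            and when.hour in parse_field(hour, 0, 23)
            and when.month in parse_field(month, 1, 12)
            and day_ok)


def jobs_firing_at(crontab_text, when):
    """Return the cron lines in crontab_text that would fire at `when`."""
    hits = []
    for line in crontab_text.splitlines():
        s = line.strip()
        # Skip blanks, comments, @macros and VAR=value lines.
        if not s or s.startswith(("#", "@")) or "=" in s.split()[0]:
            continue
        if len(s.split()) < 6:
            continue
        try:
            if cron_matches(s, when):
                hits.append(s)
        except ValueError:
            # Unsupported syntax (e.g. "sat", "jan") is skipped in this sketch.
            continue
    return hits


if __name__ == "__main__":
    sample = "0 6 * * 6 root /usr/local/bin/example-job\n"
    # 2021-09-11, the date in the mon log line above, was a Saturday.
    print(jobs_firing_at(sample, datetime(2021, 9, 11, 6, 0)))
```

Feeding it `/etc/crontab`, the files in `/etc/cron.d/` and each user's `crontab -l` output, for every minute from roughly 05:45 to 06:05, would also catch jobs that start shortly before the incident. systemd timers are not cron entries; `systemctl list-timers --all` lists those separately.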

Please help!

proxmox-ve: 6.4-1 (running kernel: 5.4.128-1-pve)

pve-manager: 6.4-13 (running version: 6.4-13/9f411e79)

pve-kernel-5.4: 6.4-5

pve-kernel-helper: 6.4-5

pve-kernel-5.3: 6.1-6

pve-kernel-5.4.128-1-pve: 5.4.128-2

pve-kernel-5.4.114-1-pve: 5.4.114-1

pve-kernel-5.4.78-2-pve: 5.4.78-2

pve-kernel-5.4.78-1-pve: 5.4.78-1

pve-kernel-5.3.18-3-pve: 5.3.18-3

ceph: 14.2.22-pve1

ceph-fuse: 14.2.22-pve1

corosync: 3.1.2-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: 0.8.35+pve1

libjs-extjs: 6.0.1-10

libknet1: 1.20-pve1

libproxmox-acme-perl: 1.1.0

libproxmox-backup-qemu0: 1.1.0-1

libpve-access-control: 6.4-3

libpve-apiclient-perl: 3.1-3

libpve-common-perl: 6.4-3

libpve-guest-common-perl: 3.1-5

libpve-http-server-perl: 3.2-3

libpve-storage-perl: 6.4-1

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.6-2

lxcfs: 4.0.6-pve1

novnc-pve: 1.1.0-1

openvswitch-switch: 2.12.3-1

proxmox-backup-client: 1.1.13-2

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.6-1

pve-cluster: 6.4-1

pve-container: 3.3-6

pve-docs: 6.4-2

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-4

pve-firmware: 3.2-4

pve-ha-manager: 3.1-1

pve-i18n: 2.3-1

pve-qemu-kvm: 5.2.0-6

pve-xtermjs: 4.7.0-3

qemu-server: 6.4-2

smartmontools: 7.2-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 2.0.5-pve1~bpo10+1


JSON:

    {
      "election_epoch": 300546,
      "quorum": [
        0,
        1,
        2
      ],
      "quorum_names": [
        "hds01-pipcephn1",
        "hds01-pipcephn3",
        "hds01-pipcephn2"
      ],
      "quorum_leader_name": "hds01-pipcephn1",
      "quorum_age": 16182,
      "monmap": {
        "epoch": 11,
        "fsid": "ebf3a0fb-7ac7-4ee4-b8b0-c9426f497ee0",
        "modified": "2021-02-20 16:25:40.801051",
        "created": "2020-03-18 00:33:16.936610",
        "min_mon_release": 14,
        "min_mon_release_name": "nautilus",
        "features": {
          "persistent": [
            "kraken",
            "luminous",
            "mimic",
            "osdmap-prune",
            "nautilus"
          ],
          "optional": []
        },
        "mons": [
          {
            "rank": 0,
            "name": "hds01-pipcephn1",
            "public_addrs": {
              "addrvec": [
                {
                  "type": "v2",
                  "addr": "192.168.63.11:3300",
                  "nonce": 0
                },
                {
                  "type": "v1",
                  "addr": "192.168.63.11:6789",
                  "nonce": 0
                }
              ]
            },
            "addr": "192.168.63.11:6789/0",
            "public_addr": "192.168.63.11:6789/0"
          },
          {
            "rank": 1,
            "name": "hds01-pipcephn3",
            "public_addrs": {
              "addrvec": [
                {
                  "type": "v2",
                  "addr": "192.168.63.13:3300",
                  "nonce": 0
                },
                {
                  "type": "v1",
                  "addr": "192.168.63.13:6789",
                  "nonce": 0
                }
              ]
            },
            "addr": "192.168.63.13:6789/0",
            "public_addr": "192.168.63.13:6789/0"
          },
          {
            "rank": 2,
            "name": "hds01-pipcephn2",
            "public_addrs": {
              "addrvec": [
                {
                  "type": "v2",
                  "addr": "192.168.63.12:3300",
                  "nonce": 0
                },
                {
                  "type": "v1",
                  "addr": "192.168.63.12:6789",
                  "nonce": 0
                }
              ]
            },
            "addr": "192.168.63.12:6789/0",
            "public_addr": "192.168.63.12:6789/0"
          }
        ]
      }
    }

P.S. Excuse my poor English.
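The quorum status JSON above looks like the output of `ceph quorum_status --format json`, and it can be checked programmatically, which is handy for sampling it every few minutes during the Saturday window. A minimal sketch; `out_of_quorum` and `summarize` are hypothetical helpers that read only the `quorum`, `quorum_leader_name`, `quorum_age`, `election_epoch` and `monmap.mons` fields shown in that JSON, and `quorum_check.py` / `qs.json` are placeholder file names.

```python
import json
import sys


def out_of_quorum(status):
    """Names of monitors present in the monmap whose rank is not in `quorum`."""
    in_quorum = set(status.get("quorum", []))
    return [mon["name"] for mon in status["monmap"]["mons"]
            if mon["rank"] not in in_quorum]


def summarize(status):
    """Pick out the fields most relevant to repeated monitor elections."""
    return {
        "leader": status.get("quorum_leader_name"),
        "election_epoch": status.get("election_epoch"),
        "quorum_age_s": status.get("quorum_age"),
        "out_of_quorum": out_of_quorum(status),
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (placeholder names): ceph quorum_status --format json > qs.json
    #                            python3 quorum_check.py qs.json
    with open(sys.argv[1]) as fh:
        print(json.dumps(summarize(json.load(fh)), indent=2))
```

Because `election_epoch` grows with every election, comparing samples taken before and after an incident gives a rough measure of how much election churn occurred.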
