Hi! I am currently testing disk performance with different setups to find out what works best, and I am observing strange behavior, or I am simply doing something wrong here.

My Setup:

- ProLiant ML30 G10 / 64GB RAM (not a rocket, but should do)

- Broadcom HBA 9400-16i with a 4-drive U.3 enclosure and (after some trouble) the correct cables.

- 4x Micron 7450 Pro Drives 1920GB

- Everything is recognized correctly in BIOS/HBA/Proxmox.

- Evaluation Version of MS Server 2025 Standard, VirtIO drivers installed, Updates installed, PVE 8.4.0

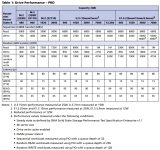

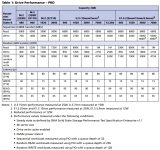

Drive Specs:

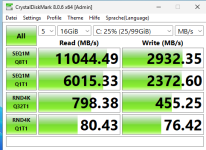

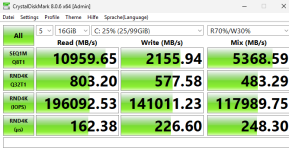

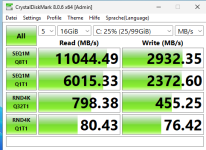

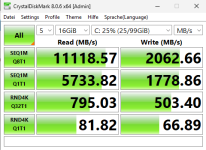

First Test:

- Single Drive, lvm-thin, just to get a feeling for the drive performance:

The RND4K read IOPS are far lower than in the specs; the rest looks like a nice starting point.

Note: I always made sure that the server had booted completely and that CPU load was as low as possible, so that other processes would not interfere.

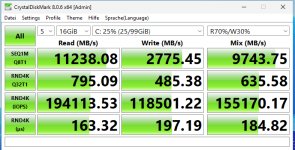

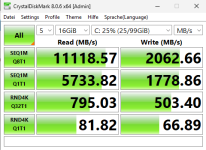

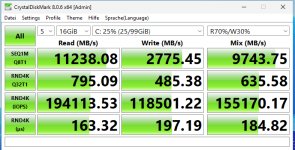

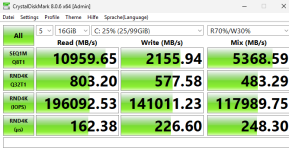

Second Test:

- RAIDZ with all 4 drives

My expectations: reads up to 2-3 times faster, writes at the same speed or a bit slower.
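For reference, here is a rough back-of-the-envelope sketch of where a "2-3 times" read expectation can come from. It is not a measurement. It assumes the commonly quoted rule of thumb that streaming throughput of a RAIDZ vdev scales with the number of data drives (total minus parity), while random IOPS stay close to those of a single drive. The function name `raidz_naive_scaling` and the returned keys are made up for illustration, and padding, caching, and virtualization overhead are ignored.

```python
def raidz_naive_scaling(n_drives: int, parity: int = 1) -> dict:
    """Naive rule-of-thumb scaling factors for one RAIDZ vdev.

    Hypothetical helper for illustration only: real results depend on
    recordsize/volblocksize, caching, sync settings, and the guest I/O path.
    """
    if parity < 1 or n_drives <= parity:
        raise ValueError("need at least one parity drive and one data drive")

    data_drives = n_drives - parity
    return {
        # Drives that hold data rather than parity in each stripe.
        "data_drives": data_drives,
        # Ideal usable share of raw capacity, ignoring padding and metadata.
        "usable_fraction": data_drives / n_drives,
        # Rule of thumb: streaming throughput scales with the data drives.
        "streaming_factor": data_drives,
        # Rule of thumb: random IOPS stay near those of a single drive.
        "random_iops_factor": 1,
    }


if __name__ == "__main__":
    # 4 drives in RAIDZ1, as in the second test.
    for key, value in raidz_naive_scaling(4).items():
        print(f"{key}: {value}")
```

Under these assumptions, only the sequential numbers would be expected to improve over the single-drive test; the RND4K numbers would not.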

My results:

Am I missing something here, or are my expectations simply too high?

Thank you very much for any feedback / hints / etc.!
