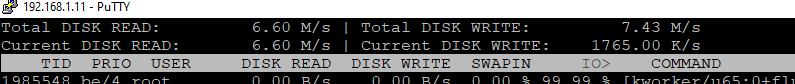

Hello, I have a weird problem I can't understand. I have a couple of cheap 1 TB Kingston SSDs (yes, I know that comes with its own problems) that I have been running in software RAID 1. I've been running my VMs off this RAID pool, but what I have found is that after about 6 GB of writes, the speed drops all the way down to 8 MB/s, and then of course the I/O delay goes through the roof and all my VMs slow down.

Then I started a bit of testing by temporarily moving the VMs over to an HDD, since the SSDs were over 80% full, and I know that can destroy performance.

So I started testing with different kinds of copy tests. First I tested the drives right after emptying them, with the same result. Then I broke up the RAID and tested the SSDs separately, with still the same-ish results (no longer RAID, so the numbers changed a bit). I created the RAID again (that took almost 24 h to rebuild) and tested again, with the same results.
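For anyone who wants to reproduce this kind of copy test, here is a minimal sketch in Python of how the slowdown could be measured. It is not the exact test I ran: the function name `measure_write_throughput` and its parameters are made up for illustration. It writes incompressible data in chunks, forces it to disk with `fsync` at the end of each window, and reports the throughput per window, so a drop after a few GB should show up as a sudden fall in the numbers.

```python
import os
import time


def measure_write_throughput(path, total_bytes, chunk_bytes=1 << 20, window_bytes=1 << 30):
    """Write total_bytes to path; return a list of (bytes_written_so_far, MB/s) per window.

    Hypothetical helper for illustration only. Throughput is measured per window
    and includes the fsync, so the page cache cannot hide a slow drive.
    """
    chunk = os.urandom(chunk_bytes)  # random data, so the SSD cannot compress it
    results = []
    written = 0
    window_written = 0
    window_start = time.perf_counter()
    with open(path, "wb") as f:
        while written < total_bytes:
            n = min(chunk_bytes, total_bytes - written)
            f.write(chunk[:n])
            written += n
            window_written += n
            if window_written >= window_bytes or written == total_bytes:
                f.flush()
                os.fsync(f.fileno())  # make sure the window really reached the disk
                elapsed = time.perf_counter() - window_start
                results.append((written, window_written / 1e6 / max(elapsed, 1e-9)))
                window_written = 0
                window_start = time.perf_counter()
    return results


if __name__ == "__main__":
    # Assumption: /mnt/ssdtest is where the SSD under test is mounted.
    for done, rate in measure_write_throughput("/mnt/ssdtest/testfile", 40 * (1 << 30)):
        print(f"{done / (1 << 30):5.1f} GiB written, last window: {rate:8.1f} MB/s")
```

With the default 1 GiB window, a 40 GiB run prints one line per GiB, which makes it easy to see at which point the speed falls off.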

Then I got a buddy of mine who also runs Proxmox to test on his Kingston drives. The only difference is that he has 2x 240 GB drives, and he got the same results. Both of us are running Proxmox 7 with the latest updates available at the time (I don't remember the exact kernel, but it was around 01.03.2022).

So I thought the drives must just be THAT bad, but today I tested them on a separate Windows machine, and to my surprise, both standalone and in Windows 11 software RAID, they performed EXCELLENTLY, even with a 40 GB file transfer.

So my question is: how can there be a difference of 450 MB/s in transfer speed after 6 GB copied between Proxmox and Windows 11?

And what else can I try to get them to work properly?
