Hello.

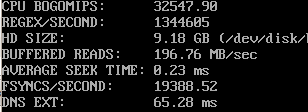

I've been reading about performance, and I think this system's I/O should be better, but I can't find the problem.

It's a Core i5-2400 with two Toshiba DT01ACA200 SATA hard disks. There is no RAID in use. One HD is for system and VMs and the other for backups.

SMART reports no problems, and there is almost no difference with the disk write cache on or off.

Code:

root@servidor02:~# hdparm -W /dev/sda

/dev/sda:

write-caching = 1 (on)

I think the partitions are aligned; I've read that misalignment can be a problem with LVM.

Code:

root@servidor02:~# parted /dev/sda u b print

Model: ATA TOSHIBA DT01ACA2 (scsi)

Disk /dev/sda: 2000398934016B

Sector size (logical/physical): 512B/4096B

Partition Table: msdos

Number Start End Size Type File system Flags

1 2097152B 10486809087B 10484711936B primary ext4 boot

2 10487857152B 12584174079B 2096316928B primary linux-swap(v1)

3 12585009152B 2000398934015B 1987813924864B primary lvm

The filesystem is ext4, but I don't think it can degrade performance this much, can it?
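Going back to the partition table above, the alignment claim can be checked with simple arithmetic. This is a minimal Python sketch, assuming the start offsets are copied by hand from the `parted` output and that alignment to both the 4096-byte physical sector and a 1 MiB boundary is what matters; the names `PARTITION_STARTS`, `is_aligned` and `alignment_report` are made up for illustration.

```python
# Start offsets in bytes, copied by hand from `parted /dev/sda u b print` above.
PARTITION_STARTS = {
    "sda1": 2097152,
    "sda2": 10487857152,
    "sda3": 12585009152,
}

PHYSICAL_SECTOR = 4096   # physical sector size reported by parted
MIB = 1024 * 1024        # common 1 MiB alignment boundary (assumption)


def is_aligned(offset, boundary):
    """Return True when the byte offset falls exactly on the boundary."""
    return offset % boundary == 0


def alignment_report(starts):
    """Map each partition to (aligned to 4096 B, aligned to 1 MiB)."""
    return {
        name: (is_aligned(offset, PHYSICAL_SECTOR), is_aligned(offset, MIB))
        for name, offset in starts.items()
    }


if __name__ == "__main__":
    for name, (sector_ok, mib_ok) in alignment_report(PARTITION_STARTS).items():
        print(f"{name}: 4096B aligned={sector_ok}, 1MiB aligned={mib_ok}")
```

All three start offsets are multiples of both 4096 bytes and 1 MiB, so the partitions themselves look aligned. This says nothing about where LVM places its data inside sda3; `pvs -o +pe_start` should show that offset.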

Code:

root@servidor02:~# cat /etc/fstab

# <file system> <mount point> <type> <options> <dump> <pass>

/dev/sda1 / ext4 errors=remount-ro 0 1

/dev/sda2 swap swap defaults 0 0

/dev/vg1/lv1 /var/lib/vz ext4 defaults,usrquota 0 2

/dev/sdb1 /backups ext4 defaults 0 3

proc /proc proc defaults 0 0

sysfs /sys sysfs defaults 0 0

But performance, especially fsyncs/second, is very low.

Code:

root@servidor02:~# pveversion -v

pve-manager: 2.2-32 (pve-manager/2.2/3089a616)

running kernel: 2.6.32-16-pve

proxmox-ve-2.6.32: 2.2-80

pve-kernel-2.6.32-16-pve: 2.6.32-82

lvm2: 2.02.95-1pve2

clvm: 2.02.95-1pve2

corosync-pve: 1.4.4-1

openais-pve: 1.1.4-2

libqb: 0.10.1-2

redhat-cluster-pve: 3.1.93-2

resource-agents-pve: 3.9.2-3

fence-agents-pve: 3.1.9-1

pve-cluster: 1.0-34

qemu-server: 2.0-72

pve-firmware: 1.0-21

libpve-common-perl: 1.0-41

libpve-access-control: 1.0-25

libpve-storage-perl: 2.0-36

vncterm: 1.0-3

vzctl: 4.0-1pve2

vzprocps: 2.0.11-2

vzquota: 3.1-1

pve-qemu-kvm: 1.3-10

ksm-control-daemon: 1.1-1

Code:

root@servidor02:~# pveperf /var/lib/vz

CPU BOGOMIPS: 24743.28

REGEX/SECOND: 1362869

HD SIZE: 984.31 GB (/dev/mapper/vg1-lv1)

BUFFERED READS: 154.23 MB/sec

AVERAGE SEEK TIME: 14.03 ms

FSYNCS/SECOND: 35.92

DNS EXT: 36.25 ms

DNS INT: 3.01 ms (ovh.net)

At the moment the server is very lightly used, but that is going to change shortly, so I would like to improve these values.
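To cross-check the FSYNCS/SECOND figure outside of pveperf, a rough Python sketch like the one below could be used. It is not how pveperf measures; it only assumes that writing a small block and calling `fsync` in a loop gives a comparable order of magnitude. The function names, the 4096-byte payload and the 2-second duration are arbitrary choices for illustration.

```python
import os
import tempfile
import time


def measure_fsyncs_per_second(directory, duration=2.0, payload=b"x" * 4096):
    """Write small blocks to a temp file in `directory`, fsync after each
    write, and return the number of fsyncs completed per second."""
    count = 0
    with tempfile.NamedTemporaryFile(dir=directory) as tmp:
        fd = tmp.fileno()
        start = time.monotonic()
        while time.monotonic() - start < duration:
            os.write(fd, payload)
            os.fsync(fd)   # force the data down to the storage device
            count += 1
        elapsed = time.monotonic() - start
    return count / elapsed


def ms_per_fsync(fsyncs_per_second):
    """Convert an fsync rate into the average time each fsync takes, in ms."""
    return 1000.0 / fsyncs_per_second


if __name__ == "__main__":
    # Test the VM storage path if it exists, otherwise fall back to the temp dir.
    target = "/var/lib/vz" if os.path.isdir("/var/lib/vz") else tempfile.gettempdir()
    rate = measure_fsyncs_per_second(target)
    print(f"{target}: {rate:.2f} fsyncs/s, {ms_per_fsync(rate):.2f} ms per fsync")
```

For the numbers above, 35.92 fsyncs/second works out to about 27.8 ms per fsync, which is roughly twice the 14.03 ms average seek time pveperf reported. That would fit each fsync costing about two head movements on this disk, although that is only a guess from the arithmetic.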

Any thoughts, please?

Regards.