Hi.

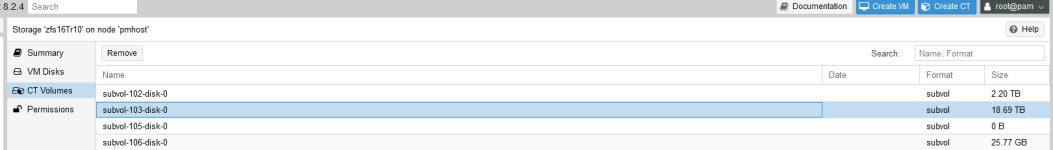

I'm running Proxmox VE 5.1 on a single node; the filesystem is on ZFS (ZRAID1) and all the VM disks are on local ZFS pools.

When trying to expand the disk of VM 100 from 80 GB to 160 GB, I entered the size in MB instead of GB, so now I have an 80 TB drive instead of a 160 GB one (on a 240 GB drive...).

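To illustrate the arithmetic of the mix-up, here is a minimal Python sketch. It assumes the resize field adds an increment in GB and that the number typed was 80000 (80 GB expressed in MB); the exact value entered and the helper name `resulting_size_gb` are assumptions for illustration only, not something taken from Proxmox.

```python
def resulting_size_gb(current_gb, increment_typed):
    """Return the new disk size, assuming the typed number is added as GB."""
    return current_gb + increment_typed


# Intended: grow the 80 GB disk by 80 GB.
intended_gb = resulting_size_gb(80, 80)

# Assumed mistake: the increment was typed as if the unit were MB.
actual_gb = resulting_size_gb(80, 80 * 1000)

print(f"intended: {intended_gb} GB")
print(f"actual:   {actual_gb} GB (about {actual_gb // 1000} TB)")
```

Under those assumptions the disk ends up at roughly 80 TB, which matches what the storage section reports.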

I tried editing the config file directly (/etc/pve/local/qemu-server/100.conf), and now the size is correct in the VM options, but inside the actual VM and in the storage section it still shows 80 TB.



Code:

virtio0: local-zfs:vm-100-disk-1,size=120G

How can I fix it?

Thanks.
