[SOLVED] Shared HW RAID / LVM

- Thread starter jm-triuso



How to share a local disk behind a HW RAID (MegaRAID) controller?

A bit more information is required. What did you have in mind as the final solution? What should it be able to do for you?

Using iscsi-tgt (block) or smbd/nfsd (file). Might want to read this: https://en.wikipedia.org/wiki/Network-attached_storage
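A minimal sketch of the iscsi-tgt (block) route, run on the node that owns the RAID volume. The device `/dev/sdb` and the IQN are hypothetical placeholders, and the script only prints the `tgtadm` commands unless `DRY_RUN=0` is set. As far as I know, targets created with `tgtadm` at runtime do not survive a restart of `tgtd`; a persistent setup would go into the tgt configuration files instead.

```shell
#!/bin/sh
# Sketch: export a local block device over iSCSI with tgt (iscsi-tgt).
# DEVICE and IQN are hypothetical placeholders; adjust them to your setup.
set -eu

DEVICE="${DEVICE:-/dev/sdb}"                      # hypothetical RAID volume
IQN="${IQN:-iqn.2024-01.com.example:tr-raid01}"   # hypothetical target name

# Print each command by default; execute it only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    printf '+ %s\n' "$*"
  fi
}

# 1. Create target ID 1 with the chosen IQN.
run tgtadm --lld iscsi --op new --mode target --tid 1 --targetname "$IQN"
# 2. Attach the block device as LUN 1 of that target.
run tgtadm --lld iscsi --op new --mode logicalunit --tid 1 --lun 1 --backing-store "$DEVICE"
# 3. Allow initiators to log in; ALL accepts any address, so restrict it in practice.
run tgtadm --lld iscsi --op bind --mode target --tid 1 --initiator-address ALL
```

Note that this makes the exporting node a dependency for every other node that uses the LUN.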

I will specify the problem and my goals as @mariol requested, although I still need to discuss it a little more. Thank you for the info and the link anyway.

My situation: I have 2 Supermicro servers with Proxmox VE installed as nodes in a cluster, running multiple VMs and CTs. Any time I need to migrate a VM/CT from one node to the other, I have to use shared disk space (in my case a NAS): first I move the vHDD to the NAS, then I migrate the VM/CT to the other cluster node, and finally I move the vHDD from the NAS to that node.

The goal: I would like to have at least 3 Supermicro server nodes in the cluster and use their own HW-backed RAIDs for migrations, HA and/or replication. In other words, all nodes should additionally be able to read from and write to each other's RAID volumes.

Additional question: Do I understand correctly that, should I use iSCSI, only one node would be able to write to the volume at a time?

Thanks for your feedback, I think I now know where the journey should go. Basically, you can see the storage types and which ones can be shared here.

What storage type do you use on your nodes?

Thank you for the link, I have already read something about it there.

The nodes currently have LVMs configured (locally, not via the cluster). But obviously (AFAIK) I cannot share them like that.

Please post the output of "pvesm status".

Node 01:

```
root@tr-pve-01:~# pvesm status
Name                Type     Status           Total            Used       Available        %
local                dir     active        13149676         2846440         9613472   21.65%
local-lvm        lvmthin     active        11366400               0        11366400    0.00%
nas03                nfs     active      1596868608       991944704       604383232   62.12%
tr-vmware-05        esxi     active               0               0               0    0.00%
trpve01-vg01         lvm     active      2339835904       142606336      2197229568    6.09%
trpve02-vg01         lvm   disabled               0               0               0      N/A
root@tr-pve-01:~#
```

Node 02:

Code:

root@tr-pve-02:~# pvesm status

Name Type Status Total Used Available %

local dir active 13149676 2852420 9607492 21.69%

local-lvm lvmthin active 11366400 0 11366400 0.00%

nas03 nfs active 1596868608 992190464 604137472 62.13%

tr-vmware-05 esxi active 0 0 0 0.00%

trpve01-vg01 lvm disabled 0 0 0 N/A

trpve02-vg01 lvm active 2339835904 0 2339835904 0.00%

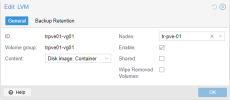

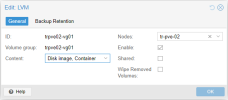

root@tr-pve-02:~#I am not sure, why exactly there are disabled VGs in both nodes. Obviously I have tested, if I can share them to each other, but I already disable the sharing and restrict them to their nodes. Please see the screenshots below:
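The `disabled` entries line up with the node restriction: as I understand it, a storage definition limited to certain nodes still shows up in `pvesm status` on the other nodes, just with status `disabled`. A small sketch that pulls such entries out of the `pvesm status` output (the helper name `inactive_storages` is made up for this example):

```shell
#!/bin/sh
# Sketch: list storages that `pvesm status` does not report as "active".
# inactive_storages is a hypothetical helper; it reads pvesm output on stdin.
set -eu

inactive_storages() {
  # Skip the header line (NR > 1) and blank lines (NF >= 3), then print the
  # Name column of every row whose Status column is not "active".
  awk 'NR > 1 && NF >= 3 && $3 != "active" { print $1 }'
}

# Usage on a PVE node:
#   pvesm status | inactive_storages
```

Fed the output pasted above, it prints `trpve02-vg01` on node 01 and `trpve01-vg01` on node 02, i.e. each node's VG storage is only active on the node it is restricted to.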

2 Node Cluster:

You can also migrate live between the nodes using LVM and LVM-Thin. I recommend LVM-Thin as it also allows snapshots.

Requirements/Steps LVM-Thin:

- LVM-Thin must have the same name on each node

- On the first node where you create LVM-Thin, you also add the storage (checkbox “Add Storage”)

- Leave the checkbox unticked on the second node

- Then edit your LVM-Thin storage under Datacenter -> Storage and remove the Nodes restriction
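The steps above use the GUI; a CLI sketch that reaches the same end state could look like this. Assumptions, none of them from the thread: an empty RAID volume `/dev/sdb` on every node, a VG called `vmdata` and a thin pool and storage both called `vmthin`. The existing VGs here are named differently per node (`trpve01-vg01` vs. `trpve02-vg01`), which is exactly what prevents a single definition covering both nodes. Because `pvesm add` without `--nodes` creates the storage for all nodes, the "remove the restriction" step is only needed if the storage was added through the GUI; `--delete nodes` is my reading of the `pvesm set` options, so check `man pvesm` first. `pvcreate`/`vgcreate` claim the device, hence the dry-run default.

```shell
#!/bin/sh
# Sketch: identically named LVM-Thin pool on every node, one cluster-wide storage.
# DEVICE, VG, POOL and STORAGE are hypothetical. Dry run unless DRY_RUN=0.
set -eu

DEVICE="${DEVICE:-/dev/sdb}"
VG="${VG:-vmdata}"
POOL="${POOL:-vmthin}"
STORAGE="${STORAGE:-vmthin}"

run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else printf '+ %s\n' "$*"; fi
}

# Run on EVERY node: VG and thin pool names must be identical across nodes.
prepare_node() {
  run pvcreate "$DEVICE"
  run vgcreate "$VG" "$DEVICE"
  # 95% leaves headroom in the VG for the thin pool's metadata.
  run lvcreate --type thin-pool -l 95%FREE -n "$POOL" "$VG"
}

# Run ONCE on any node: storage.cfg is cluster-wide; no --nodes means all nodes.
add_storage() {
  run pvesm add lvmthin "$STORAGE" --vgname "$VG" --thinpool "$POOL" --content images,rootdir
}

# Only if the storage was added via the GUI and is restricted to one node.
lift_node_restriction() {
  run pvesm set "$STORAGE" --delete nodes
}

case "${1:-}" in
  node) prepare_node ;;
  storage) add_storage ;;
  unrestrict) lift_node_restriction ;;
  *) echo "usage: $0 node|storage|unrestrict" >&2 ;;
esac
```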

If you use ZFS [1], this is even more elegant, because you have an additional replication feature [2] where you can, for example, automatically replicate your VMs every 5 minutes. This has the advantage that the VM data is available on your second host and a live migration only has to copy the changed data. You could also add a third node for replication.
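For the replication feature [2], a job that replicates one guest to the second node every 5 minutes could be created roughly as follows. VM ID `100` and target `tr-pve-02` are placeholders, and it assumes the guest's disks sit on a ZFS storage with the same name on both nodes. `*/5` is the PVE calendar-event notation for "every five minutes".

```shell
#!/bin/sh
# Sketch: storage replication job (pvesr) for one guest, every 5 minutes.
# VMID and TARGET are hypothetical placeholders. Dry run unless DRY_RUN=0.
set -eu

VMID="${VMID:-100}"
TARGET="${TARGET:-tr-pve-02}"
JOB_ID="${VMID}-0"   # replication job IDs have the form <guest id>-<job number>

run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else printf '+ %s\n' "$*"; fi
}

# Create the job, then show the state of all replication jobs on this node.
run pvesr create-local-job "$JOB_ID" "$TARGET" --schedule "*/5"
run pvesr status
```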

3 Node Cluster HA:

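One possibility would be central storage (SAN/NAS) attached via FC or iSCSI. However, you then have a single point of failure: the central storage itself.

Ceph would be the choice of the hour here, but it has completely different requirements. Please take a look at the documentation [3] on this topic.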
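On the PVE side, the central-storage variant is commonly set up as an iSCSI storage carrying the LUN plus an LVM storage on top of it marked as shared. As I understand it, each VM disk is then its own logical volume that PVE activates only on the node running the guest, so all nodes can see the same LUN without writing to the same disk at once. Portal address, IQN, storage IDs and VG name are hypothetical, and the VG is assumed to already exist on the LUN.

```shell
#!/bin/sh
# Sketch: shared LVM on top of an iSCSI LUN for a PVE cluster.
# PORTAL, TARGET_IQN, VG and the storage IDs are hypothetical. Dry run unless DRY_RUN=0.
set -eu

PORTAL="${PORTAL:-192.0.2.10}"
TARGET_IQN="${TARGET_IQN:-iqn.2024-01.com.example:tr-raid01}"
VG="${VG:-san_vg01}"

run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else printf '+ %s\n' "$*"; fi
}

# iSCSI storage used only to bring in the LUN (no VM images stored on it directly).
run pvesm add iscsi san01 --portal "$PORTAL" --target "$TARGET_IQN" --content none
# LVM storage on the VG living on that LUN; --shared 1 marks it as visible to all nodes.
run pvesm add lvm san01-lvm --vgname "$VG" --shared 1 --content images,rootdir
```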

[1] https://pve.proxmox.com/pve-docs/pve-admin-guide.html#chapter_zfs

[2] https://pve.proxmox.com/pve-docs/pve-admin-guide.html#chapter_pvesr

[3] https://pve.proxmox.com/pve-docs/pve-admin-guide.html#chapter_pveceph

Thank you for the info. Snapshots are useful for me.

This is actually a little bit funny. I had that idea (giving the volume the same name on both nodes) over a year ago when I started with Proxmox, and then for some reason I discarded it. But thank you, this is the easiest way of sharing the volumes.

From now on, you are even able to move your VMs between the nodes online. The prerequisite is the same CPU on both nodes.
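With an identically named local LVM-Thin storage on both nodes, an online migration that also copies the local disks can be started from the CLI roughly like this. VM ID `100` and target `tr-pve-02` are placeholders. As far as I know, the same-CPU requirement matters most when the VM's CPU type is set to `host`.

```shell
#!/bin/sh
# Sketch: live-migrate a running VM together with its local disks.
# VMID and TARGET are hypothetical placeholders. Dry run unless DRY_RUN=0.
set -eu

VMID="${VMID:-100}"
TARGET="${TARGET:-tr-pve-02}"

run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else printf '+ %s\n' "$*"; fi
}

# --online keeps the VM running; --with-local-disks copies disks on non-shared storage.
run qm migrate "$VMID" "$TARGET" --online --with-local-disks
```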


IINM, ZFS is not recommended on top of HW-backed RAIDs: https://pve.proxmox.com/pve-docs/pve-admin-guide.html#_hardware_2

"Do not use ZFS on top of a hardware RAID controller which has its own cache management. ZFS needs to communicate directly with the disks. An HBA adapter or something like an LSI controller flashed in “IT” mode is more appropriate."

I will take a look later, once the 3rd node is prepared. Thank you for the info.



I'm glad I could help

One possibility would be to either configure the RAID controller in HBA mode or replace it with a real HBA controller.