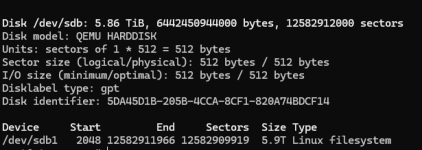

Two nodes, plus a third node that has no access to the ZPool; it is just a tiebreaker for quorum. No snapshotting. Backups are handled by Veeam to its own hardware (in other words, no backups are configured in the Proxmox web interface). Everything was migrated from a Hyper-V machine where the 7TB was thick-provisioned, so that's the absolute MAXIMUM the data should be. Converted to RAW with compression etc., it should be roughly 5TB on disk. The PHYSICAL server these images all came from was 14TB total. They were migrated to 2 identical 32TB servers, with ZFS3 enabled. EVEN IF that only left me with 17TB of usable space, I should be under 15TB even if every virtual drive was full (they aren't even close).
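To spell out the arithmetic I'm relying on, here is a rough sketch using only the figures above. The variable names are just mine for illustration, and the 17TB "usable" figure is my own pessimistic guess, not something reported by ZFS.

```python
# All values in TB, taken from the description above.
thick_provisioned = 7     # total thick-provisioned size on the old Hyper-V host
expected_on_disk = 5      # rough estimate after conversion to RAW with compression
source_server_total = 14  # total capacity of the physical server the images came from
raw_per_node = 32         # raw capacity of each new server
pessimistic_usable = 17   # assumed worst-case usable space per node (my guess)

# Headroom per node under each scenario.
headroom_if_source_was_full = pessimistic_usable - source_server_total
headroom_if_thick_disks_full = pessimistic_usable - thick_provisioned
headroom_expected = pessimistic_usable - expected_on_disk

print("Headroom if the whole source server was full:", headroom_if_source_was_full, "TB")
print("Headroom if every thick disk was full:", headroom_if_thick_disks_full, "TB")
print("Headroom at expected usage:", headroom_expected, "TB")
```

Even in the worst of these cases the headroom stays positive, which is why the pool filling up makes no sense to me.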

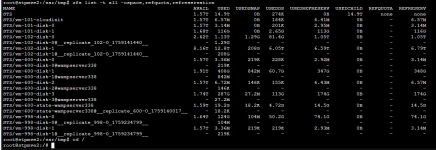

Anyway, I experienced an IO fault on one of the VMs on node2 ("the big one" with a single 6TB disk). To recover from it, I migrated one of the smaller machines from node2 to node1. I removed all replication jobs / HA settings through the GUI, but one of them kept saying removal pending, so I manually edited /etc/pve/replication.cfg and rebooted the node to get it to stop.

First question: how do I go about cleaning up the mess Replication has left behind?

Next question: what on earth did I do wrong?
