I have a VM using GPU passthrough, and it works fine: I can see the GPU inside the VM and interact with it normally. To use this GPU as my primary display, I need to _disable_ the implicit video device that Proxmox supplies to the VM, so I set `Hardware` > `Display` to `none` in the VM settings.

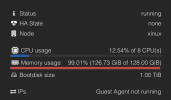

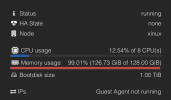

At this point the VM no longer boots. It just spins, consuming ~12% CPU and ~100% memory.

This is obviously very bad, so I went through many rounds of adjusting my GRUB and Linux boot options to work out which (clearly video-related) setting was causing trouble. I was doing this headless since, recall, there is no display. Even after adding a virtual serial port, `qm terminal` yielded nothing, which gave me an idea.
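For anyone wanting to reproduce the headless setup, the settings described so far should correspond to roughly the following lines in the VM's config file. This is a sketch rather than a copy of my actual config: `<vmid>` is a placeholder, and `serial0: socket` assumes the serial port was added with the default socket backend.

```text
# /etc/pve/qemu-server/<vmid>.conf (only the lines relevant here)
# Hardware > Display = none
vga: none
# Virtual serial port, reachable from the host with `qm terminal <vmid>`
serial0: socket
```

Note that `qm terminal` only shows output if the guest's bootloader and kernel are actually set up to use the serial console (for example with `console=ttyS0` on the kernel command line).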

On a whim I tried OVMF firmware by changing `Hardware` > `BIOS` to `OVMF` (previously `SeaBIOS`, the default). To my surprise, the _behavior_ on the summary page was the same as above (~12% CPU and ~100% memory), but video output on a physical monitor actually worked. The firmware was running, but now it couldn't read my boot drive?

It clearly can read the _partition table_, but according to OVMF the contents of the drive are empty. This doesn't make much sense.

Can anyone give me insight into what's going on here? SeaBIOS boots only with a valid `Display`; OVMF seems to work with `Display=none` but fails because it finds no bootable media on the boot disk. Are these known issues? Can OVMF not boot VirtIO disks?

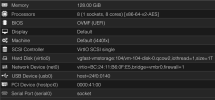

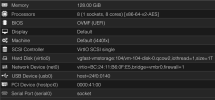

The VM config itself is fairly vanilla.

Recap:

SeaBIOS

- Boots VM fine with valid `Display` setting

- Hangs with high resource usage when `Display=none`

- During the hang with `Display=none`, the GPU outputs are not used (not all that surprising), so the screen is just black with no feedback about what's going on

OVMF

- Also hangs in boot with high resource usage (per summary page)

- Connected GPU outputs are used and display the UEFI shell shown above

- The VirtIO boot disk reads back with zero file content, but the UEFI shell seems to properly reflect the partition table layout in its BLK0,1,2,3 `map` output

- Setting `Display=none` or having a valid Display setting does not change this boot behavior; it fails the same way in either case

The VM under test is just the latest Debian Bookworm.
