PVE version: 8.2.2

Netbox Version: 4.0.1

Hello,

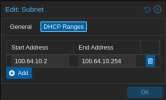

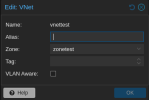

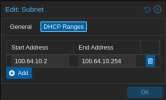

As stated in the title, I'm having issues deploying VMs when they are assigned to an SDN zone that uses Netbox for IPAM. When testing, I'm able to create a zone, vnet, subnet, and DHCP pool just fine and deploy a VM when that zone is set to use the default PVE IPAM. But when I then switch it to use Netbox as IPAM, I'm unable to even start the VM due to errors. Basically, the VM goes to start, finds an IP, allocates that IP in Netbox, then appears to fail to find the bridge with the virtual network interface assigned to the VM. The bridge exists, and I can see the VM interface for about half a second before it disappears from the host. Below are some screenshots and outputs from the Proxmox GUI, CLI, and Netbox. I followed the SDN guide from the PVE docs. I do have frr and dnsmasq installed and running as well.

SDN Setup:

VM Setup:

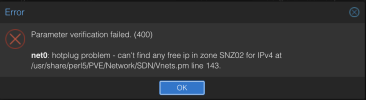

VM Start Errors:

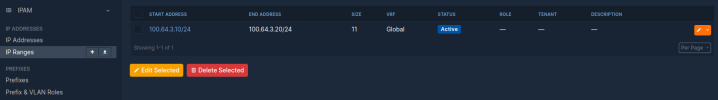

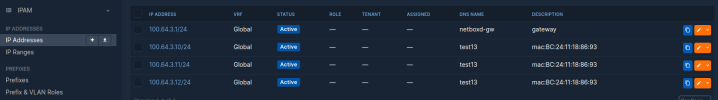

Netbox:

Interfaces:

Extra things we have tried:

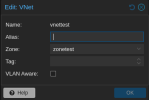

- Enabling VLAN Aware

- Manually turning vnettest bridge to UP

- Removing the DNS Prefix

- Rebuilding the whole stack

- Manually adding the Prefix, IP range, and the IP addresses to a specific VRF in Netbox

- We made sure the API calls to Netbox were correct and permissions were right for the token

- Checked firewall rules to the Netbox VM

- Added node firewall rules to accept DNS and DHCPfwd

- Updated the PVE cluster nodes

- Restarted dnsmasq for the zones

We are pretty new to using Netbox and this is our first attempt at using SDN within Proxmox so I'm hoping maybe we are just missing a simple option somewhere. The Prefix, and IP addresses dynamically configure in Netbox, but we do need to manually add the DHCP range of addresses to get the VM to successfully pull an address. Sorry for the wall of text and pictures, but I wanted to be through.

Thanks!

VM Start Errors:

```
root@test-pve-node1:~# qm start 100
can't find any free ip in zone zonetest for IPv4 at /usr/share/perl5/PVE/Network/SDN/Vnets.pm line 143.
found ip free 100.64.10.3 in range 100.64.10.2-100.64.10.254
kvm: -netdev type=tap,id=net0,ifname=tap100i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on: network script /var/lib/qemu-server/pve-bridge failed with status 6400
start failed: QEMU exited with code 1
```
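A side note on the `status 6400` in the kvm line above: if that number is a raw POSIX wait status (an assumption on my part, based on how such statuses are usually reported), it can be split into an exit code and a signal. The helper below is only a sketch of that decoding using the conventional Linux bit layout; `decode_wait_status` is a name I made up for illustration.

```python
def decode_wait_status(status: int) -> dict:
    """Split a raw POSIX wait status into its conventional Linux fields.

    Assumes the usual layout: low 7 bits hold the terminating signal
    (0 if the process exited normally), bit 7 is the core-dump flag,
    and the next 8 bits hold the exit code.
    """
    signal = status & 0x7F
    core_dumped = bool(status & 0x80)
    exit_code = (status >> 8) & 0xFF
    return {
        # The exit code is only meaningful when no signal killed the process.
        "exit_code": exit_code if signal == 0 else None,
        "signal": signal or None,
        "core_dumped": core_dumped,
    }


if __name__ == "__main__":
    # 6400 is the value reported for /var/lib/qemu-server/pve-bridge above.
    print(decode_wait_status(6400))
```

Under that assumption, 6400 corresponds to the pve-bridge script exiting normally with exit code 25 (6400 = 25 * 256), not being killed by a signal.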

```
root@test-pve-node1:~# systemctl status frr
● frr.service - FRRouting
     Loaded: loaded (/lib/systemd/system/frr.service; enabled; preset: enabled)
     Active: active (running) since Fri 2024-05-17 11:24:42 PDT; 2 days ago
       Docs: https://frrouting.readthedocs.io/en/latest/setup.html
   Main PID: 803 (watchfrr)
     Status: "FRR Operational"
      Tasks: 15 (limit: 9300)
     Memory: 29.6M
        CPU: 46.755s
     CGroup: /system.slice/frr.service
             ├─803 /usr/lib/frr/watchfrr -d -F traditional zebra bgpd staticd bfdd
             ├─821 /usr/lib/frr/zebra -d -F traditional -A 127.0.0.1 -s 90000000
             ├─849 /usr/lib/frr/bgpd -d -F traditional -A 127.0.0.1
             ├─867 /usr/lib/frr/staticd -d -F traditional -A 127.0.0.1
             └─874 /usr/lib/frr/bfdd -d -F traditional -A 127.0.0.1

May 20 09:05:33 test-pve-node1 bgpd[849]: [VCGF0-X62M1][EC 100663301] INTERFACE_STATE: Cannot find IF tap100i0 in VRF 0
May 20 09:06:59 test-pve-node1 bgpd[849]: [VCGF0-X62M1][EC 100663301] INTERFACE_STATE: Cannot find IF tap100i0 in VRF 0
May 20 10:32:47 test-pve-node1 bgpd[849]: [VCGF0-X62M1][EC 100663301] INTERFACE_STATE: Cannot find IF tap100i0 in VRF 0
```

Netbox:

Interfaces:

```
root@test-pve-node1:~# cat /etc/network/interfaces.d/sdn
#version:41

auto vnettest
iface vnettest
    address 100.64.10.1/24
    post-up iptables -t nat -A POSTROUTING -s '100.64.10.0/24' -o vmbr0 -j SNAT --to-source 172.18.7.201
    post-down iptables -t nat -D POSTROUTING -s '100.64.10.0/24' -o vmbr0 -j SNAT --to-source 172.18.7.201
    post-up iptables -t raw -I PREROUTING -i fwbr+ -j CT --zone 1
    post-down iptables -t raw -D PREROUTING -i fwbr+ -j CT --zone 1
    bridge_ports none
    bridge_stp off
    bridge_fd 0
    mtu 1460
    ip-forward on
```

```
root@test-pve-node1:~# ip link show
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master vmbr0 state UP mode DEFAULT group default qlen 1000
    link/ether 54:b2:03:0b:b0:8e brd ff:ff:ff:ff:ff:ff
    altname enp0s31f6
3: wlp1s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000
    link/ether 14:4f:8a:02:72:5e brd ff:ff:ff:ff:ff:ff
4: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000
    link/ether 54:b2:03:0b:b0:8e brd ff:ff:ff:ff:ff:ff
13: vnettest: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1460 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/ether 32:31:42:d4:44:ad brd ff:ff:ff:ff:ff:ff
```

Extra things we have tried:

- Enabling VLAN Aware

- Manually turning vnettest bridge to UP

- Removing the DNS Prefix

- Rebuilding the whole stack

- Manually adding the Prefix, IP range, and the IP addresses to a specific VRF in Netbox

- We made sure the API calls to Netbox were correct and permissions were right for the token

- Checked firewall rules to the Netbox VM

- Added node firewall rules to accept DNS and DHCPfwd

- Updated the PVE cluster nodes

- Restarted dnsmasq for the zones

We are pretty new to using Netbox, and this is our first attempt at using SDN within Proxmox, so I'm hoping we are just missing a simple option somewhere. The prefix and IP addresses get created dynamically in Netbox, but we do need to manually add the DHCP range of addresses to get the VM to successfully pull an address. Sorry for the wall of text and pictures, but I wanted to be thorough.
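For anyone trying to reproduce the manual step, adding the DHCP range to Netbox can also be done through the REST API instead of the UI. The snippet below is a rough sketch, not what PVE itself does: it only builds a POST request for the `/api/ipam/ip-ranges/` endpoint with the range from the output above. The URL `https://netbox.example.com`, the token placeholder, and the function name `build_ip_range_request` are made up for illustration, and the `/24` mask is taken from the subnet in the SDN config.

```python
import json
import urllib.request


def build_ip_range_request(netbox_url, token, start, end, prefix_len):
    """Build (but do not send) a POST request that creates an IP range.

    netbox_url and token are placeholders; start/end are plain IPv4
    addresses, and prefix_len is appended because Netbox expects the
    range boundaries with a mask.
    """
    payload = {
        "start_address": f"{start}/{prefix_len}",
        "end_address": f"{end}/{prefix_len}",
        "status": "active",
    }
    return urllib.request.Request(
        url=f"{netbox_url.rstrip('/')}/api/ipam/ip-ranges/",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Token {token}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
    )


if __name__ == "__main__":
    req = build_ip_range_request(
        "https://netbox.example.com",  # hypothetical Netbox URL
        "REPLACE_WITH_API_TOKEN",      # hypothetical token
        "100.64.10.2",
        "100.64.10.254",
        24,
    )
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To actually create the range: urllib.request.urlopen(req)
```

The request is only printed here so it can be inspected before anything is written to Netbox. If the range should live in a specific VRF, the payload would need a `vrf` field as well, which I have left out.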

Thanks!
