Hi,

I have two VMs which have cloudinit drives, which are failing to replicate over that drive, when a replication task is created, other VMs with cloudinit drives are replicating over fine but these two aren't. Granted those other VMs replication tasks were created months n months ago. Trying to replicate pve-1 to pve-2

VM 102 for example has this replication log

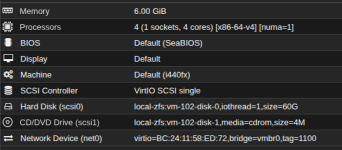

As you can see it's not replicating `vm-102-cloudinit` disk, and this is my vm configuration. Comparing it to a VM that did replicate it, the scsi config lines are the same, only things that are different really, are UUIDs/IPs/Name the usual suspects.

The disk does exist and the VM is booting just fine

I've tried searching the problem, but the only thing I did find was an old topic here which sadly no one replied too. Is there something I can check to see why it thinks it doesn't need to replicate the cloudinit disk?

pve-1: pve-manager/8.4.12/c2ea8261d32a5020 (running kernel: 6.8.12-14-pve)

pve-2: pve-manager/8.4.12/c2ea8261d32a5020 (running kernel: 6.8.12-14-pve)

Is there a service I need to restart to make it re-evaluate the VM configurations assuming I need to do something like that, or do I need to plan a reboot of the host?

I have two VMs with cloudinit drives, and that drive is not being replicated when I create a replication task. Other VMs with cloudinit drives replicate fine, but these two don't. Granted, the replication tasks for those other VMs were created many months ago. I'm trying to replicate from pve-1 to pve-2.

VM 102, for example, has this replication log:

Code:

2025-09-22 09:30:00 102-0: start replication job

2025-09-22 09:30:00 102-0: guest => VM 102, running => 52759

2025-09-22 09:30:00 102-0: volumes => local-zfs:vm-102-disk-0

2025-09-22 09:30:01 102-0: freeze guest filesystem

2025-09-22 09:30:01 102-0: create snapshot '__replicate_102-0_1758529800__' on local-zfs:vm-102-disk-0

2025-09-22 09:30:01 102-0: thaw guest filesystem

2025-09-22 09:30:02 102-0: using insecure transmission, rate limit: none

2025-09-22 09:30:02 102-0: incremental sync 'local-zfs:vm-102-disk-0' (__replicate_102-0_1758529560__ => __replicate_102-0_1758529800__)

2025-09-22 09:30:02 102-0: send from @__replicate_102-0_1758529560__ to rpool/data/vm-102-disk-0@__replicate_102-0_1758529800__ estimated size is 17.9M

2025-09-22 09:30:02 102-0: total estimated size is 17.9M

2025-09-22 09:30:02 102-0: TIME SENT SNAPSHOT rpool/data/vm-102-disk-0@__replicate_102-0_1758529800__

2025-09-22 09:30:03 102-0: [pve-2] successfully imported 'local-zfs:vm-102-disk-0'

2025-09-22 09:30:03 102-0: delete previous replication snapshot '__replicate_102-0_1758529560__' on local-zfs:vm-102-disk-0

2025-09-22 09:30:03 102-0: (remote_finalize_local_job) delete stale replication snapshot '__replicate_102-0_1758529560__' on local-zfs:vm-102-disk-0

2025-09-22 09:30:03 102-0: end replication job

As you can see, it's not replicating the `vm-102-cloudinit` disk. This is my VM configuration. Compared with a VM whose cloudinit disk did replicate, the scsi config lines are the same; the only real differences are the UUIDs, IPs and name, the usual suspects.

Code:

agent: 1

boot: order=scsi0;net0

ciuser: fingerlessgloves

cores: 4

cpu: x86-64-v4

hotplug: disk,network,usb,memory

ipconfig0: ip=78.x.x.x/28,gw=78.x.x.x

memory: 6144

meta: creation-qemu=9.0.0,ctime=1721506383

name: mailcow

nameserver: 172.30.0.1

net0: virtio=BC:24:11:58:ED:72,bridge=vmbr0,tag=1100

numa: 1

onboot: 1

ostype: l26

scsi0: local-zfs:vm-102-disk-0,iothread=1,size=60G

scsi1: local-zfs:vm-102-cloudinit,media=cdrom,size=4M

scsihw: virtio-scsi-single

searchdomain: local

smbios1: uuid=0b5a9762-1ddf-4a1c-a1c5-5fca160eea87

sockets: 1

sshkeys: ssh-ed25519%20AAAAC3NzaC1lZDI1NTE5AAAAIMPkBlg2JGmmNMy77VBiYzRnCIJsq1GrWBYoTt5we5cw%20jonny%40sshkey

startup: order=1,up=10

tablet: 0

vmgenid: c0a65ff9-5ba4-490c-bec3-a8cdfdd6d193

The disk does exist and the VM boots just fine:

Code:

root@pve-1# zfs list | grep 102

rpool/data/vm-102-cloudinit 72K 2.64T 72K -

rpool/data/vm-102-disk-0 43.3G 2.64T 43.3G -

Code:

root@pve-2# zfs list | grep 102

rpool/data/vm-102-disk-0 43.3G 642G 43.3G -

I've tried searching for the problem, but the only thing I found was an old topic here, which sadly no one replied to. Is there something I can check to see why it thinks it doesn't need to replicate the cloudinit disk?

pve-1: pve-manager/8.4.12/c2ea8261d32a5020 (running kernel: 6.8.12-14-pve)

pve-2: pve-manager/8.4.12/c2ea8261d32a5020 (running kernel: 6.8.12-14-pve)

Is there a service I need to restart to make it re-evaluate the VM configurations (assuming I need to do something like that), or do I need to plan a reboot of the host?
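
In case it helps anyone repeat the config comparison mentioned above, here is a minimal Python sketch of it. It is only an illustration: the function names and the set of ignored keys are my own assumptions, not anything from Proxmox, and it works on saved `key: value` config text rather than talking to the cluster.

```python
# Hypothetical helpers for comparing two saved VM config dumps.
# IGNORED_KEYS is an assumption: keys expected to differ between any two VMs.
IGNORED_KEYS = {"name", "smbios1", "vmgenid", "ipconfig0", "net0", "sshkeys", "meta"}


def parse_config(text):
    """Parse 'key: value' lines of a VM config dump into a dict."""
    config = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first colon only; drive values contain colons too.
        key, sep, value = line.partition(":")
        if sep:
            config[key.strip()] = value.strip()
    return config


def drive_options(value):
    """Split a drive value into (volume, options).

    'local-zfs:vm-102-cloudinit,media=cdrom,size=4M'
    becomes ('local-zfs:vm-102-cloudinit', {'media': 'cdrom', 'size': '4M'}).
    """
    volume, *opts = value.split(",")
    return volume, dict(opt.split("=", 1) for opt in opts if "=" in opt)


def diff_configs(a, b, ignored=IGNORED_KEYS):
    """Return {key: (value_a, value_b)} for keys that differ, skipping ignored keys."""
    keys = (set(a) | set(b)) - set(ignored)
    return {k: (a.get(k), b.get(k)) for k in sorted(keys) if a.get(k) != b.get(k)}
```

Usage would be to save the output of `qm config 102` and of `qm config` for a VM that replicates correctly, run both through `parse_config`, and look at what `diff_configs` reports. Since the volume names always differ by VMID, comparing the option dicts from `drive_options` for the `scsi1` lines is more useful than comparing the raw strings.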