> Hi,
>
> I updated MSSQL to driver 271 and the 129 error is back; it is less frequent, but it is back. I am going back to 208 tonight.

I just updated my MSSQL test servers to v271 from v208 about 3 hours ago and I've gotten zero events. I'll keep an eye on it and report if anything pops up.

View attachment 86006

Redhat VirtIO developers would like to coordinate with Proxmox devs re: "[vioscsi] Reset to device ... system unresponsive"

- Thread starter ylluminate

- Tags

- virtio virtio disk virtio scsi

> I just updated my MSSQL test servers to v271 from v208 about 3 hours ago and I've gotten zero events. I'll keep an eye on it and report if anything pops up.

I updated over the weekend, and tonight there was one error that stopped the nightly task. This task had been triggering this error pretty regularly. I was hoping it would be resolved after it worked for three days without a hitch.

> Hi,
>
> I updated MSSQL to driver 271 and the 129 error is back, although it is less frequent but it is back. I am going back to 208 tonight.

Well, I also had to roll back from v266 / v271 to v208 to be stable again.

I have a workload that runs a SCSI stress test with different KVM tunings:

It uses OODefrag to run the "complete/name" hard drive optimisation 4 times consecutively (htop = 100% disk I/O).

Code:

    ----------------------------------------------------------------------------------------------------------------
    OK    = STABLE
    CRASH = kvm: ../block/block-backend.c:1780: blk_drain: Assertion `qemu_in_main_thread()' failed.
    ----------------------------------------------------------------------------------------------------------------
    pve-manager/7.4-19/f98bf8d4 (running kernel: 5.15.158-2-pve)
    QEMU emulator version 7.2.10
    scsihw: virtio-scsi-single
    ----------------------------------------------------------------------------------------------------------------
    v271 + cache=unsafe,discard=on,iothread=1     : CRASH (FIO_R = 3794MBs_58,0k_0,31ms / FIO_W = 3807MBs_58,0k_0,31ms)
    v271 + cache=unsafe,discard=on,iothread=0     : OK    (FIO_R = 3748MBs_70,2k_0,26ms / FIO_W = 3762MBs_70,1k_0,27ms)
    v266 + cache=unsafe,discard=on,iothread=1     : CRASH (FIO_R = 3817MBs_56,2k_0,32ms / FIO_W = 3830MBs_56,2k_0,32ms)
    v266 + cache=unsafe,discard=on,iothread=0     : OK    (FIO_R = 3804MBs_71,9k_0,26ms / FIO_W = 3818MBs_71,8k_0,26ms)
    v208 + cache=unsafe,discard=on,iothread=1     : OK    (FIO_R = 3922MBs_55,6k_0,32ms / FIO_W = 3937MBs_55,6k_0,32ms)
    v208 + cache=unsafe,discard=on,iothread=0     : OK    (FIO_R = 3823MBs_68,6k_0,27ms / FIO_W = 3835MBs_68,5k_0,27ms) **BEST**
    v208 + cache=unsafe,discard=ignore,iothread=1 : OK    (FIO_R = 3856MBs_55,7k_0,32ms / FIO_W = 3867MBs_55,6k_0,32ms)
    v208 + cache=unsafe,discard=ignore,iothread=0 : OK    (FIO_R = 3806MBs_68,0k_0,27ms / FIO_W = 3819MBs_68,0k_0,27ms)
    v208 + discard=on,iothread=1                  : OK    (FIO_R = 234MBs_30,9k_0,95ms / FIO_W = 245MBs_30,8k_1,10ms)
    v208 + discard=on,iothread=0                  : OK    (FIO_R = 239MBs_29,9k_0,85ms / FIO_W = 252MBs_29,9k_1,14ms)
    ----------------------------------------------------------------------------------------------------------------
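To compare the runs above more easily, the result lines can be parsed into records. This is only a minimal sketch: it assumes each FIO field encodes throughput, IOPS and latency with a decimal comma (my reading of the notation, not confirmed by the poster), and `parse_fio`, `parse_result` and the dictionary keys are hypothetical names.

```python
import re

# Sample lines copied from the results above (not the full table).
RESULTS = """\
v271 + cache=unsafe,discard=on,iothread=1 : CRASH (FIO_R = 3794MBs_58,0k_0,31ms / FIO_W = 3807MBs_58,0k_0,31ms)
v271 + cache=unsafe,discard=on,iothread=0 : OK (FIO_R = 3748MBs_70,2k_0,26ms / FIO_W = 3762MBs_70,1k_0,27ms)
v208 + cache=unsafe,discard=on,iothread=1 : OK (FIO_R = 3922MBs_55,6k_0,32ms / FIO_W = 3937MBs_55,6k_0,32ms)
v208 + discard=on,iothread=1 : OK (FIO_R = 234MBs_30,9k_0,95ms / FIO_W = 245MBs_30,8k_1,10ms)
"""

LINE_RE = re.compile(
    r"^(?P<driver>v\d+)\s*\+\s*(?P<opts>\S+)\s*:\s*(?P<status>OK|CRASH)\s*"
    r"\(FIO_R = (?P<read>\S+) / FIO_W = (?P<write>\S+)\)"
)
# Assumed layout: <MB/s>MBs_<kIOPS, decimal comma>k_<latency, decimal comma>ms
FIO_RE = re.compile(r"^(\d+)MBs_(\d+),(\d+)k_(\d+),(\d+)ms$")


def parse_fio(field):
    """Turn '3794MBs_58,0k_0,31ms' into numbers (assumed meaning of each part)."""
    m = FIO_RE.match(field)
    if m is None:
        raise ValueError(f"unexpected FIO field: {field!r}")
    mbs, k_int, k_frac, l_int, l_frac = m.groups()
    return {
        "mb_s": int(mbs),
        "kiops": float(f"{k_int}.{k_frac}"),
        "lat_ms": float(f"{l_int}.{l_frac}"),
    }


def parse_result(line):
    """Parse one result line; return None for separators and header lines."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    return {
        "driver": m["driver"],
        "opts": dict(kv.split("=", 1) for kv in m["opts"].split(",")),
        "status": m["status"],
        "read": parse_fio(m["read"]),
        "write": parse_fio(m["write"]),
    }


records = [r for r in map(parse_result, RESULTS.splitlines()) if r]
crashed = [(r["driver"], r["opts"]) for r in records if r["status"] == "CRASH"]
for driver, opts in crashed:
    print(driver, opts)
```

Fed the full table, the same filter makes it easy to check the pattern reported here: the CRASH rows are the v266 and v271 runs with iothread=1.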

> I also had to rollback from v266 / v271 to v208 to be stable again.

Do you use local storage?

What about PVE version 8.4, shipped with QEMU 9.0 and kernel 6.8?

> Do you use Local storage ?
>
> What about PVE version 8.4 shipped with QEMU 9.0 and Kernel 6.8 ?

Those tests were done on a very simple workstation: Ryzen 7 5700X + Crucial MX500 SATA SSD + local thin LVM.

When I have time, I will also test PVE 8.4 and QEMU 9.2.

> SSD Crucial MX500 sata

No driver can improve this kind of consumer SSD: once writes go beyond its internal DRAM cache, it writes slowly.

Moreover, cache=unsafe increases the slowness because of the double, if not triple, caching involved.

> No drivers can improve this consumer ssd where outside of their internal dram cache, they write slow.
>
> Moreover cache=unsafe increase the slowness because of double if not triple cache involved.

I totally agree, this kind of hardware is not for production.

What is interesting here is that the v208 Windows driver doesn't kill the VM, unlike v266 and v271 (blk_drain in block-backend.c with iothread=1).

Please test with PVE 8 and a recent QEMU; I remember that some iothread crashes have been fixed since then (and PVE 7 is EOL anyway).

Hey All,

Here are some of my test results, which I hope will help.

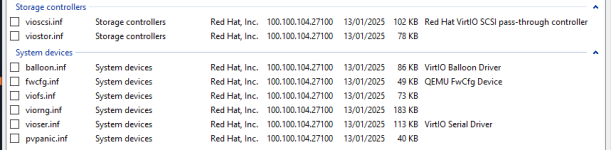

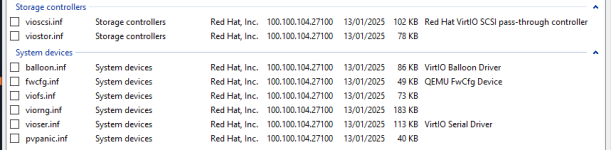

I have upgraded the drivers to v271 on one of my (non-critical) production servers for testing. Here's the verification of said drivers:

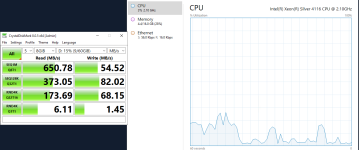

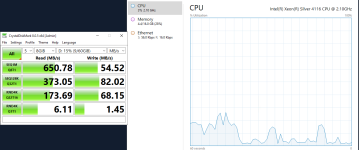

I ran the same CrystalDiskMark benchmark that was almost guaranteed to force a vioscsi crash last year, and this time the test completed successfully without ever locking up the VM or causing a vioscsi crash.

Here are the PVE version details:

ceph: 19.2.1-pve3

pve-qemu-kvm: 9.2.0-5

qemu-server: 8.3.12

proxmox-ve: 8.4.0 (running kernel: 6.8.12-10-pve)

And the configuration of the Virtual Machine:

Code:

    cat /etc/pve/qemu-server/106.conf
    agent: 1
    bios: ovmf
    boot: order=scsi0;net0;scsi2
    cores: 4
    cpu: host
    efidisk0: cluster-storage:vm-106-disk-0,efitype=4m,pre-enrolled-keys=1,size=528K
    machine: pc-q35-8.1
    memory: 16384
    meta: creation-qemu=8.1.5,ctime=1710814878
    name: <name>
    net0: virtio=BC:24:11:8A:D4:F1,bridge=vmbr1,firewall=1
    numa: 1
    onboot: 1
    ostype: win10
    scsi0: cluster-storage:vm-106-disk-1,discard=on,iothread=1,size=70G
    scsi1: cluster-storage:vm-106-disk-2,discard=on,iothread=1,size=60G
    scsihw: virtio-scsi-single

Happy to do more testing if required, but I essentially don't have that vioscsi error on either this machine or my test MSSQL servers.
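When comparing VM setups across the reports in this thread, it can help to pull the per-disk options out of a config like the one above. The following is a minimal sketch, assuming the `key: value` layout shown in the post; `parse_config`, `parse_disk` and `disk_report` are hypothetical helpers (not part of any Proxmox tooling), and the sketch reads a pasted string rather than the real file.

```python
# Three lines copied from the posted 106.conf; the rest is omitted for brevity.
CONFIG = """\
scsi0: cluster-storage:vm-106-disk-1,discard=on,iothread=1,size=70G
scsi1: cluster-storage:vm-106-disk-2,discard=on,iothread=1,size=60G
scsihw: virtio-scsi-single
"""


def parse_config(text):
    """Split 'key: value' lines into a dict, splitting on the first ': ' only."""
    conf = {}
    for line in text.splitlines():
        if ": " in line:
            key, value = line.split(": ", 1)
            conf[key.strip()] = value.strip()
    return conf


def parse_disk(value):
    """Return (volume, options) for a value like 'storage:vol,discard=on'."""
    volume, *pairs = value.split(",")
    opts = dict(p.split("=", 1) for p in pairs)
    return volume, opts


def disk_report(conf):
    """Return one summary dict per scsiN disk (scsihw is skipped)."""
    rows = []
    for key, value in conf.items():
        if key.startswith("scsi") and key[4:].isdigit():
            volume, opts = parse_disk(value)
            rows.append({
                "disk": key,
                "volume": volume,
                "iothread": opts.get("iothread", "0"),
                "aio": opts.get("aio", "(not set)"),
                "cache": opts.get("cache", "(not set)"),
            })
    return rows


conf = parse_config(CONFIG)
print("controller:", conf.get("scsihw"))
for row in disk_report(conf):
    print(row)
```

For this VM the report shows virtio-scsi-single with iothread=1 on both disks and no explicit `aio` or `cache` option, which are the settings other posters in this thread vary in their tests.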

I'm having a really hard time with this issue across the board on my PVE clusters. All of them have Ceph as the main filestore for all the VMs, and all are on a dedicated 40G storage network. When I run nightly backups to the cephfs filesystem, my Windows machines completely freeze for up to 5-10 minutes at a time, throwing the vioscsi device reset error that was mentioned earlier in the thread.

I'm running the latest and greatest VirtIO drivers (v271). The Windows machines seem to be most affected; the Linux machines not so much, although they throw a bunch of hung_task error messages on the console.

Any suggestions would be greatly appreciated!

> When I run nightly backups to the cephfs filesystem

Are you using Proxmox Backup Server?

> Are you using Proxmox Backup Server ?

Yes, we are. I had set up nightly vzdump backups for critical systems so I can take the resulting .lzo file or whatnot and back it up to the cloud. These systems are being backed up to PBS as well, so I may have to disable the local backups for now and work out a good method to get our critical system backups from PBS into the cloud.

PBS has its own issues depending on the configuration, of course: PBS over a WAN or a slow link can time out the source VM, for example. PBS fleecing can help nowadays.

But to dig into this, I would start by disabling PBS temporarily and keeping vzdump.

> I'm having a real hard time with this issue across the board on my PVE clusters. All of them have Ceph as the main filestore for all the VMs, and all are on 40G dedicated storage network. When I run nightly backups to the cephfs filesystem, my Windows machines completely freeze for up to 5-10 minutes at time throwing that vioscsi device reset error that was mentioned in the thread earlier.
>
> I'm running the latest and greatest VirtIO Drivers (v271), and the Windows machines seem to be most affected, the Linux machines, not so much although they throw a bunch of hung_task error messages on the console.
>
> Any suggestions would be greatly appreciated!

You really should use the fleecing option in your backup job's advanced options (you can use the same Ceph RBD storage as your main VM).

(By the way, I hope that your CephFS storage for backups is not in the same Ceph cluster as your production RBD storage, right?)

Thanks for the suggestion! I just read up about fleecing, and while I've been using PVE since 5.x, I did not realize they added this option. I enabled Fleecing to use the local storage of each node, so I'll see how this works on the run tonight.

Hi all, I'm the author of this ass-kicking post. After more than a year, I'm back in service, as bug hunting never ends.

> Hi all, this is a result of my deep analysis of this problem within the last few days.
>
> Just a quick recap for newcomers - if you're affected by sudden Windows VM hangs, reboots, file system errors and related I/O stuff, especially with messages like:
>
> Code:
>
>     PVE host:
>     QEMU[ ]: kvm: Desc next is 3
>     QEMU[ ]: kvm: virtio: zero sized buffers are not allowed
>
>     Windows guest:
>     Warning | vioscsi | Event ID 129 | Reset to device, \Device\RaidPort[X], was issued.
>     Warning | disk | Event ID 153 | The IO operation at logical block address [XXX] for Disk 1 (PDO name: \Device\000000XX) was retried.
>
> this thread and post is probably for you (under specific circumstances).
>
> I'm quite confident in my findings, as they are reproducible, (almost) deterministic and analyzed/captured in three different setups.
>
> First of all - there is (probably) nothing wrong with your storage (as some posts are trying to tell you, though in good faith - here or in GitHub threads). If storage stress tests pass with correct drivers, with VirtIO Block, or non-virtualized, then all the recommendations about modifying Windows storage timeouts seem unfounded and don't make any sense to me. All my tests have been done with local storage and all are affected.
>
> No iSCSI, no network storage, not even RDMA storage here. I'm absolutely sure regarding this.
>
> TLDR:
>
> - It's all about drivers.
>
> - VirtIO SCSI drivers (vioscsi) up to 0.1.208 are stable. I can say that 0.1.208 (virtio-win-0.1.208.iso) is super stable. No problems so far, with extensive testing. But it's sufficient to apply only the "vioscsi" driver, not all the other ISO drivers (i.e. only the SCSI driver needs to be downgraded).
>
> - Drivers since 0.1.215 (virtio-win-0.1.215.iso) are crippled. Howgh. Note - this is the first ISO version (from January 2022) where dedicated drivers for Windows 2022 were included (although it's only a separate folder and the driver is binary-identical to the Windows 2019 version).
>
> - The most dangerous config is VirtIO SCSI Single with aio=io_uring (i.e. default). I'm able to crash it almost "on-demand" on each of my three independent setups.
>
> If you're affected, these are the steps to become stable:
>
> - Update (i.e. downgrade) your "vioscsi" driver to 0.1.208. It's a definitive solution.
>
> - If you cannot downgrade the "vioscsi" driver (e.g. too many VMs, etc.), switch to VirtIO Block - at least for problematic "data" drives. You'll lose some performance (but max. 20% in general with SSDs and only under massive random load - many threads, high QL/QD), but don't worry - there is a performance boost ready for QEMU 9.0 (more at the end of this post). It's also a definitive solution.
>
> - Get rid of "io_uring" until the bug is fixed. Switch to "Native" and, if that doesn't work, also switch to the base VirtIO SCSI (not "Single"). But wait - this is just a partial mitigation, not a solution, though it may help a lot.
>
> Note: switching to VirtIO Block or to base VirtIO SCSI implies a storage change for the Windows guest. As a result, you'll probably need to bring the affected disks back online after the first boot.
>
> ... (shortened, see the original post)
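The io_uring mitigation from the quoted post amounts to adding `aio=native` to a disk's option string. Here is a minimal sketch of that rewrite, which only builds a `qm set` command line and does not run it. The helper names `with_aio` and `qm_set_command` are hypothetical, the VM ID and disk string are example values taken from the config posted earlier in this thread, and the resulting command should be checked against the `qm` documentation before use. Native AIO in QEMU generally requires a direct (non-host-cached) cache mode, so check the disk's cache setting first.

```python
def with_aio(disk_value, aio="native"):
    """Return the disk option string with aio set (added, or replaced in place)."""
    volume, *pairs = disk_value.split(",")
    opts = dict(p.split("=", 1) for p in pairs)
    opts["aio"] = aio
    return ",".join([volume] + [f"{k}={v}" for k, v in opts.items()])


def qm_set_command(vmid, disk, disk_value, aio="native"):
    """Build (but do not execute) a qm set command line for one disk."""
    return f"qm set {vmid} --{disk} {with_aio(disk_value, aio)}"


# Example values only: VM 106 / scsi0 as shown in the posted config.
print(qm_set_command(
    106, "scsi0",
    "cluster-storage:vm-106-disk-1,discard=on,iothread=1,size=70G",
))
```

Printing the command instead of running it keeps the change reviewable, which matters here because the post describes this step as a partial mitigation rather than a fix.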

I'm sorry that I've missed many of the questions and messages, but let's move forward, we have another urgent problem(s).

In production, I have 100+ installations of virtio 0.1.266 and a few of 0.1.271. So far so good, no more SCSI event log bugs.

But with the latest 0.1.285, it's another story. It's really crippled, especially with WS2025, and it's even more tricky.

TLDR: don't use 0.1.285 in production. Hidden (application) bugs are hard to find and cause brutal instability.

For WS2025, use 0.1.271 instead. I cannot say whether it's the same for other Windows versions, but it's better to expect the worst.

The broadly discussed "vioscsi Reset to device" event log bugs were extremely annoying, but relatively easy to find - at least they were present in the Windows System log. With 0.1.285 and WS2025, that's no longer true.

It's not one single bug, it's not just about storage and vioscsi. It affects networking, storage and probably some other areas.

1) Storage issues - I'm aware of SQL Server-related bugs, but I'm quite sure it's omnipresent. Hidden bugs cause read retries, like this:

- A read of the file '*.mdf' at offset 0x00003897472000 succeeded after failing 1 time(s) with error: incorrect checksum (expected: 0xad4c6778; actual: 0xad4c6778) - see that actual/expected are the same, i.e. a storage problem (read retry)

- A read of the file '*.mdf' at offset 0x00003897470000 succeeded after failing 1 time(s) with error: incorrect pageid (expected 1:29669944; actual 1:29669944) - see that actual/expected are the same, i.e. a storage problem (read retry)

For the last two weeks I had the feeling I'm the only one affected, but it's not true - see e.g. this @santiagobiali report: Torn page error

What's going on? Hidden middleware/driver bugs cause (especially under high pressure) some kind of race condition (my guess), but the OS is not aware of anything wrong, so only application-level mechanisms catch the issue. Here, SQL Server notices the read retries, i.e. an I/O request is not processed correctly on the first try, but it reports them as messages that look like DB corruption (although DBCC found nothing wrong).

After 2-3 days of such frequent SQL errors, SQL Server service suddenly hangs. Only SQL Server log (or Windows Application log) is useful for debugging.
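Since only the SQL Server log shows these retries, a small script can help spot them. The sketch below scans log text for the two message shapes quoted above and flags entries where the expected and actual values are identical, which is the pattern this post reads as a read retry rather than real corruption. `find_read_retries` is a hypothetical helper and the regular expression is based only on the two sample lines, so other message variants may not match.

```python
import re

# Pattern derived from the two messages quoted in this post (an assumption,
# not an exhaustive description of SQL Server's log format).
RETRY_RE = re.compile(
    r"A read of the file '(?P<file>[^']*)' at offset (?P<offset>0x[0-9a-fA-F]+) "
    r"succeeded after failing (?P<tries>\d+) time\(s\) with error: "
    r"incorrect (?P<kind>\w+) "
    r"\(expected:? (?P<expected>[^;]+); actual:? (?P<actual>[^)]+)\)"
)


def find_read_retries(log_text):
    """Return one dict per read-retry message found in log_text."""
    hits = []
    for m in RETRY_RE.finditer(log_text):
        hits.append({
            "file": m["file"],
            "offset": int(m["offset"], 16),
            "tries": int(m["tries"]),
            "kind": m["kind"],
            # True when the logged expected and actual values are identical.
            "same_values": m["expected"].strip() == m["actual"].strip(),
        })
    return hits


# The two messages quoted above, used as sample input.
SAMPLE = """\
A read of the file '*.mdf' at offset 0x00003897472000 succeeded after failing 1 time(s) with error: incorrect checksum (expected: 0xad4c6778; actual: 0xad4c6778)
A read of the file '*.mdf' at offset 0x00003897470000 succeeded after failing 1 time(s) with error: incorrect pageid (expected 1:29669944; actual 1:29669944)
"""

for hit in find_read_retries(SAMPLE):
    label = "values match (retry)" if hit["same_values"] else "values differ"
    print(hex(hit["offset"]), hit["kind"], label)
```

Counting such hits per day would give a rough way to compare driver versions on the same workload, assuming the log text has been exported to a file first.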

2) Networking issues - like iSCSI and general connection drops, invalid SMB signatures, AD errors, etc.

See the mentioned W2025 virtio NIC -> connection drop outs

Other potentially related threads:

Proxmox VE 8.4 Extremely Poor Windows 11 VM Network Performance by @anon314159

Windows & Linux VDI VMs feels So laggy by @Hydra

Long story short: All I can say is that virtio nightmare is back, so check your systems, applications, workloads and be very cautious about 0.1.285.

I really believe in community and open-source development, but what's clear to me: Proxmox Server Solutions GmbH needs more subscription customers to establish dedicated virtio testing and debugging team, as we simply cannot rely on some upstream RedHat-driven development, without serious release notes, stability reports, and quick bug analysis and resolutions.

CC @fiona, @fweber, @t.lamprecht, @fabian, @aaron

I have some SQL VMs on different servers with 100+ small databases each. Every time I tried to update them, about 30% of them got corrupted and I had to restore from backup. The only fix that worked for me was to change Cache from "Default (No Cache)" to "Write through". The storage for the VMs is ZFS RAID1 with Samsung datacenter SSDs. Another thing with "Default (No Cache)": while I updated the databases, the VM was also sluggish and unresponsive for a few seconds.

This has been my anecdotal experience as well. I installed 0.1.285 on a windows/sql host and it caused a lot of suspect virtio-related messages in event viewer. Rolling it to the version before that (0.1.271) seems to have cleared it up. The older established bug-free versions (0.1.204 and I think .208) also still work well, though like the rest of us I’m sure, I worry a little about not getting the other unrelated bugfixes etc in the newer versions.

Thanks for continuing your efforts here!
