Yeah, light. Mine's been doing 10M constantly... ty for sharing again Louie!

> But still would appreciate some guidance on how to disable it globally? I can see a lot of entries for "atime"

For ZFS, note that you can set atime=off (the ZFS equivalent of 'noatime') at the top level when creating the pool, and every dataset will inherit it. Otherwise you set it per dataset.

zpool create -o ashift=12 -o autoreplace=off -o autoexpand=on -O atime=off -O compression=lz4 $zpoolname [topology] [devices]

For virtual filesystems you don't have to worry, but for ext4 / xfs and the like you would put it in the fstab options (defaults,noatime,rw) and then either remount the filesystem in place with ' mount -o remount,rw / ' or reboot.

For Windows guests - NOTE: run as Administrator, applies to ALL Windows disks:

BEGIN noatime.cmd

fsutil behavior set disablelastaccess 1

pause

Well, it's already running, so this is for future reference. Thoughts on adding it to GRUB as a global option?

GRUB_CMDLINE_LINUX_DEFAULT="quiet noatime"

I've never done it that way, and it wouldn't be reliable if something replaced grub...? YMMV, I would do it the way I described

@Kingneutron I apologize for my ignorance, but I'm not sure how. You are describing doing this while creating the zpool, but the raid pool where the OS sits is already created, so I cannot go that route (I think?!).

Some KB articles talk about modifying fstab and remounting those mount points, but I wouldn't know if this is a valid way, i.e.:

Code:

/dev/mapper/pve-root / ext4 defaults,noatime 0 1

Code:

mount -o remount,noatime /

EDIT: So fstab, it seems, is only for non-ZFS filesystems.
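The fstab change above amounts to appending noatime to the options field when it is not already there. A minimal sketch of that one step, assuming a POSIX shell; the helper name add_noatime is made up for illustration, and it only rewrites an options string rather than touching /etc/fstab itself:

```shell
#!/bin/sh
# Hypothetical helper (not part of any tool): append "noatime" to a
# comma-separated fstab options string unless it is already present.
add_noatime() {
    opts="$1"
    case ",$opts," in
        *,noatime,*) printf '%s\n' "$opts" ;;          # already set, leave as-is
        *)           printf '%s\n' "$opts,noatime" ;;  # add it at the end
    esac
}

add_noatime "defaults"          # prints: defaults,noatime
add_noatime "defaults,noatime"  # prints: defaults,noatime
```

Once the options column in /etc/fstab is updated, a remount or reboot applies it, and `findmnt -no OPTIONS /` should then list noatime for the root filesystem.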

I think I know now what you meant by the datasets (again, new to this).

zfs list will give us all the datasets, and from there we could disable atime like this?

Code:

sudo zfs set atime=off rpool/ROOT/pve-1

Would that be the correct approach, @Kingneutron?

> Would that be correct approach @Kingneutron ?

Yep

relatime still updates, just not immediately from what I know. I just do atime=off everywhere and haven't had any issues
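Because atime is an inherited ZFS property, it can help to list which datasets still have it on before and after the change. A rough sketch, assuming the pool is called rpool as in the post above; the function name is invented here, and it only filters the tab-separated lines that `zfs get -H` prints:

```shell
#!/bin/sh
# Hypothetical filter: read "name<TAB>value" lines (the shape of
# `zfs get -H -o name,value atime ...`) and print datasets with atime on.
datasets_with_atime_on() {
    awk -F '\t' '$2 == "on" { print $1 }'
}

# Intended use on a ZFS host (pool name rpool is an assumption):
#   zfs get -H -r -t filesystem -o name,value atime rpool | datasets_with_atime_on
#   zfs set atime=off rpool    # children inherit unless they set atime locally
```

Setting the property on the pool's top-level dataset covers everything below it that has not been overridden locally, so on an existing pool this gets the same result as passing -O atime=off at creation time.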

Thank you! I'll be taking a snapshot of that raid's performance today, before and after the migration/improvements. I'll share it here to provide artifacts.

You don't really have many meaningful choices, price/value ratio-wise; perhaps the Kingston DC600M for a SATA SSD. For M.2 NVMe, you are limited to Micron's 7300 or 7450, especially if you need the 2280 size.

I just learned that Kingston now has the DC2000B, a 2280 M.2 Gen4 NVMe with PLP:

https://www.kingston.com/en/company/press/article/73941

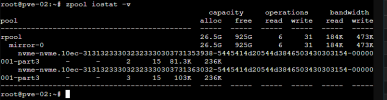

Migrated the vFW to NVMe, so there is nothing left on raid1. Can someone explain to me why iostat -v still shows writes @ 10M, but when I run it every 5 seconds it shows only small amounts?

Either way, creating a timestamp.

- 8/29 - Disk at 35% usage (77 days uptime) with vpfsense on raid1 + cluster services ON, with 0.5% degradation daily

- 8/30 - TBD

The chassis I'm using could only do SSD for the OS, since I needed the NVMe slots for data. The unit runs 15 NVMes; definitely going with enterprise next time. Hopefully I can do a hot swap and be done down the line. That will be a nice new challenge.

> Migrated vFW to nvme, there is nothing left on raid1, can someone explain to me why iostat -v still shows write @ 10M?

Read the man page for zpool-iostat and check out the -y flag, and leave zpool iostat -v 5 running ;-)

This is what I use:

BEGIN ziostat.sh

#!/bin/bash

# Optional pool name(s) as arguments; -y skips the since-boot summary, -T d adds timestamps.
zpool iostat -y -T d -v "$@" 5
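The one-shot number looks stuck because, without -y, the first report from zpool iostat is an average since boot rather than the current rate. A back-of-the-envelope sketch with illustrative numbers (76 busy days at 10 MB/s followed by 1 idle day; these figures are assumptions chosen to match the 77 days of uptime mentioned earlier, not measurements from this thread):

```shell
#!/bin/sh
# Hypothetical helper: average write rate since boot, given
#   $1 = busy days, $2 = write rate on busy days (MB/s), $3 = idle days.
since_boot_avg() {
    awk -v busy="$1" -v rate="$2" -v idle="$3" \
        'BEGIN { printf "%.2f\n", (busy * rate) / (busy + idle) }'
}

since_boot_avg 76 10 1   # prints: 9.87
```

So a full idle day barely moves the since-boot figure, while the 5-second interval samples show what the pool is doing right now.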

This definitely looks better; I wish I had taken that capture before the move.

Hi, quick update.

Moving vpfsense did the trick. Degradation is no longer there. Drives are still at 35%. I also removed cluster services, but I think the vFW was the root cause. Thank you everyone for chipping in!

Perhaps pfSense deployments should not use the default ZFS, or should get a separate SSD of their own.

> I use consumer NVMe SSDs in my Proxmox machines, without a problem. I do disable the corosync, pve-ha-crm, and pve-ha-lrm services to minimize drive writes (no clusters here). These drives are just about a year old and have zero % wear out, so I would say something is not right with your set up. I also really don't store any data on my Proxmox nodes. All application/persistence data, docker volumes and VM/CT backups, etc. reside on my Synology NAS. This particular machine has the OS and the VMs all on the same mirrored ZFS drives.

Are you sure that the relevant properties of your drives are fully supported by smartmon? 0% after 1 year seems too good.
