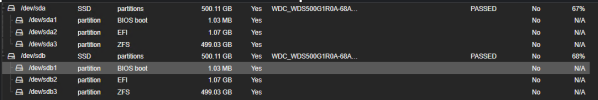

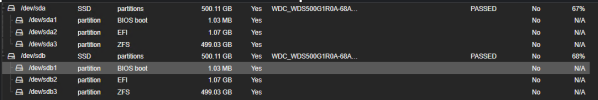

This is our first deployment using RAID1 on WD Red SSDs (not the best tier, but I never expected them to wear out this quickly).

The server has been up for 75 days and the wearout is already at 30% (about 0.4% per day).
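A back-of-the-envelope projection from those two numbers, as a sketch only: it assumes the drives were new when the server went into service and that wear keeps growing linearly. `wear_projection` is a hypothetical helper name, not an existing tool.

```shell
#!/bin/sh
# Sketch: project SSD wear from the wear indicator.
# Assumptions: constant (linear) wear rate, drives started at 0% wear
# when the server went into service. wear_projection is hypothetical.
wear_projection() {
    days=$1      # days in service
    wear_pct=$2  # wear indicator, percent of rated endurance used
    awk -v d="$days" -v w="$wear_pct" 'BEGIN {
        rate = w / d                  # percent used per day
        remaining = (100 - w) / rate  # days until the indicator reads 100%
        printf "rate_per_day=%.2f remaining_days=%.0f\n", rate, remaining
    }'
}

wear_projection 75 30
```

With 75 days and 30%, this prints `rate_per_day=0.40 remaining_days=175`, i.e. a little under six more months at the current rate.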

The drives were intended for the OS only, but they also host 11 virtual security appliances (pfSense). These could be the cause of the high wear, but I haven't tested that theory.

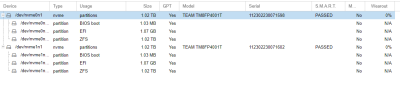

iostat for the raid:

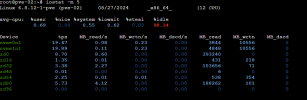

And they seem heavy read/write based on smart:

241 Host_Writes_GiB 0x0030 253 253 --- Old_age Offline - 32044

242 Host_Reads_GiB 0x0030 253 253 --- Old_age Offline - 1091

I would appreciate some opinions on best approach here to perhaps minimize the wearout - something I may not be aware with raid1 we could potentially turn off?

Options and my ask:

1)migrate the vFWs to other pools so they don't use the raid - and monitor if the % is slowing down.

2)migrate to enterprise grade ssds - perhaps hot swap. Would love options on best SSDs for this based on the use case and read/write its doing now

3)any options that could be looked at with raid1 to perhaps improve hw longevity?

4)any other suggestions perhaps?!

Thank You in advance for replies!

Server been up for 75 days with wear out at 30% already (almost .5 % per day).

Purpose of those drives was primary OS (only) but they also hold couple (11) virtual security appliances (pfsense) (this could potentially be the reason of the high wear - but haven't tested that theory).

`zpool iostat -v` for the RAID1 pool:

```
zpool iostat -v
                                                    capacity     operations     bandwidth
pool                                               alloc   free   read  write   read  write
-------------------------------------------------  -----  -----  -----  -----  -----  -----
rpool                                               171G   293G     24    214   351K  10.1M
  mirror-0                                          171G   293G     24    214   351K  10.1M
    ata-WDC_WDS500G1R0A-68A4W0_24070L800900-part3      -      -     12    107   174K  5.03M
    ata-WDC_WDS500G1R0A-68A4W0_24070L800864-part3      -      -     12    107   176K  5.03M
-------------------------------------------------  -----  -----  -----  -----  -----  -----
```

SMART shows the load is overwhelmingly writes (32,044 GiB written vs. 1,091 GiB read):

```
241 Host_Writes_GiB 0x0030 253 253 --- Old_age Offline - 32044
242 Host_Reads_GiB  0x0030 253 253 --- Old_age Offline - 1091
```
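The SMART counter and the iostat bandwidth can be cross-checked against each other. A minimal sketch, assuming the drives have been in service for the same 75 days as the server and that `zpool iostat`'s `M` suffix is 1024-based (MiB). Note that `zpool iostat` without an interval reports statistics since boot, so the 5.03M figure is a long-run average. The helper names are hypothetical.

```shell
#!/bin/sh
# Sketch: turn the raw counters above into average GiB written per day.
# Helper names are hypothetical.

# Raw value of SMART attribute 241 (Host_Writes_GiB) from `smartctl -A` output on stdin.
host_writes_gib() {
    awk '$1 == 241 { print $NF }'
}

# Average GiB/day given a GiB total and the number of days it accumulated over.
gib_per_day() {
    awk -v gib="$1" -v d="$2" 'BEGIN { printf "%.1f\n", gib / d }'
}

# GiB/day implied by a sustained write rate in MiB/s (assumes zpool's "M" = MiB).
mibs_to_gib_per_day() {
    awk -v r="$1" 'BEGIN { printf "%.1f\n", r * 86400 / 1024 }'
}

smart_line='241 Host_Writes_GiB 0x0030 253 253 --- Old_age Offline - 32044'
total=$(printf '%s\n' "$smart_line" | host_writes_gib)
echo "SMART average:   $(gib_per_day "$total" 75) GiB/day"
echo "iostat per disk: $(mibs_to_gib_per_day 5.03) GiB/day"
```

The two figures agree (427.3 vs. 424.4 GiB/day), so each mirror member is taking over 400 GiB of writes per day. On a live system the same parser could be fed from `smartctl -A /dev/sdX` (placeholder device path) and logged periodically, which would show whether moving the pfSense VMs off `rpool` changes the rate.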

I would appreciate opinions on the best approach to reduce the wear. Is there something in the RAID1 setup that I may not be aware of and could turn off?

Options I'm considering, and my questions:

1) Migrate the vFWs to other pools so they no longer use the mirror, then monitor whether the wear rate slows down.

2) Migrate to enterprise-grade SSDs, perhaps via hot swap. I would love recommendations for the best SSDs for this use case, given the read/write load it is generating now.

3) Are there any RAID1 settings that could be changed to improve hardware longevity?

4) Any other suggestions?
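For option 2, enterprise SSD endurance is usually quoted as DWPD (drive writes per day) or TBW (terabytes written). A sketch of the conversion from the observed rate, assuming the ~427 GiB/day SMART average holds, 500 GB (decimal) drives, and a hypothetical 5-year service life. It ignores write amplification inside the drive, so real NAND wear will be higher than this suggests. `endurance_needed` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: translate an observed host write rate into vendor-style endurance figures.
# Assumptions (not from any datasheet): decimal GB/TB as used in SSD specs,
# 365-day years, no allowance for write amplification. endurance_needed is hypothetical.
endurance_needed() {
    gib_day=$1      # average host writes per day, GiB
    capacity_gb=$2  # drive capacity in decimal GB
    years=$3        # intended service life
    awk -v g="$gib_day" -v c="$capacity_gb" -v y="$years" 'BEGIN {
        gb_day = g * 1.073741824       # GiB -> decimal GB
        dwpd = gb_day / c              # full drive writes per day
        tbw = gb_day * 365 * y / 1000  # total TB written over the period
        printf "dwpd=%.2f tbw_tb=%.1f\n", dwpd, tbw
    }'
}

# ~427.25 GiB/day (32044 GiB / 75 days), 500 GB drives, 5-year target
endurance_needed 427.25 500 5
```

This prints `dwpd=0.92 tbw_tb=837.2`, close to one full drive write per day. The same daily volume on a larger drive is a proportionally smaller DWPD figure (0.46 on a 1000 GB drive), which is worth factoring in when comparing models.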

Thank you in advance for your replies!
