Hi everyone,

I would like some help with my Ceph topology.

I have the following environment:

- 5x servers (PVE01, PVE02, PVE03, PVE04, PVE05)

- PVE01, PVE02, and PVE03 in one datacenter; PVE04 and PVE05 in another datacenter.

- 6x disks in each server (3x HDD and 3x SSD)

- All disks are the same capacity/model.

I would like to build a Ceph storage topology that can survive the loss of up to 2 servers.

First question: is it possible for my storage to keep working with only 2 nodes left?
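Part of the answer depends on the monitors rather than the OSDs: Ceph monitors only form a quorum when a strict majority of them are up. The sketch below assumes one monitor per PVE node (an assumption, not stated above) and uses hypothetical datacenter names `DC-A` and `DC-B`; it checks what happens when either datacenter goes down.

```python
def has_quorum(total_mons: int, surviving_mons: int) -> bool:
    # Monitors need a strict majority of the monmap to form a quorum.
    return surviving_mons > total_mons // 2


# Hypothetical layout: one monitor on each PVE node; DC names are made up.
DATACENTERS = {
    "DC-A": ["PVE01", "PVE02", "PVE03"],
    "DC-B": ["PVE04", "PVE05"],
}
ALL_NODES = [node for nodes in DATACENTERS.values() for node in nodes]

if __name__ == "__main__":
    total = len(ALL_NODES)
    # Losing any 2 servers leaves 3 of 5 monitors, which is still a majority.
    print(f"lose any 2 nodes: quorum={has_quorum(total, total - 2)}")
    # Losing a whole datacenter.
    for dc, nodes in DATACENTERS.items():
        surviving = total - len(nodes)
        print(f"lose {dc}: {surviving} MONs left, quorum={has_quorum(total, surviving)}")
```

Under this assumption, losing any 2 servers (or all of DC-B) keeps quorum, but if DC-A goes down the 2 remaining nodes cannot form a quorum on their own.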

Second question: should I change anything in my CRUSH map?

So far, I have only created a replicated rule to separate the HDD disks from the SSD disks.

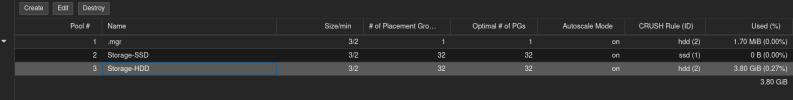

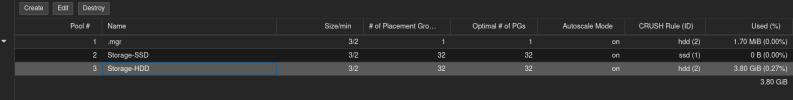

I will then create two pools: Storage-SSD and Storage-HDD.

What size/min_size do I need to set to be able to lose up to 2 servers?
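The size/min_size arithmetic can be sketched as below. This assumes the CRUSH rule uses `host` as the failure domain, so every replica lands on a different server and, in the worst case, each lost server held one copy of the same placement group. `pool_state` is a hypothetical helper for illustration, not a Ceph API.

```python
def pool_state(size: int, min_size: int, hosts_lost: int) -> str:
    # Worst case with failure domain = host: each lost host held one replica.
    remaining = max(size - hosts_lost, 0)
    if remaining == 0:
        return "data lost"
    if remaining < min_size:
        # Too few replicas left: the PG stops serving I/O until recovery.
        return "inactive (I/O blocked)"
    return "active"


if __name__ == "__main__":
    # With 5 hosts, size can be at most 5 when each replica needs its own host.
    for size in range(3, 6):
        for min_size in range(2, size + 1):
            print(f"size={size} min_size={min_size}: {pool_state(size, min_size, 2)}")
```

Under these assumptions, size=3/min_size=2 blocks I/O after losing 2 hosts, while size=4/min_size=2 (or size=5 with min_size of 2 or 3) keeps the pool active. min_size=1 would also keep I/O running, but it is generally discouraged because writes are then accepted with only a single surviving copy.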
