Hello Team,

I'm new to Proxmox, so sorry for yet another interface bonding thread.

I have a Grandstream GWN7813P L2/L3 switch that supports LAG in both static and LACP modes.

I have a server with one HIC (Host Interface Card) with 4 x 1 Gbit/s ports. I need to bond these 4 interfaces with LACP to get a logical 4 Gbit/s link.

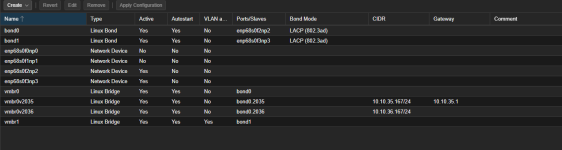

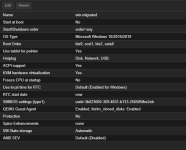

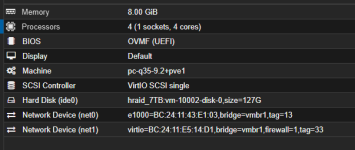

My config:

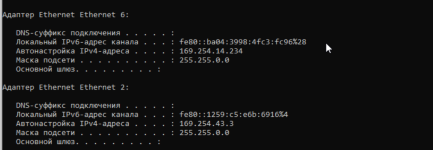

```
auto lo
iface lo inet loopback

auto ens4f0
iface ens4f0 inet manual

iface enx3a68dd6e1e87 inet manual

auto ens4f1
iface ens4f1 inet manual

auto ens4f2
iface ens4f2 inet manual

auto ens4f3
iface ens4f3 inet manual

auto bond0
iface bond0 inet manual
        bond-slaves ens4f0 ens4f1 ens4f2 ens4f3
        bond-miimon 100
        bond-mode 802.3ad
        bond-xmit-hash-policy layer2+3

iface bond0.136 inet manual

auto vmbr0
iface vmbr0 inet manual
        bridge-ports bond0
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094

auto vmbr0v136
iface vmbr0v136 inet static
        address 10.1.1.4/24
        gateway 10.1.1.1
        bridge_ports bond0.136
        bridge_stp off

source /etc/network/interfaces.d/*
```
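The bond0 stanza uses `bond-xmit-hash-policy layer2+3`. As a rough illustration of what that means for the "logical 4 Gbit/s" goal, here is a minimal Python sketch of the layer2+3 formula described in the Linux kernel bonding documentation. It is a simplified model (byte order is simplified), not the kernel's actual code, and the function names, MAC addresses and the 192.168.50.0/24 peers are hypothetical.

```python
import ipaddress


def mac_bytes(mac: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    return bytes(int(part, 16) for part in mac.split(":"))


def layer2_3_slave_index(src_mac, dst_mac, src_ip, dst_ip,
                         slave_count=4, ptype=0x0800):
    """Simplified model of the bonding driver's layer2+3 transmit hash.

    Follows the formula described in the kernel bonding documentation;
    byte order is simplified, so it is not a byte-exact copy of the kernel.
    """
    # Layer 2 part: last byte of each MAC XOR the EtherType (0x0800 = IPv4).
    h = mac_bytes(src_mac)[5] ^ mac_bytes(dst_mac)[5] ^ ptype
    # Layer 3 part: XOR in both IPv4 addresses as 32-bit integers.
    h ^= int(ipaddress.IPv4Address(src_ip)) ^ int(ipaddress.IPv4Address(dst_ip))
    # Fold high bits down so they influence the low bits.
    h ^= h >> 16
    h ^= h >> 8
    # Reduce modulo the number of bonded ports to pick one of them.
    return h % slave_count


if __name__ == "__main__":
    # Hypothetical addresses: the host (10.1.1.4) sending via the gateway
    # MAC to remote peers in an example 192.168.50.0/24 network.
    host_mac, gw_mac = "02:00:00:00:00:04", "02:00:00:00:00:01"

    # One pair of hosts always lands on the same port...
    one_pair = {layer2_3_slave_index(host_mac, gw_mac, "10.1.1.4", "192.168.50.10")
                for _ in range(1000)}
    print("ports used by one host pair:", sorted(one_pair))

    # ...while many different peers spread across the four ports.
    many = {layer2_3_slave_index(host_mac, gw_mac, "10.1.1.4", f"192.168.50.{n}")
            for n in range(1, 51)}
    print("ports used by 50 peers:", sorted(many))
```

Under this model a single pair of hosts stays on one port, so it is capped at 1 Gbit/s; the 4 Gbit/s is only reachable as an aggregate across many peers. Traffic from the switch to the server is distributed by the switch's own hash, which this sketch does not model.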

On the switch I have VLAN 136. I added the 4 ports connected to the HIC to VLAN 136 in trunk mode. In this state I can reach the Proxmox server at 10.1.1.4.

As soon as I create an LACP LAG on the Grandstream switch, Proxmox becomes unavailable: no ping to 10.1.1.4 and no access.

I tried the examples from the PVE manual and from forum threads, but everything breaks as soon as I create the LACP LAG on the switch.
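Because the host is unreachable over the network once the switch LAG exists, one way to see what the bond itself reports is to read `/proc/net/bonding/bond0` from the local console. In 802.3ad mode, a `Partner Mac Address` of `00:00:00:00:00:00` usually means no LACPDUs are arriving from the switch, and a port whose `Aggregator ID` differs from the active aggregator did not join the same LAG. The Python sketch below assumes the field names seen in typical kernel output (they can differ between kernel versions) and a bond named `bond0`; the function names are hypothetical.

```python
from pathlib import Path


def parse_bonding_status(text):
    """Pull LACP-related fields out of /proc/net/bonding/<bond> text."""
    status = {"mode": None, "active_aggregator": None,
              "partner_mac": None, "slaves": {}}
    slave = None        # name of the "Slave Interface" section we are in
    in_active = False   # inside the "Active Aggregator Info" section?
    for raw in text.splitlines():
        key, _, value = raw.strip().partition(":")
        value = value.strip()
        if key == "Bonding Mode":
            status["mode"] = value
        elif key == "Active Aggregator Info":
            in_active = True
        elif key == "Slave Interface":
            in_active = False
            slave = value
            status["slaves"][slave] = {}
        elif key == "Aggregator ID":
            if slave is not None:
                status["slaves"][slave]["aggregator"] = int(value)
            elif in_active:
                status["active_aggregator"] = int(value)
        elif key == "Partner Mac Address" and in_active:
            status["partner_mac"] = value
        elif key == "MII Status" and slave is not None:
            status["slaves"][slave]["mii"] = value
    return status


def lacp_problems(status) -> list:
    """Return human-readable hints about why LACP may not have negotiated."""
    problems = []
    if status["partner_mac"] in (None, "00:00:00:00:00:00"):
        problems.append("no LACP partner: no LACPDUs received from the switch")
    for name, info in status["slaves"].items():
        if info.get("mii") != "up":
            problems.append(f"{name}: link is not up")
        elif info.get("aggregator") != status["active_aggregator"]:
            problems.append(f"{name}: not in the active aggregator")
    return problems


if __name__ == "__main__":
    path = Path("/proc/net/bonding/bond0")  # assumes the bond is named bond0
    if path.exists():
        for hint in lacp_problems(parse_bonding_status(path.read_text())) or ["LACP looks negotiated"]:
            print(hint)
    else:
        print(f"{path} not found (bonding driver not loaded or no bond0)")
```

This only shows the host side; whether the switch considers the four ports aggregated has to be checked on the switch itself.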

Can you please help? Where are my mistakes?

Thank you!