Hi, I have a somewhat related question that I'm hoping someone can shed some light on.

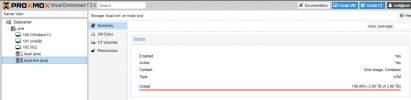

I have set up PVE on a 500 GB NVMe drive, using LVM with the ext4 file system.

During setup I explicitly set local-lvm (maxvz) to 32 GB, because I will be deploying a ZFS RAID 1 pool for my VM storage.

I left all other fields empty, so they should use their defaults.

According to this page:

https://pve.proxmox.com/wiki/Installation

1. The maximum size of the root volume is hdsize/4; mine shows as ~100 GB, which checks out.

2. With more than 128 GB of storage available, the default minfree is 16 GB; otherwise hdsize/8 is used.
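If I'm reading that page correctly, these rules (plus the one for maxvz, the cap on the local-lvm data volume) amount to roughly the following, where hdsize is the disk size given to the installer. This is my own summary of the wiki, not the installer's actual code, so rounding and details such as the thin pool's metadata may differ:

$$
\begin{aligned}
\text{rootsize} &\le \frac{\text{hdsize}}{4} \\
\text{minfree} &= \begin{cases} 16\ \text{GB} & \text{if } \text{hdsize} > 128\ \text{GB} \\ \text{hdsize}/8 & \text{otherwise} \end{cases} \\
\text{datasize} &= \min\bigl(\text{hdsize} - \text{rootsize} - \text{swapsize} - \text{minfree},\ \text{maxvz}\bigr)
\end{aligned}
$$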

Shouldn't I fall into that first case, since I have well over 128 GB of storage available?

Here is some useful output from the system:

Bash:

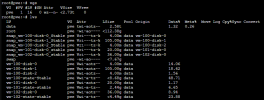

root@homeserver:~# df -h

Filesystem Size Used Avail Use% Mounted on

udev 7.6G 0 7.6G 0% /dev

tmpfs 1.6G 1.1M 1.6G 1% /run

/dev/mapper/pve-root 94G 2.9G 87G 4% /

tmpfs 7.7G 34M 7.6G 1% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

/dev/nvme0n1p2 1022M 344K 1022M 1% /boot/efi

/dev/fuse 128M 16K 128M 1% /etc/pve

tmpfs 1.6G 0 1.6G 0% /run/user/0

root@homeserver:~# vgs

VG #PV #LV #SN Attr VSize VFree

pve 1 3 0 wz--n- <464.76g 328.76g

root@homeserver:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

data pve twi-a-tz-- <30.00g 0.00 1.58

root pve -wi-ao---- 96.00g

swap pve -wi-ao---- 8.00g

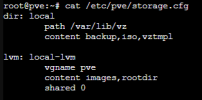

```bash
root@homeserver:~# cat /etc/pve/storage.cfg
dir: local
        path /var/lib/vz
        content iso,vztmpl,backup

lvmthin: local-lvm
        thinpool data
        vgname pve
        content rootdir,images
root@homeserver:~# lsblk
NAME                 MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
nvme0n1              259:0    0 465.8G  0 disk
├─nvme0n1p1          259:1    0  1007K  0 part
├─nvme0n1p2          259:2    0     1G  0 part /boot/efi
└─nvme0n1p3          259:3    0 464.8G  0 part
  ├─pve-swap         253:0    0     8G  0 lvm  [SWAP]
  ├─pve-root         253:1    0    96G  0 lvm  /
  ├─pve-data_tmeta   253:2    0     1G  0 lvm
  │ └─pve-data       253:4    0    30G  0 lvm
  └─pve-data_tdata   253:3    0    30G  0 lvm
    └─pve-data       253:4    0    30G  0 lvm
root@homeserver:~#
```

So, on to my question:

The vgs output shows 328.76 GB VFree, which adds up correctly given the three LVs.
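Spelled out, the three LVs alone (96 + 8 + 30) leave about 2 GiB unaccounted for. That difference appears to be thin-pool metadata: the 1 GiB pve-data_tmeta volume visible in lsblk, plus a hidden spare metadata volume of the same size that LVM normally keeps for a thin pool. The spare volume is my assumption, since it does not appear in the output above. All values are in GiB as reported by vgs/lvs (lowercase `g`), and VSize is slightly under 464.76:

$$
\underbrace{464.76}_{\text{VSize}} - \underbrace{96}_{\text{root}} - \underbrace{8}_{\text{swap}} - \underbrace{30}_{\text{data}} - \underbrace{1}_{\text{data\_tmeta}} - \underbrace{1}_{\text{pmspare (assumed)}} \approx 328.76 = \text{VFree}
$$

If that assumption is right, running `lvs -a pve` should also list the hidden volumes ([data_tmeta], [data_tdata] and [lvol0_pmspare]).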

Why is this, and can this space be used by the system in any way? Or is it just wasted storage?

I'm redoing my system, so I'm happy to rebuild.

Would I be better off manually setting the maximum root size (maxroot) to a larger value, or setting the minimum free space (minfree) to a smaller one? I presume I need to be careful when doing this?

Thanks in advance!