why are you patching a Tesla P4 ?I just did a brand new install of Proxmox 8.2.2. Followed PolloLoco's guide and since I have a Tesla P4 installed I used 535.161.05 and applied the patch. Everything worked every step of the way without fail, but when I domdevctl typesI get a list of profiles for the Tesla P40. I can only find one other instance of this happening in this thread [https://forum.proxmox.com/threads/vgpu-tesla-p4-wrong-mdevctl-gpu.143247/] but other than repeatedly trying other drivers until they ended back up on 535.104.06, they don't know what caused the profiles to start displaying correctly. Does anyone here have an idea?

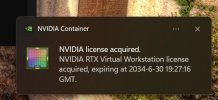

View attachment 69518

View attachment 69519

[TUTORIAL] PVE 8.22 / Kernel 6.8 and NVidia vGPU

- Thread starter dooferorg

- Tags

- nvidia nvidia-vgpu-vfio vgpu

The 16.x drivers didn't want to work with kernel 6.8; I had to apply a patch to make them install. When the card showed up as a P40, I followed another guide that runs a do-it-all script, which downgraded the kernel to 6.5 and pinned it, then installed the unpatched 16.x driver. The card still shows as a P40. I got it to pass through to my VMs (one Windows, one Linux) and it seems to be working, but I'm not sure it is taking full advantage of its capabilities. I also got a P4 specifically because it is natively supported and has a great price-to-performance ratio.

If I could get it to show as a P4 I would prefer that, but multiple posts in multiple forums, along with the Discord server linked by PolloLoco's guide, have netted me one singular reply, and that is yours.

Install the latest one, 550.90.05; it compiles just fine. The patch is for using unsupported cards. Yours is supported, so there is no need to patch. Also, remove the vgpuConfig.xml file before installing, to make sure that no ID replacement is made (by the patch).

/edit: if you're using 16.x because NVIDIA dropped support for Pascal, you can still install 550.90.05 on the host and then use the 16.5 guest driver, 538.46, in the Windows VM. The guest driver from 17.x will not detect the card, but the 16.x ones still work.

/edit2: sorry for my English, I'm going to try to make a quick guide:

1) uninstall everything

2) install (17.2) NVIDIA-Linux-x86_64-550.90.05-vgpu-kvm.run

3) extract (16.6) NVIDIA-Linux-x86_64-535.183.04-vgpu-kvm.run (NVIDIA-Linux-x86_64-535.183.04-vgpu-kvm.run -x)

4) copy extracted vgpuConfig.xml from 16.6 to /usr/share/nvidia/vgpu/vgpuConfig.xml (replace the existing file)

5) reboot

6) install windows vm, install guest drivers from 16.6 (538.67) (538.67_grid_win10_win11_server2019_server2022_dch_64bit_international.exe)
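
The host-side part of the quick guide above (steps 1 to 5) could be sketched roughly like this. It is only a dry run that prints the commands instead of executing them. The file names are the ones quoted in the post; using nvidia-uninstall for step 1 and finding vgpuConfig.xml at the top level of the extracted directory are assumptions, so check both on your own system before running anything for real.

```shell
#!/bin/sh
# Dry-run sketch of steps 1-5: every command is only printed, nothing runs.
HOST_RUN="NVIDIA-Linux-x86_64-550.90.05-vgpu-kvm.run"   # 17.2 host driver
XML_RUN="NVIDIA-Linux-x86_64-535.183.04-vgpu-kvm.run"   # 16.6, used only for its XML
XML_DST="/usr/share/nvidia/vgpu/vgpuConfig.xml"

plan() {
    # 1) remove the existing driver (assumption: installed from a .run file)
    printf '%s\n' "nvidia-uninstall"
    # 2) install the 17.2 host driver
    printf '%s\n' "sh ./$HOST_RUN"
    # 3) extract the 16.6 package without installing it
    printf '%s\n' "sh ./$XML_RUN -x"
    # 4) overwrite the installed XML (assumption: the file sits at the top
    #    level of the extracted directory)
    printf '%s\n' "cp ./${XML_RUN%.run}/vgpuConfig.xml $XML_DST"
    # 5) reboot the host
    printf '%s\n' "reboot"
}

plan
```

Step 6 happens inside the Windows guest, so it is not part of the host sketch.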

Thank you for this; I will definitely try it as soon as I can, probably this weekend. Does this work with the 6.8 kernel in Proxmox? If so, I will probably unpin the kernel and update it back to the latest.

Apparently another forum poster who used the script I mentioned discovered the same issue, but said after updating the driver it showed the mdevctl types.

https://forum.proxmox.com/threads/vgpu-tesla-p4-wrong-mdevctl-gpu.143247/post-679757

I have been looking for a cost effective way to play with nvidia vgpu - I am thinking a 2080ti off ebay is the most cost effective way to do this (cheaper than T4, L4 etc). I am not interested in gaming this would be for AI, transcoding etc.

Is my logic sound here or do i just need to accept i need to plump down the cash for a T4 / L4?

I'm currently using a GTX 1050 Ti (Pascal) with the latest drivers, and I don't see any problem with a 2080 Ti. Keep in mind that Turing is the latest supported architecture at the moment, so don't buy anything newer than that (30xx, 40xx, etc.).

Thanks for replying. Luckily I already knew there was no way to hack the 30xx / 40xx cards. I have to say the NVIDIA page of supported cards is very hard to parse. The 2080 Ti looks like good value for money to try, and it looks like I don't have to worry about NVIDIA licensing either?

Hey!

I have followed the steps to install the NVIDIA drivers on a Proxmox server. I have an L40S, so it should work with v16 or v17. I tried installing 535.161 and 550.90, and applied the patch to 535.161.

I used the following commands:

Code:

chmod +x NVIDIA-Linux-x86_64-XXX-vgpu-kvm.run

./NVIDIA-Linux-x86_64-XXX-vgpu-kvm.run

Then I get a variety of errors during the setup.

With 550.90:

Code:

Unable to load the kernel module 'nvidia.ko'. This happens most frequently when this kernel module was built against the wrong or improperly

configured kernel sources, with a version of gcc that differs from the one used to build the target kernel, or if another driver, such as nouveau,

is present and prevents the NVIDIA kernel module from obtaining ownership of the NVIDIA device(s), or no NVIDIA device installed in this system is

supported by this NVIDIA Linux graphics driver release.

Please see the log entries 'Kernel module load error' and 'Kernel messages' at the end of the file '/var/log/nvidia-installer.log' for more

information.

With 535.161 after patch:

Code:

ERROR: An error occurred while performing the step: "Checking to see whether the nvidia kernel module was successfully built". See /var/log/nvidia-installer.>

-> The command `cd ./kernel; /usr/bin/make -k -j32 NV_EXCLUDE_KERNEL_MODULES="" SYSSRC="/lib/modules/6.8.12-1-pve/build" SYSOUT="/lib/modules/6.8.12-1-pve/b>

If I do

Code:

uname -r

6.8.12-1-pve

This has been done on a fresh Proxmox 8.2.4 installation.

I really don't know what else to do to get this working. Any suggestions?

Sorry, that gives the same error:

Code:

Unable to load the kernel module 'nvidia.ko'. This happens most frequently when this kernel module was built against the wrong or improperly

configured kernel sources, with a version of gcc that differs from the one used to build the target kernel, or if another driver, such as nouveau,

is present and prevents the NVIDIA kernel module from obtaining ownership of the NVIDIA device(s), or no NVIDIA device installed in this system is

supported by this NVIDIA Linux graphics driver release.

Please see the log entries 'Kernel module load error' and 'Kernel messages' at the end of the file '/var/log/nvidia-installer.log' for more

information.

In the logs I see:

Code:

-> Kernel messages:

NVRM: Firmware' sections in the NVIDIA Virtual GPU (vGPU)

NVRM: Software documentation, available at docs.nvidia.com.

[41994.960797] nvidia: probe of 0000:82:00.0 failed with error -1

[41994.960812] NVRM: The NVIDIA probe routine failed for 2 device(s).

[41994.960814] NVRM: None of the NVIDIA devices were initialized.

[41994.960995] nvidia-nvlink: Unregistered Nvlink Core, major device number 510

[42412.786566] nvidia-nvlink: Nvlink Core is being initialized, major device number 510

[42412.788233] NVRM: The NVIDIA GPU 0000:e3:00.0 (PCI ID: 10de:26b9)

NVRM: installed in this vGPU host system is not supported by

NVRM: proprietary nvidia.ko.

NVRM: Please see the 'Open Linux Kernel Modules' and 'GSP

NVRM: Firmware' sections in the NVIDIA Virtual GPU (vGPU)

NVRM: Software documentation, available at docs.nvidia.com.

[42412.788294] nvidia: probe of 0000:e3:00.0 failed with error -1

[42412.788384] NVRM: The NVIDIA GPU 0000:82:00.0 (PCI ID: 10de:26b9)

NVRM: installed in this vGPU host system is not supported by

NVRM: proprietary nvidia.ko.

NVRM: Please see the 'Open Linux Kernel Modules' and 'GSP

NVRM: Firmware' sections in the NVIDIA Virtual GPU (vGPU)

NVRM: Software documentation, available at docs.nvidia.com.

[42412.788433] nvidia: probe of 0000:82:00.0 failed with error -1

[42412.788449] NVRM: The NVIDIA probe routine failed for 2 device(s).

[42412.788451] NVRM: None of the NVIDIA devices were initialized.

[42412.788631] nvidia-nvlink: Unregistered Nvlink Core, major device number 510
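
The kernel messages above name each GPU that the proprietary nvidia.ko refused, together with its PCI ID. As a small sketch, the addresses and IDs can be pulled out of such a log with sed. The function name unsupported_gpus is made up for illustration, and the pattern only assumes the "NVRM: The NVIDIA GPU ... (PCI ID: ...)" wording seen in this log.

```shell
#!/bin/sh
# unsupported_gpus: read kernel messages on stdin and print "ADDRESS PCI-ID"
# once for every GPU named in an "NVRM: The NVIDIA GPU ..." line.
unsupported_gpus() {
    sed -n 's/.*NVRM: The NVIDIA GPU \([0-9a-fA-F:.]*\) (PCI ID: \([0-9a-fA-F:]*\)).*/\1 \2/p' |
        LC_ALL=C sort -u
}

# Sample input copied from the log in the post.
sample='[42412.788233] NVRM: The NVIDIA GPU 0000:e3:00.0 (PCI ID: 10de:26b9)
[42412.788294] nvidia: probe of 0000:e3:00.0 failed with error -1
[42412.788384] NVRM: The NVIDIA GPU 0000:82:00.0 (PCI ID: 10de:26b9)'

printf '%s\n' "$sample" | unsupported_gpus
```

On a live host the same function could be fed from the kernel log, for example dmesg | unsupported_gpus.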

mdevctl types

returns nothing...

It is also quite odd: I have attached one GPU to one VM and the other to a second VM. After I reboot PVE, both GPUs are listed in nvidia-smi. When I start one VM and shut it down again (from within Windows in the VM), that GPU is no longer listed in nvidia-smi. When I try to start that VM again, I get a blue screen, as the GPU "does not exist".

When I then use Shutdown | Stop on the VM through Proxmox and restart it, it works fine. It always seems to work if I shut down through Proxmox and never if I shut down from within the Windows VM...

Is this normal behavior?

No... Indeed, I had not set iommu=pt. That is a good suggestion, as it might make things more stable.

Now it says:

Code:

GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on iommu=pt"

update-grub

reboot

Sadly, it did not solve the problem that Windows will not boot without a blue screen the second time I start the VM. Also, oddly, the very first time I start the VM it goes straight to recovery mode (not a BSOD). The second time works; the third time gives a BSOD (driver power state error). Only after a forced shutdown, or after the reboot that follows the BSOD, does it work again.

I would indeed like to pass parts of the GPU instead of only the full GPU. I have vGPU licenses available that I hope to be able to use soon.

Currently, though, the only thing I could get to work (not entirely stable) is the full GPU. The idea is to pass through one of the vGPU profiles (Q, C, B or A profiles) per VM, so we can test the needs of our applications.
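
After changing GRUB_CMDLINE_LINUX_DEFAULT and rebooting, it can be worth confirming that the flags actually reached the running kernel. A minimal sketch follows; the helper names has_flag and check_iommu are made up for illustration, and the two flags checked are simply the ones from the post.

```shell
#!/bin/sh
# has_flag CMDLINE FLAG: succeed if FLAG appears as a whole word in CMDLINE.
has_flag() {
    case " $1 " in
        *" $2 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# check_iommu CMDLINE: report whether each flag from the post is present.
check_iommu() {
    for flag in amd_iommu=on iommu=pt; do
        if has_flag "$1" "$flag"; then
            echo "$flag: present"
        else
            echo "$flag: missing"
        fi
    done
}

# Checked here against the string from the post. On a live host the running
# kernel's parameters are in /proc/cmdline:
#   check_iommu "$(cat /proc/cmdline)"
check_iommu "quiet amd_iommu=on iommu=pt"
```

If a flag shows as missing after a reboot, the host may not be booting through GRUB at all, in which case editing /etc/default/grub has no effect.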

I have further checked the whole setup; it seems to correspond with the requirements in

https://gitlab.com/polloloco/vgpu-proxmox

I see the following:

Code:

root@pve:~# nvidia-smi vgpu -s

GPU 00000000:82:00.0

NVIDIA L40S-1B

NVIDIA L40S-2B

NVIDIA L40S-1Q

NVIDIA L40S-2Q

NVIDIA L40S-3Q

NVIDIA L40S-4Q

NVIDIA L40S-6Q

NVIDIA L40S-8Q

NVIDIA L40S-12Q

NVIDIA L40S-16Q

NVIDIA L40S-24Q

NVIDIA L40S-48Q

NVIDIA L40S-1A

NVIDIA L40S-2A

NVIDIA L40S-3A

NVIDIA L40S-4A

NVIDIA L40S-6A

NVIDIA L40S-8A

NVIDIA L40S-12A

NVIDIA L40S-16A

NVIDIA L40S-24A

NVIDIA L40S-48A

GPU 00000000:E3:00.0

NVIDIA L40S-1B

NVIDIA L40S-2B

NVIDIA L40S-1Q

NVIDIA L40S-2Q

NVIDIA L40S-3Q

NVIDIA L40S-4Q

NVIDIA L40S-6Q

NVIDIA L40S-8Q

NVIDIA L40S-12Q

NVIDIA L40S-16Q

NVIDIA L40S-24Q

NVIDIA L40S-48Q

NVIDIA L40S-1A

NVIDIA L40S-2A

NVIDIA L40S-3A

NVIDIA L40S-4A

NVIDIA L40S-6A

NVIDIA L40S-8A

NVIDIA L40S-12A

NVIDIA L40S-16A

NVIDIA L40S-24A

NVIDIA L40S-48A

root@pve:~# nvidia-smi vgpu -q

GPU 00000000:82:00.0

Active vGPUs : 0

GPU 00000000:E3:00.0

Active vGPUs : 0

root@pve:~# nvidia-smi

Sat Aug 10 14:47:07 2024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.90.05 Driver Version: 550.90.05 CUDA Version: N/A |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA L40S On | 00000000:82:00.0 Off | 0 |

| N/A 33C P8 37W / 350W | 0MiB / 46068MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

| 1 NVIDIA L40S On | 00000000:E3:00.0 Off | 0 |

| N/A 35C P8 39W / 350W | 0MiB / 46068MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+

So, the vGPUs seem to be visible, but they do not come through.

Code:

root@pve:~# mdevctl types

root@pve:~#

mdevctl is still empty.

I have installed vgpu_unlock-rs in /opt.

I have also installed the Client Config Token from NVidia in the VM that I want to use.

I tried using

Code:

echo '4' > /sys/class/drm/card0/device/sriov_numvfs

but that does not work.

Running

Code:

root@pve:/opt/vgpu_unlock-rs# systemctl status nvidia-vgpu-mgr.service

● nvidia-vgpu-mgr.service - NVIDIA vGPU Manager Daemon

Loaded: loaded (/lib/systemd/system/nvidia-vgpu-mgr.service; enabled; preset: enabled)

Drop-In: /etc/systemd/system/nvidia-vgpu-mgr.service.d

└─vgpu_unlock.conf

Active: active (running) since Sat 2024-08-10 14:56:46 CEST; 21s ago

Process: 6497 ExecStart=/usr/bin/nvidia-vgpu-mgr (code=exited, status=0/SUCCESS)

Main PID: 6498 (nvidia-vgpu-mgr)

Tasks: 1 (limit: 629145)

Memory: 352.0K

CPU: 6ms

CGroup: /system.slice/nvidia-vgpu-mgr.service

└─6498 /usr/bin/nvidia-vgpu-mgr

Aug 10 14:56:46 pve nvidia-vgpu-mgr[6498]: NvA081CtrlVgpuConfigGetVgpuTypeInfoParams {

vgpu_type: 1158,

vgpu_type_info: NvA081CtrlVgpuInfo {

vgpu_type: 1158,

vgpu_name: "NVIDIA L40S-2A",

vgpu_class: "NVS",

vgpu_signature: [],

license: "GRID-Virtual-Apps,3.0",

max_instance: 24,

num_heads: 1,

max_resolution_x: 1280,

max_resolution_y: 1024,

max_pixels: 1310720,

frl_config: 60,

cuda_enabled: 0,

ecc_supported: 0,

gpu_instance_size: 0,

multi_vgpu_supported: 0,

vdev_id: 0x26b91896,

pdev_id: 0x26b9,

profile_size: 0x80000000,

fb_length: 0x78000000,

seems to indicate all is running correctly. However, setting the parameters of the VM does not work:

Code:

root@pve:/opt/vgpu_unlock-rs# nano /etc/pve/qemu-server/100.conf

...

hostpci0: 0000:e3:00.0,pcie=1,vgpu=NVIDIA-L40S-2A

...

Running the VM results in an error:

Code:

root@pve:/opt/vgpu_unlock-rs# qm start 100

vm 100 - unable to parse value of 'hostpci0' - format error

vgpu: property is not defined in schema and the schema does not allow additional properties

swtpm_setup: Not overwriting existing state file.

Sorry for the mountain of code, but I thought it best to be thorough... Maybe this rings a bell?
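
The error above says that vgpu is not a key the hostpci0 schema knows. As far as I know, Proxmox takes the mediated device type through the mdev key instead. A quick sketch for spotting unknown keys in a hostpci value before starting the VM is shown below. The function name unknown_hostpci_keys is made up, and the key list is an assumption copied by hand from the qm.conf man page, so compare it with man qm.conf on your PVE version.

```shell
#!/bin/sh
# Keys accepted in a hostpciN value (assumption: hand-copied from qm.conf(5)).
KNOWN_KEYS="host device-id legacy-igd mapping mdev pcie rombar romfile sub-device-id sub-vendor-id vendor-id x-vga"

# unknown_hostpci_keys VALUE: print every key in VALUE that is not in KNOWN_KEYS.
unknown_hostpci_keys() {
    # Split the value on commas, one field per line.
    printf '%s\n' "$1" | tr ',' '\n' | while IFS= read -r field; do
        case "$field" in
            *=*) key=${field%%=*} ;;   # key=value pair: keep the key
            *) continue ;;             # bare PCI address: nothing to check
        esac
        case " $KNOWN_KEYS " in
            *" $key "*) ;;             # known key: stay silent
            *) echo "$key" ;;          # unknown key: report it
        esac
    done
}

# The value from the post; this reports the offending key.
unknown_hostpci_keys "0000:e3:00.0,pcie=1,vgpu=NVIDIA-L40S-2A"
```

The same check on a value written with mdev= instead of vgpu= prints nothing. The type name given to mdev would still have to be one that mdevctl types lists on the host.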

I don't have a supported card; I'm currently using a 1050 Ti with this patcher: https://github.com/VGPU-Community-Drivers/vGPU-Unlock-patcher

# git clone --recursive https://github.com/VGPU-Community-Drivers/vGPU-Unlock-patcher

# cd vGPU-Unlock-patcher

# wget somewhere.NVIDIA-Linux-x86_64-550.90.05-vgpu-kvm.run

# ./patch.sh vgpu-kvm

# cd NVIDIA-Linux-x86_64-550.90.05-vgpu-kvm-patched

# ./nvidia-installer --dkms -m=kernel

That installs the full driver including DRM (no need for --no-drm). I then have the mediated devices needed for vGPU:

Code:

# mdevctl types

0000:10:00.0

nvidia-1024

Available instances: 0

Device API: vfio-pci

Name: GRID P40-1Q

Description: num_heads=4, frl_config=60, framebuffer=1024M, max_resolution=3840x2160, max_instance=4

nvidia-2048

Available instances: 0

Device API: vfio-pci

Name: GRID P40-2Q

Description: num_heads=4, frl_config=60, framebuffer=2048M, max_resolution=3840x2160, max_instance=2

nvidia-4096

Available instances: 0

Device API: vfio-pci

Name: GRID P40-4Q

Description: num_heads=4, frl_config=60, framebuffer=4096M, max_resolution=3840x2160, max_instance=1

nvidia-512

Available instances: 4

Device API: vfio-pci

Name: GRID P40-0.5Q

Description: num_heads=4, frl_config=60, framebuffer=512M, max_resolution=3840x2160, max_instance=8

Code:

# nvidia-smi

Sat Aug 10 10:22:59 2024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 550.90.05 Driver Version: 550.90.05 CUDA Version: N/A |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA GeForce GTX 1050 Ti On | 00000000:10:00.0 Off | N/A |

| 45% 25C P8 N/A / 75W | 1973MiB / 4096MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| 0 N/A N/A 2842 C+G vgpu 488MiB |

| 0 N/A N/A 2907 C+G vgpu 488MiB |

| 0 N/A N/A 2977 C+G vgpu 488MiB |

| 0 N/A N/A 3047 C+G vgpu 488MiB |

+-----------------------------------------------------------------------------------------+

It's a small card, so I created my own profiles with 512 MB of RAM. I also add the device to the VM through the GUI rather than editing the .conf.

Once I forgot to add the MDev type, and I had those strange BSODs you are talking about. About IOMMU: make sure you're using GRUB; if not, the correct file is /etc/kernel/cmdline and the update is done with # proxmox-boot-tool refresh.
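
In the mdevctl types listing above, only nvidia-512 still has free instances. A small sketch for filtering such output down to the types that can still be assigned is shown here. The function name available_types is made up, and the awk program only assumes the "nvidia-N" and "Available instances: N" line shapes visible in the listing.

```shell
#!/bin/sh
# available_types: read `mdevctl types` output on stdin and print
# "TYPE FREE_INSTANCES" for every type with at least one free instance.
available_types() {
    awk '
        { sub(/^[ \t]+/, "") }                    # ignore indentation
        /^nvidia-[0-9]+$/ { type = $0; next }     # remember the current type
        /^Available instances:/ { if ($3 + 0 > 0) print type, $3 }
    '
}

# Sample input: two of the types from the listing in the post.
sample='0000:10:00.0
nvidia-1024
Available instances: 0
Device API: vfio-pci
Name: GRID P40-1Q
nvidia-512
Available instances: 4
Device API: vfio-pci
Name: GRID P40-0.5Q'

printf '%s\n' "$sample" | available_types
```

On the host this would be run as mdevctl types | available_types. If it prints nothing, either all instances are in use or no mediated device types were registered at all.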