> As stated in the initial release post, we require up-to-date Proxmox VE remotes. The metric export API endpoint was introduced in pve-manager 8.2.5; it seems like you are using a version older than that.
>
> Hope this helps!

Hi, thank you for the suggestion. After upgrading my PVE to 8.3.2, I can see the metrics, and the dashboard is working as well.
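A minimal sketch of the version check implied above, assuming GNU `sort -V` is available. The sample string is the `pve-manager/...` line posted later in this thread; on a real node one would presumably feed in the output of `pveversion` instead. The function names are made up for illustration.

```shell
#!/bin/sh
# Minimum pve-manager version named in the thread for the metric export API.
MIN_PVE_MANAGER="8.2.5"

# Extract "8.3.2" from a string such as
# "pve-manager/8.3.2/3e76eec21c4a14a7 (running kernel: 6.8.12-1-pve)".
pve_version_from() {
    printf '%s\n' "$1" | cut -d/ -f2
}

# Succeed if version $1 is greater than or equal to version $2.
# Relies on the version sort (-V) option of GNU sort.
version_ge() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

# Sample string copied from this thread (assumption: same format as `pveversion`).
sample="pve-manager/8.3.2/3e76eec21c4a14a7 (running kernel: 6.8.12-1-pve)"
current="$(pve_version_from "$sample")"

if version_ge "$current" "$MIN_PVE_MANAGER"; then
    echo "pve-manager $current is new enough (>= $MIN_PVE_MANAGER)"
else
    echo "pve-manager $current is too old (< $MIN_PVE_MANAGER)"
fi
```

Version sort is used instead of a plain string comparison so that something like 8.10.0 is treated as newer than 8.2.5.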

Proxmox Datacenter Manager - First Alpha Release

- Thread starter t.lamprecht


> Hi, thank you for the suggestion. After upgrading my PVE to 8.3.2, I can see the metrics, and the dashboard is working as well.

Great, thanks for letting me know that everything works okay now.

Thank you for the great addition to PVE infrastructure.


We've installed PDM in our environment and connected it to two clusters, all nodes running:

pve-manager/8.3.2/3e76eec21c4a14a7 (running kernel: 6.8.12-1-pve)

When trying to migrate the VM between clusters we get the following error message in the Target Network field:

Code:
Error: api error (status = 400 Bad Request): api returned unexpected data - failed to parse api response

The storage portion is populated successfully.

Any suggestions?

Datacenter Manager 0.1.10

P.S. I just realized that the fact that we are running PVE as instances in OpenStack with public/private networks may have something to do with it?

Public IP from PDM point of view: 172.22.1.142

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host noprefixroute
       valid_lft forever preferred_lft forever
2: ens3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 8950 qdisc pfifo_fast master vmbr0 state UP group default qlen 1000
    link/ether fa:16:3e:99:48:e2 brd ff:ff:ff:ff:ff:ff
    altname enp0s3
3: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 8950 qdisc noqueue state UP group default qlen 1000
    link/ether fa:16:3e:99:48:e2 brd ff:ff:ff:ff:ff:ff
    inet 10.0.0.98/24 brd 10.0.0.255 scope global dynamic vmbr0
       valid_lft 79339sec preferred_lft 79339sec
    inet 192.168.100.11/24 scope global vmbr0
       valid_lft forever preferred_lft forever
    inet6 fe80::f816:3eff:fe99:48e2/64 scope link
       valid_lft forever preferred_lft forever
4: ens7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 8950 qdisc pfifo_fast state UP group default qlen 1000
    link/ether fa:16:3e:b3:58:52 brd ff:ff:ff:ff:ff:ff
    altname enp0s7
    inet 10.1.0.216/24 brd 10.1.0.255 scope global dynamic ens7
       valid_lft 79575sec preferred_lft 79575sec
    inet6 fe80::f816:3eff:feb3:5852/64 scope link
       valid_lft forever preferred_lft forever


Blockbridge : Ultra low latency all-NVME shared storage for Proxmox - https://www.blockbridge.com/proxmox


> Have you set the permissions?

I think so...?! I'm connecting as "root".

UPD: I've reinstalled DCM.

Upgrading proxmox-datacenter-manager:amd64 (0.1.8, 0.1.10), proxmox-datacenter-manager-client:amd64 (0.1.8, 0.1.10) made DCM non-functional due to:

Code:
systemctl list-units --failed
  UNIT                           LOAD   ACTIVE SUB    DESCRIPTION
● proxmox-datacenter-api.service loaded failed failed Proxmox Datacenter Manager API daemon

Reboot didn't change anything.

Unit log:

Code:
Jan 05 17:12:05 pm-dcm systemd[1]: Starting proxmox-datacenter-api.service - Proxmox Datacenter Manager API daemon...
Jan 05 17:12:05 pm-dcm proxmox-datacenter-api[797]: applied rrd journal (2 entries in 0.001 seconds)
Jan 05 17:12:05 pm-dcm proxmox-datacenter-api[797]: rrd journal successfully committed (0 files in 0.000 seconds)
Jan 05 17:12:05 pm-dcm proxmox-datacenter-api[797]: thread 'main' panicked at /usr/share/cargo/registry/proxmox-rest-server-0.8.5/src/connection.rs:154:38:
Jan 05 17:12:05 pm-dcm proxmox-datacenter-api[797]: called `Result::unwrap()` on an `Err` value: ErrorStack([Error { code: 167772350, library: "SSL routines", function: "SSL_CTX_check_private_key", reason: "no private key assigned", file:>
Jan 05 17:12:05 pm-dcm proxmox-datacenter-api[797]: note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace
Jan 05 17:12:05 pm-dcm systemd[1]: proxmox-datacenter-api.service: Main process exited, code=exited, status=101/n/a
Jan 05 17:12:05 pm-dcm systemd[1]: proxmox-datacenter-api.service: Failed with result 'exit-code'.
Jan 05 17:12:05 pm-dcm systemd[1]: Failed to start proxmox-datacenter-api.service - Proxmox Datacenter Manager API daemon.

I discovered DCM somehow gets a different serial for a host certificate. How is that possible?

Code:
Jan 05 18:56:29 pm-dcm proxmox-datacenter-api[1316]: bad fingerprint: 47:69:ef:da:f8:1d:9d:e9:ca:34:29:85:90:26:54:39:8e:6e:ad:7a:bc:b3:55:fe:a3:98:5a:6d:70:47:04:98
Jan 05 18:56:29 pm-dcm proxmox-datacenter-api[1316]: expected fingerprint: 0c:65:97:47:d1:c5:eb:02:54:e8:40:0f:09:1b:3d:bb:a7:ae:cc:9c:73:38:c5:06:8f:28:00:14:be:57:dd:75

None of the certificates has a serial 47:69:ef:da:f8:1d:9d:e9:ca:34:29:85:90:26:54:39:8e:6e:ad:7a:bc:b3:55:fe:a3:98:5a:6d:70:47:04:98

Hello,

we hit a bug:

We tried to live-migrate a VM across clusters using DCM 0.1.10 and got these errors:

Code:
failed - failed to handle 'config' command - only root can set 'args' config
failed - failed to handle 'config' command - only root can set 'rng0' config

VM.conf:

Code:
agent: 1
args:
boot: order=scsi0
cores: 8
ide2: none,media=cdrom
memory: 32768
meta: creation-qemu=9.0.2,ctime=1733520413
name: posearch01
net0: virtio=00:ff:56:b9:79:ea,bridge=vmbr1002
onboot: 1
ostype: l26
reboot: 1
rng0: /dev/urandom
scsi0: local-zfs:vm-166-disk-0,aio=native,cache=none,format=raw,size=15G
scsihw: virtio-scsi-pci
smbios1: uuid=7feac005-3982-4477-b1b2-92826621c438
sockets: 1
tags: new_world
vmgenid: 8ab52681-90e9-44d9-9c4a-e338219fbd43

The tokens for DCM were created by DCM itself as root@pam.

When I removed the args and rng0 lines, the migration was successful!

When I tried to migrate it from the single node dell4 to the Proxmox cluster dc6, I could not select a Target Node. The menu is empty after picking the Target Remote.
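A small pre-migration check along the lines of the report above might look like this. It is only a sketch: the key list contains just `args` and `rng0` because those are the two the error messages name, and it may well be incomplete. The function name is made up, and the path in the usage note assumes the usual `/etc/pve/qemu-server/<vmid>.conf` location with VMID 166 inferred from the disk name.

```shell
#!/bin/sh
# Config keys that the thread reports as failing with "only root can set".
# Assumption: only these two are confirmed here; others may exist.
ROOT_ONLY_KEYS="args rng0"

# Print every key from ROOT_ONLY_KEYS that is present in the VM config file $1.
root_only_keys_in() {
    for key in $ROOT_ONLY_KEYS; do
        if grep -q "^${key}:" "$1"; then
            echo "$key"
        fi
    done
}
```

Usage, for example: `root_only_keys_in /etc/pve/qemu-server/166.conf`. An empty result would suggest that neither of the two reported keys is set.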

I tested the Datacenter Manager today with a brand-new test cluster.

(It is virtualized on a Proxmox cluster.)

First things first: what have I done?

I set up my first PVE node and added it to PDM.

After that I built the cluster with two more nodes.

These didn't appear automatically (or I didn't wait long enough), but that's not a problem for me.

After building my test cluster, I added a Ceph pool (3 vDisks per server, 3 servers, 9 OSDs in total).

Each one has 32 GB.

The OS disk has 16 GB.

I have also added a NAS; it has 71.65 TiB (because I keep my .iso files on it).

BUG Reports:

1. Bug: wrong Host Storage Value

Now I noticed that PDM shows 79.829 TiB of 195.783 TiB.

This value is wrong.

2. Bug: Jump to Proxmox Host -> Everytime password prompt

If I use the "Jump-to" function in PDM and jump to the VM (see: 100 (ubuntu), the rightmost button in Stop / Start / Migrate / Jump-to), I land on the Proxmox server and get asked for my user and password every time.

3. Bug: Application panic:

When I click or hover on the "CPU Usage Graph" (or the other graphs), I get an application panic.

< Reason: panicked at /usr/share/cargo/registry/proxmox-yew-comp-0.3.5/src/rrd_graph_new.rs:637:41: index out of bounds: the len is 0 but the index is 0

I didn't find a better way to report bugs.

BUT:

I am happy to see this feature is under development and coming soon!


> I didn't find a better Way to report bugs.

You can use https://bugzilla.proxmox.com

This is also linked from the 'ALPHA' text at the top of the Datacenter Manager UI.

EDIT: (pressed enter too early)

> 2. Bug: Jump to Proxmox Host -> Everytime password prompt

This is already fixed on the PVE side in a newer version.

> 3. Bug: Application panic:

The graphs are empty because you use an older PVE version (PDM requires the newest PVE versions to work correctly).

The application panic is already known.

Thanks for reporting issues!

Because I'm a dummy, I had to delete and recreate the VM for the Datacenter Manager, and when I try to re-add my Proxmox servers it says the certs are already in use. How can I delete the old ones, and where are they, given that the old Manager VM is powered down?

api error (status = 400 Bad Request): error creating token: api error (status = 400 Bad Request): Parameter verification failed. tokenid: Token already exists.

I ran into the same issue as you and found the solution. The fingerprint it's getting is from the CA part of the certificate chain. What I ended up doing is using that CA fingerprint in the first step and was able to proceed. In the last step though where I identify endpoints, I had 3 results. 2 were my individual hosts with their respective fingerprints (only the hostname was showing), and one was the FQDN and port I identified in the first step with the CA cert fingerprint. I ran into an issue here too and the solution was to remove the 2 entries with only the hostnames, and make a new entry for my 2nd host with it's FQDN and port, using the same CA cert fingerprint. Now everything works for me!I discovered DCM somehow gets a different serial for a host certificate. How is that possible?

Code:Jan 05 18:56:29 pm-dcm proxmox-datacenter-api[1316]: bad fingerprint: 47:69:ef:da:f8:1d:9d:e9:ca:34:29:85:90:26:54:39:8e:6e:ad:7a:bc:b3:55:fe:a3:98:5a:6d:70:47:04:98 Jan 05 18:56:29 pm-dcm proxmox-datacenter-api[1316]: expected fingerprint: 0c:65:97:47:d1:c5:eb:02:54:e8:40:0f:09:1b:3d:bb:a7:ae:cc:9c:73:38:c5:06:8f:28:00:14:be:57:dd:75

None of the certificates has a serial 47:69:ef:da:f8:1d:9d:e9:ca:34:29:85:90:26:54:39:8e:6e:ad:7a:bc:b3:55:fe:a3:98:5a:6d:70:47:04:98

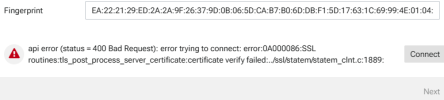

View attachment 80229

Last edited:
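To see which certificate in a chain a reported fingerprint belongs to, something like the following could help. It is a sketch, not what PDM does internally: it splits a PEM file into its certificates and prints the SHA-256 fingerprint of each in file order, lowercased to match the format in the log lines above. The function name and the file name in the usage note are hypothetical.

```shell
#!/bin/sh
# Print one lowercase SHA-256 fingerprint per certificate found in PEM file $1,
# in the order the certificates appear in the file.
chain_fingerprints() {
    awk '
        /-----BEGIN CERTIFICATE-----/ { cert = ""; in_cert = 1 }
        in_cert { cert = cert $0 "\n" }
        /-----END CERTIFICATE-----/ {
            # Feed this single certificate to openssl and keep only the hex part.
            cmd = "openssl x509 -noout -fingerprint -sha256 | cut -d= -f2 | tr A-F a-f"
            printf "%s", cert | cmd
            close(cmd)
            in_cert = 0
        }
    ' "$1"
}
```

Usage, for example: `chain_fingerprints fullchain.pem`. If the "bad fingerprint" from the log matches a line other than the host certificate's, that would be consistent with the CA explanation given above.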

> Because I'm a dummy, I had to delete and recreate the VM for the Datacenter Manager, and when I try to re-add my Proxmox servers it says the certs are already in use. How can I delete the old ones, and where are they, given that the old Manager VM is powered down?

Go into the host GUI, then under Datacenter find Permissions --> API Tokens, and delete the token that says auto-generated by PDM host 'proxmox-datacenter-manager'.

> Go into the host GUI, then under Datacenter find Permissions --> API Tokens, and delete the token that says auto-generated by PDM host 'proxmox-datacenter-manager'.

Many thanks!
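For those who prefer the shell on the PVE node over the GUI, the same cleanup can probably be done with `pveum`. A hedged sketch: the user `root@pam` is an assumption taken from another report in this thread, the token ID is a placeholder (the real one should come from `pveum user token list root@pam`), and the wrapper function is made up. By default it only prints the command instead of running it.

```shell
#!/bin/sh
# With DRY_RUN=1 (the default here) the command is printed, not executed.
DRY_RUN="${DRY_RUN:-1}"

# Remove a stale API token.
#   $1: user that owns the token (assumption: root@pam)
#   $2: token ID (placeholder; look up the real one with
#       `pveum user token list <user>` first)
remove_stale_token() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "pveum user token remove $1 $2"
    else
        pveum user token remove "$1" "$2"
    fi
}
```

Usage, for example: `remove_stale_token root@pam <tokenid>` to preview, then the same call with `DRY_RUN=0` set to actually remove the token.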

> I can't add a node.
>
> All nodes use certificates from our company
>
> View attachment 80402
>
> here is the cert
>
> View attachment 80403

Check my post just a bit above this one. It's possible you're running into the same issue I was. It seems like the Manager is (mistakenly?) looking for the fingerprint from the CA, not the certificate itself. It could be that, because we have to upload a chain, it takes the first fingerprint in the chain, which is the CA.

> Check my post just a bit above this one. It's possible you're running into the same issue I was. It seems like the Manager is (mistakenly?) looking for the fingerprint from the CA, not the certificate itself. It could be that, because we have to upload a chain, it takes the first fingerprint in the chain, which is the CA.

I don't quite understand the answer.

When adding a node there are only two fields: the IP + port and the fingerprint.

We can't get any further here, no matter which fingerprint I take from the three possible certificates of the node.

> I can't add a node.
>
> All nodes use certificates from our company
>
> View attachment 80402
>
> here is the cert
>
> View attachment 80403

You don't need a fingerprint if the server certificate is from a trusted CA (this can be your internal CA if you have added its certificate to the trusted ones).

Bash:
# put root CA cert to correct place
mv domain.crt /usr/local/share/ca-certificates/domain.crt
# update ca list... it should say something like "1 certificate added"
update-ca-certificates
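To check afterwards whether a node's certificate is actually accepted by the updated trust store, a quick `openssl verify` might do. This is a sketch with a made-up helper name. On Debian-based systems `update-ca-certificates` regenerates `/etc/ssl/certs/ca-certificates.crt`, which is used in the usage note; `node.pem` is a hypothetical file holding the node's certificate.

```shell
#!/bin/sh
# Succeed if certificate $2 verifies against the CA file or bundle $1.
# Note: if the node certificate is issued by an intermediate CA, that
# intermediate has to be available too (for example via -untrusted).
verify_with_ca() {
    openssl verify -CAfile "$1" "$2" >/dev/null 2>&1
}
```

Usage, for example: `verify_with_ca /etc/ssl/certs/ca-certificates.crt node.pem && echo trusted`.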