I am trying to set up the following:

A 3-node Proxmox cluster using EVPN between the nodes. This works as expected.

Now I want to connect the EVPN to a FortiGate as its uplink, using BGP.

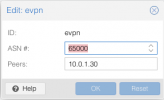

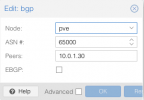

I have added a BGP controller on one node and configured that node as the exit node.

Routes are correctly advertised.

However, traffic sent from an LXC container leaves on the wrong interface and is not sent to the correct gateway:

PCAP:

```
10:32:49.632523 veth124i0 P IP 10.182.3.100 > one.one.one.one: ICMP echo request, id 57889, seq 6, length 64
10:32:49.632530 fwln124i0 Out IP 10.182.3.100 > one.one.one.one: ICMP echo request, id 57889, seq 6, length 64
10:32:49.632531 fwpr124p0 P IP 10.182.3.100 > one.one.one.one: ICMP echo request, id 57889, seq 6, length 64
10:32:49.632531 evpn01 In IP 10.182.3.100 > one.one.one.one: ICMP echo request, id 57889, seq 6, length 64
10:32:49.632545 vmbr0_182 Out IP 10.182.3.100 > one.one.one.one: ICMP echo request, id 57889, seq 6, length 64
10:32:49.632551 eno2 Out IP 10.182.3.100 > one.one.one.one: ICMP echo request, id 57889, seq 6, length 64
```

As you can see, the traffic leaves via vmbr0_182, the interface that holds the host's default gateway (10.182.2.1). It should instead be sent to 100.111.64.1, the next hop of the BGP-learned default route, which is directly connected on vmbr0_164.
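To make the hop sequence in the capture easier to follow, here is a small hypothetical Python helper (`CAPTURE` and `packet_path` are illustrative names, not part of any tool) that pulls the interface and direction columns out of the captured lines. It assumes the capture was taken on all interfaces, so every line starts with a timestamp, the interface name and a direction marker.

```python
# Hypothetical helper: list the interfaces one packet crossed, in order.
# Assumes "capture on any interface" output: timestamp, interface,
# direction marker (P / In / Out), then the packet summary.
CAPTURE = """\
10:32:49.632523 veth124i0 P IP 10.182.3.100 > one.one.one.one: ICMP echo request, id 57889, seq 6, length 64
10:32:49.632530 fwln124i0 Out IP 10.182.3.100 > one.one.one.one: ICMP echo request, id 57889, seq 6, length 64
10:32:49.632531 fwpr124p0 P IP 10.182.3.100 > one.one.one.one: ICMP echo request, id 57889, seq 6, length 64
10:32:49.632531 evpn01 In IP 10.182.3.100 > one.one.one.one: ICMP echo request, id 57889, seq 6, length 64
10:32:49.632545 vmbr0_182 Out IP 10.182.3.100 > one.one.one.one: ICMP echo request, id 57889, seq 6, length 64
10:32:49.632551 eno2 Out IP 10.182.3.100 > one.one.one.one: ICMP echo request, id 57889, seq 6, length 64
"""


def packet_path(capture: str) -> list:
    """Return (interface, direction) pairs in capture order."""
    path = []
    for line in capture.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or truncated lines
        _timestamp, iface, direction = fields[:3]
        path.append((iface, direction))
    return path


if __name__ == "__main__":
    for iface, direction in packet_path(CAPTURE):
        print(f"{direction:>3}  {iface}")
```

Read top to bottom, the packet enters the EVPN VRF on evpn01 and then leaves through vmbr0_182 and eno2; vmbr0_164 never appears.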

Output of `ip route`:

```
default via 10.182.2.1 dev vmbr0_182 proto kernel onlink
default nhid 149 via 100.111.64.1 dev vmbr0_164 proto bgp metric 20
10.42.1.0/24 nhid 149 via 100.111.64.1 dev vmbr0_164 proto bgp metric 20
10.42.42.0/24 nhid 149 via 100.111.64.1 dev vmbr0_164 proto bgp metric 20
10.42.55.0/24 nhid 149 via 100.111.64.1 dev vmbr0_164 proto bgp metric 20
10.182.0.0/24 nhid 150 via 100.111.64.2 dev vmbr0_164 proto bgp metric 20
10.182.1.0/24 nhid 150 via 100.111.64.2 dev vmbr0_164 proto bgp metric 20
10.182.2.0/24 dev vmbr0_182 proto kernel scope link src 10.182.2.101
10.212.134.254/31 nhid 149 via 100.111.64.1 dev vmbr0_164 proto bgp metric 20
100.111.64.0/29 dev vmbr0_164 proto kernel scope link src 100.111.64.3
100.111.64.10/31 nhid 149 via 100.111.64.1 dev vmbr0_164 proto bgp metric 20
100.111.64.12/31 nhid 149 via 100.111.64.1 dev vmbr0_164 proto bgp metric 20
151.248.130.0/24 nhid 149 via 100.111.64.1 dev vmbr0_164 proto bgp metric 20
192.168.101.0/24 dev vmbr1_101 proto kernel scope link src 192.168.101.101
192.168.102.0/24 dev vmbr1_102 proto kernel scope link src 192.168.102.101
```
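A minimal sketch of how the two `default` entries above compete, assuming (as the capture suggests) that the forwarding decision for this traffic ends in the host's main routing table. Linux picks the longest matching prefix and, among routes with the same prefix, the lowest metric; `ip route` does not print `metric` for IPv4 routes whose metric is 0. The model below is simplified: it ignores policy rules, VRF tables and multipath, uses only a subset of the table, and the names `Route`, `route`, `MAIN_TABLE` and `lookup` are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional

# Simplified model (assumption): longest-prefix match, then lowest metric.
# Policy routing rules, VRF tables and ECMP are deliberately ignored.


@dataclass
class Route:
    prefix: ipaddress.IPv4Network
    gateway: Optional[str]  # None for directly connected routes
    dev: str
    metric: int  # `ip route` omits "metric" for IPv4 routes with metric 0


def route(prefix: str, gateway: Optional[str], dev: str, metric: int = 0) -> Route:
    return Route(ipaddress.ip_network(prefix), gateway, dev, metric)


# Subset of the main table shown above.
MAIN_TABLE = [
    route("0.0.0.0/0", "10.182.2.1", "vmbr0_182"),            # proto kernel, no metric shown
    route("0.0.0.0/0", "100.111.64.1", "vmbr0_164", 20),      # proto bgp metric 20
    route("10.182.2.0/24", None, "vmbr0_182"),
    route("100.111.64.0/29", None, "vmbr0_164"),
    route("151.248.130.0/24", "100.111.64.1", "vmbr0_164", 20),
]


def lookup(table: List[Route], destination: str) -> Optional[Route]:
    dst = ipaddress.ip_address(destination)
    candidates = [r for r in table if dst in r.prefix]
    if not candidates:
        return None
    # Longest prefix first; among equal prefixes the lowest metric wins.
    return min(candidates, key=lambda r: (-r.prefix.prefixlen, r.metric))


if __name__ == "__main__":
    best = lookup(MAIN_TABLE, "1.1.1.1")
    print(best.gateway, best.dev)
```

Under these assumptions, a public destination such as 1.1.1.1 matches both default routes with the same prefix length, and the metric-0 route via 10.182.2.1 on vmbr0_182 beats the BGP route with metric 20, which matches what the capture shows.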

FRR routing table:

```
root@chsfl1-cl01-pve01:~# vtysh -c "sh ip route"
B>* 0.0.0.0/0 [20/0] via 100.111.64.1, vmbr0_164, weight 1, 00:49:13
B>* 10.42.1.0/24 [20/0] via 100.111.64.1, vmbr0_164, weight 1, 00:59:57
B>* 10.42.42.0/24 [20/10] via 100.111.64.1, vmbr0_164, weight 1, 00:59:57
B>* 10.42.55.0/24 [20/10] via 100.111.64.1, vmbr0_164, weight 1, 00:59:57
B>* 10.182.0.0/24 [20/0] via 100.111.64.2, vmbr0_164, weight 1, 00:59:57
B>* 10.182.1.0/24 [20/0] via 100.111.64.2, vmbr0_164, weight 1, 00:59:57
B 10.182.2.0/24 [20/0] via 100.111.64.1, vmbr0_164, weight 1, 00:59:57
C>* 10.182.2.0/24 is directly connected, vmbr0_182, 1d14h32m
B>* 10.182.3.0/24 [20/0] is directly connected, evpn01 (vrf vrf_evpn), weight 1, 00:49:14
B>* 10.212.134.254/31 [20/0] via 100.111.64.1, vmbr0_164, weight 1, 00:59:57
B 100.111.64.0/29 [20/0] via 100.111.64.1 inactive, weight 1, 00:59:57
C>* 100.111.64.0/29 is directly connected, vmbr0_164, 1d14h31m
B>* 100.111.64.10/31 [20/0] via 100.111.64.1, vmbr0_164, weight 1, 00:59:57
B>* 100.111.64.12/31 [20/0] via 100.111.64.1, vmbr0_164, weight 1, 00:59:57
B>* 151.248.130.0/24 [20/0] via 100.111.64.1, vmbr0_164, weight 1, 00:59:57
C>* 192.168.101.0/24 is directly connected, vmbr1_101, 1d14h32m
C>* 192.168.102.0/24 is directly connected, vmbr1_102, 1d14h32m
```
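The code column at the start of each FRR line can be decoded mechanically: per FRR's legend, the first letter is the protocol (`B` for BGP, `C` for connected), `>` marks the selected route and `*` a route installed in the FIB. The hypothetical helper below (`FRR_OUTPUT`, `parse_routes` and `default_routes` are illustrative names) applies that to a few of the lines above.

```python
# Hypothetical helper: decode the code column of FRR "show ip route" output.
# First letter = protocol, ">" = selected route, "*" = installed in the FIB.
FRR_OUTPUT = """\
B>* 0.0.0.0/0 [20/0] via 100.111.64.1, vmbr0_164, weight 1, 00:49:13
B 10.182.2.0/24 [20/0] via 100.111.64.1, vmbr0_164, weight 1, 00:59:57
C>* 10.182.2.0/24 is directly connected, vmbr0_182, 1d14h32m
B>* 10.182.3.0/24 [20/0] is directly connected, evpn01 (vrf vrf_evpn), weight 1, 00:49:14
B 100.111.64.0/29 [20/0] via 100.111.64.1 inactive, weight 1, 00:59:57
C>* 100.111.64.0/29 is directly connected, vmbr0_164, 1d14h31m
"""


def parse_routes(text: str) -> list:
    routes = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 2:
            continue  # skip blank lines
        codes, prefix = fields[0], fields[1]
        routes.append({
            "protocol": codes[0],
            "prefix": prefix,
            "selected": ">" in codes,
            "in_fib": "*" in codes,
        })
    return routes


def default_routes(routes: list) -> list:
    return [r for r in routes if r["prefix"] == "0.0.0.0/0"]


if __name__ == "__main__":
    for r in parse_routes(FRR_OUTPUT):
        print(r["protocol"], r["prefix"], "selected" if r["selected"] else "-",
              "fib" if r["in_fib"] else "-")
```

Decoded this way, FRR's only default route is the BGP one, marked as selected and installed; the host's default via 10.182.2.1 does not appear in this output at all.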

How can I get the SDN to send this traffic to the gateway learned by FRR via BGP instead of the PVE host's default gateway?