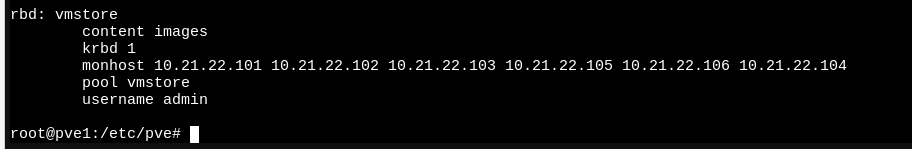

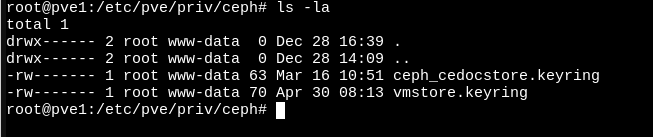

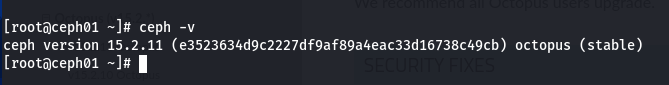

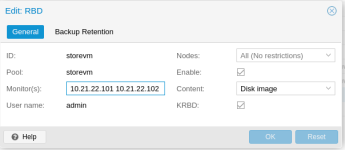

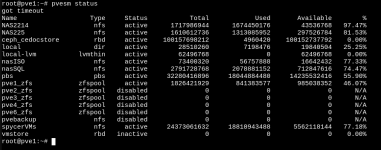

Good morning. I have a Proxmox cluster with 16 hosts and an external Ceph cluster that stores the VMs from Proxmox. It was working normally until we recently had to do maintenance on the storage. We moved all the VMs to another storage, removed the RBD storage from the Proxmox cluster, and reformatted the Ceph cluster. Afterwards we added the RBD storage back to Proxmox with the same IPs, but it no longer connects. I can bring it up on another Proxmox installation that I have, but not on this cluster, where it was configured before the removal. It now shows the status "unknown". My question is whether something was left behind that prevents Ceph from being connected again.
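One thing that may be worth ruling out (an assumption on my part, not something confirmed here): for an external RBD storage, Proxmox normally keeps the client keyring in `/etc/pve/priv/ceph/<storage-id>.keyring`, and a reformatted Ceph cluster usually issues new keys, so a keyring left over from before the maintenance could plausibly cause the timeout. The sketch below only lists the RBD entries in `/etc/pve/storage.cfg` and reports whether the matching keyring file exists. The helper names (`parse_rbd_storages`, `keyring_path`, `report`) are made up for illustration and are not part of any Proxmox tool.

```python
from pathlib import Path


def parse_rbd_storages(cfg_text):
    """Return {storage_id: {option: value}} for every 'rbd:' section.

    Assumes the usual storage.cfg layout: an unindented 'type: id' header
    followed by indented 'key value' option lines.
    """
    storages = {}
    current = None
    for raw in cfg_text.splitlines():
        if not raw.strip():
            current = None          # a blank line ends the current section
            continue
        if not raw[0].isspace():    # unindented line: section header
            kind, _, storage_id = raw.partition(":")
            current = {} if kind.strip() == "rbd" else None
            if current is not None:
                storages[storage_id.strip()] = current
        elif current is not None:   # indented option inside an rbd section
            key, _, value = raw.strip().partition(" ")
            current[key] = value.strip()
    return storages


def keyring_path(storage_id, base="/etc/pve/priv/ceph"):
    """Path where the keyring for an external RBD storage is expected."""
    return Path(base) / f"{storage_id}.keyring"


def report(cfg_path="/etc/pve/storage.cfg"):
    """Print each RBD storage with its monitors, pool and keyring status."""
    text = Path(cfg_path).read_text()
    for sid, opts in parse_rbd_storages(text).items():
        ring = keyring_path(sid)
        state = "present" if ring.exists() else "MISSING"
        print(f"{sid}: monhost={opts.get('monhost')} pool={opts.get('pool')}")
        print(f"  keyring {ring}: {state}")
```

Calling `report()` as root on one of the affected nodes prints the entries. If the keyring is present, comparing it with the one on the Proxmox host where the storage works would show whether the key is stale.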

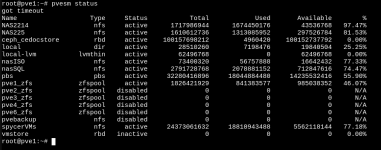

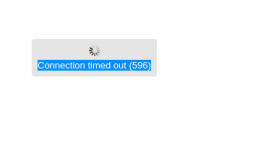

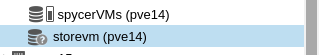

The screenshots show the vmstore RBD storage as inactive, but I don't see any error, only a timeout. On the other Proxmox the same storage works fine, but on this cluster I get this error.

Any ideas?

Many thanks
