# guest setup

# disable secureboot in guest bios # todo: see if we can get secureboot enabled, but I doubt it; not even the Azure ND series has it

# /etc/default/grub

GRUB_CMDLINE_LINUX_DEFAULT="quiet pci=realloc"
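
The grub change only takes effect after regenerating the grub config and rebooting the guest. A minimal sketch for confirming the parameter reached the running kernel; `has_kernel_param` is a hypothetical helper name, not part of any tool.

```shell
#!/usr/bin/env bash
# has_kernel_param PARAM [CMDLINE]
# Succeeds if PARAM appears as a whole word in CMDLINE.
# CMDLINE defaults to the running kernel's /proc/cmdline.
has_kernel_param() {
    local param="$1"
    local cmdline="${2:-$(cat /proc/cmdline)}"
    local words word
    read -ra words <<< "$cmdline"
    for word in "${words[@]}"; do
        if [ "$word" = "$param" ]; then
            return 0
        fi
    done
    return 1
}

# Typical use in the guest, after `sudo update-grub` and a reboot:
#   has_kernel_param pci=realloc && echo "pci=realloc active"
```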

# end of guest setup

# pve side guest setup

# command to set with qm

qm set <vmid> -args '-global q35-pcihost.pci-hole64-size=512G'

# host cpu

# q35

# uefi bios but no secureboot

# pve config (4x rtx 6000)

args: -global q35-pcihost.pci-hole64-size=512G # todo: verify that this is required

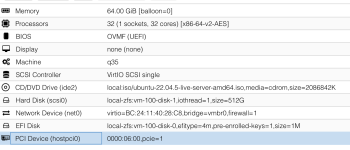

balloon: 0 # todo: verify that this is required, unsure of impact if ballooning permitted

bios: ovmf

boot: order=scsi0;ide2;net0

cores: 48

cpu: host # absolutely required, went from 2 to 8 visible GPUs with the host CPU type

efidisk0: local-zfs:vm-102-disk-0,efitype=4m,pre-enrolled-keys=1,size=1M

hostpci0: 0000:06:00.0,pcie=1

hostpci1: 0000:07:00.0,pcie=1

hostpci2: 0000:75:00.0,pcie=1

hostpci3: 0000:76:00.0,pcie=1

ide2: local:iso/ubuntu-22.04.5-live-server-amd64.iso,media=cdrom,size=2086842K

machine: q35

memory: 262144

meta: creation-qemu=10.0.2,ctime=1761713353

name: gpu-test-1

net0: virtio=BC:24:11:F2:EF:A0,bridge=vmbr0,firewall=1

numa: 0

ostype: l26

scsi0: local-zfs:vm-102-disk-1,iothread=1,size=256G

scsihw: virtio-scsi-single

smbios1: uuid=8685f01d-6ed9-46fa-9782-b615ad18a8b4

sockets: 1

tpmstate0: local-zfs:vm-102-disk-2,size=4M,version=v2.0
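
To cross-check the passthrough entries against the host, the PCI addresses can be pulled out of the VM config. A small sketch, assuming the `hostpciN: <address>,<options>` layout shown above; `hostpci_addrs` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# hostpci_addrs
# Reads a PVE VM config on stdin and prints the PCI address of every
# hostpciN entry, one per line (everything before the first comma).
hostpci_addrs() {
    sed -n 's/^hostpci[0-9]*: *\([^,]*\).*/\1/p'
}

# Typical use on the PVE host:
#   qm config <vmid> | hostpci_addrs
```

Each printed address can then be passed to `lspci -nnk -s <address>` on the host to see which device and driver sit behind it.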

# end of pve side guest setup

# host setup

# verify resizable BAR, above 4G decoding, SR-IOV and IOMMU are enabled in bios - todo: add host side verification script
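
A possible starting point for the host side check, covering only the IOMMU part: if the IOMMU is active, the kernel populates `/sys/kernel/iommu_groups`. This is a sketch, not a full verification script; the other firmware settings are not checked here, and `count_iommu_groups` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# count_iommu_groups [DIR]
# Prints the number of IOMMU groups under DIR
# (default: /sys/kernel/iommu_groups).
# Zero usually means the IOMMU is off in firmware or on the kernel cmdline.
count_iommu_groups() {
    local dir="${1:-/sys/kernel/iommu_groups}"
    local count=0 entry
    for entry in "$dir"/*; do
        if [ -e "$entry" ]; then
            count=$((count + 1))
        fi
    done
    echo "$count"
}

# Typical use on the host:
#   [ "$(count_iommu_groups)" -gt 0 ] || echo "no IOMMU groups, check bios and grub"
```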

# /etc/default/grub

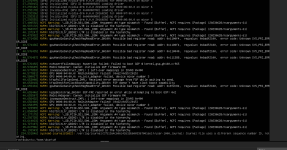

GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on iommu=pt vfio-pci.ids=10de:2bb5" # todo: verify whether vfio-pci.ids is required here, it has worked without it

cat /etc/modprobe.d/blacklist-gpu.conf # cut down from exhaustive list

blacklist radeon

blacklist nouveau

blacklist nvidia

cat /etc/modprobe.d/vfio.conf

options kvm ignore_msrs=1 report_ignored_msrs=0 # todo: verify if 100% required

options vfio-pci ids=10de:2bb5 disable_vga=1 disable_idle_d3=1 # verified: disable_idle_d3=1 is required, otherwise the devices enter a weird power state
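
Whether these options were actually picked up can be read back from sysfs once the modules are loaded. A minimal sketch; `module_param` is a hypothetical helper name, and the optional third argument exists only so the lookup root can be overridden.

```shell
#!/usr/bin/env bash
# module_param MODULE PARAM [ROOT]
# Prints the current value of a loaded module's parameter from
# ROOT/MODULE/parameters/PARAM (ROOT defaults to /sys/module).
# Returns 1 if the module is not loaded or the parameter is not exposed.
module_param() {
    local module="$1" param="$2" root="${3:-/sys/module}"
    local file="$root/$module/parameters/$param"
    if [ -r "$file" ]; then
        cat "$file"
    else
        echo "unavailable"
        return 1
    fi
}

# Typical use on the host (sysfs uses underscores in module names):
#   module_param vfio_pci disable_idle_d3
#   module_param kvm ignore_msrs
```

Boolean parameters read back as `Y` or `N`.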

cat /etc/modules-load.d/vfio.conf # required

vfio

vfio_iommu_type1

vfio_pci
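
After a reboot, each GPU should report `vfio-pci` as its kernel driver. A sketch that filters `lspci -nnk` output for devices that are not bound to it; `gpus_not_on_vfio` is a hypothetical helper name, and the parsing assumes the usual `lspci -k` layout (device line unindented, detail lines indented).

```shell
#!/usr/bin/env bash
# gpus_not_on_vfio
# Reads `lspci -nnk` output on stdin and prints the address of every
# device whose "Kernel driver in use" is missing or not vfio-pci.
gpus_not_on_vfio() {
    awk '
        # An unindented, non-empty line starts a new device record.
        NF > 0 && substr($0, 1, 1) != "\t" && substr($0, 1, 1) != " " {
            if (addr != "" && drv != "vfio-pci") print addr
            addr = $1
            drv = ""
        }
        /Kernel driver in use:/ { drv = $NF }
        END { if (addr != "" && drv != "vfio-pci") print addr }
    '
}

# Typical use on the host; empty output means every matching GPU is on vfio-pci:
#   lspci -nnk -d 10de:2bb5 | gpus_not_on_vfio
```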

# end of host setup