Hi, I'm trying to build a hyper-converged 3-node cluster with 4 OSDs per node on Proxmox, but I'm having some issues with the OSDs...

The first one is the rebalance speed: I've noticed that, even over a 10Gbps network, Ceph rebalances my pool at 1Gbps at most

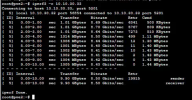

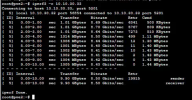

but iperf3 confirms that the link really does reach 10Gbps (it's a little slower right now since it's rebalancing; otherwise it's stable at basically 9.90Gbits)

All the OSDs are Samsung Enterprise 1.92TB SATA SSDs with a theoretical speed of 4160Mbps read and 3880Mbps write.
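
To compare these figures in the same units, here is a rough conversion sketch. It assumes decimal megabits and 8 bits per byte; the 1000 Mbps value stands in for the ~1Gbps rebalance ceiling I'm seeing, and the function name is just something I made up for illustration.

```python
def mbps_to_mbytes(mbps: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbps / 8


# Figures from this post; all inputs are in megabits per second.
ssd_read = mbps_to_mbytes(4160)    # rated read speed of one SSD
ssd_write = mbps_to_mbytes(3880)   # rated write speed of one SSD
rebalance = mbps_to_mbytes(1000)   # observed rebalance ceiling (~1 Gbps)
link = mbps_to_mbytes(10_000)      # nominal 10 Gbps network link

print(f"SSD read:   {ssd_read:.0f} MB/s")
print(f"SSD write:  {ssd_write:.0f} MB/s")
print(f"Rebalance:  {rebalance:.0f} MB/s")
print(f"10G link:   {link:.0f} MB/s")
```

So on paper the observed rebalance rate is well below both the rated write speed of a single SSD and the capacity of the link.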

Each node has 128GB RAM and 2x Xeon Gold 6150 18C/36T.

Proxmox version is 8.0.4.

Any type of help is really really appreciated!
