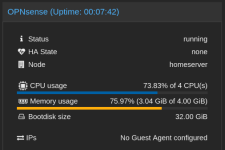

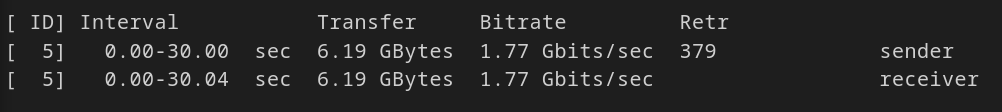

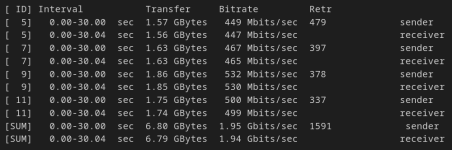

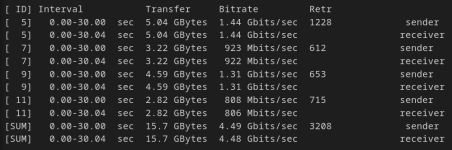

I am running an OPNsense VM in Proxmox. While VM-to-VM throughput on the same VLAN reaches ~32Gbps, routing across VLANs incurs a 94% penalty, dropping it to 2Gbps.

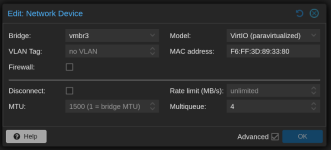
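For reference, the 94% figure is plain arithmetic on the two throughput numbers above. A minimal sketch; the function name `routing_penalty` is only illustrative:

```python
def routing_penalty(same_vlan_gbps: float, routed_gbps: float) -> float:
    """Return the fraction of throughput lost when traffic is routed across VLANs."""
    if same_vlan_gbps <= 0:
        raise ValueError("same_vlan_gbps must be positive")
    return 1.0 - routed_gbps / same_vlan_gbps


# Figures from the post: ~32 Gbps on the same VLAN, ~2 Gbps when routed.
penalty = routing_penalty(32.0, 2.0)
print(f"inter-VLAN routing penalty: {penalty:.2%}")  # 93.75%, i.e. roughly 94%
```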

I understand this is a limitation of emulating an L3 switch in software, whereas dedicated devices typically have tailor-made ASICs for routing packets. So far I have tried: a) setting multiqueue to 4; b) leaving hardware offloading disabled, which is the default; c) setting the CPU type to host and adding the aes flag anyway, just to be sure.

I am now assessing my options for getting as close to 10Gbps as I can.

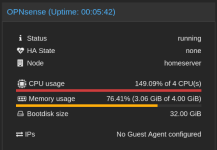

1. My current setup, which suffers from the problems detailed above. An i5-7400 sustains 2Gbps of routing with 4 cores assigned to the VM at ~30-50% CPU utilization.

2. Pass the NIC through to the VM, route the traffic out to the switch, then back in through another NIC attached to the bridge that the other VMs are connected to. This would presumably leverage the hardware on the NIC. It seems clunky and wasteful to have the traffic traverse the whole chain when the destination is on the same machine, not to mention that it requires 3 NICs.

3. SR-IOV? I have read that you can pass some of the virtual functions (VFs) to the VM to get near-native performance while still allowing the host access to the actual NIC. If I am not mistaken, this would be a mix of options #1 and #2, where inter-VLAN routing is not done by the CPU but offloaded to the NIC?

I was able to create VFs on my X520s, and passthrough to my OPNsense VM worked fine after shuffling cards around the PCIe slots because of IOMMU groupings. However, I am stuck trying to attach a VF to a bridge for the other VMs to use. I am trying to use it the same way I would a VLAN-aware Linux bridge, but it looks like I may have to take a different approach? While I have read a lot about SR-IOV, I admit I am having trouble digesting all this info.
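For anyone following along, VF creation on a Linux host goes through the standard sysfs files `sriov_totalvfs` and `sriov_numvfs` under the physical function's device directory. Below is a minimal sketch of that step, not a record of my exact commands. The interface name `enp1s0f0`, the helper names, and the `sysfs_root` parameter (there only so the logic can be exercised against a scratch directory) are assumptions. It has to run as root on the host and does nothing about bridging the VFs.

```python
from pathlib import Path


def vf_counts(iface: str, sysfs_root: str = "/sys") -> tuple[int, int]:
    """Return (currently enabled VFs, maximum supported VFs) for a physical function."""
    dev = Path(sysfs_root) / "class" / "net" / iface / "device"
    current = int((dev / "sriov_numvfs").read_text().strip())
    maximum = int((dev / "sriov_totalvfs").read_text().strip())
    return current, maximum


def set_vf_count(iface: str, count: int, sysfs_root: str = "/sys") -> None:
    """Enable `count` VFs on `iface` by writing to sriov_numvfs."""
    current, maximum = vf_counts(iface, sysfs_root)
    if not 0 <= count <= maximum:
        raise ValueError(f"{iface} supports at most {maximum} VFs, requested {count}")
    if current == count:
        return  # nothing to change
    numvfs = Path(sysfs_root) / "class" / "net" / iface / "device" / "sriov_numvfs"
    if current != 0:
        # The kernel rejects changing one non-zero VF count to another,
        # so existing VFs are torn down first.
        numvfs.write_text("0")
    if count != 0:
        numvfs.write_text(str(count))


# Example (hypothetical interface name, run as root on the Proxmox host):
#   set_vf_count("enp1s0f0", 4)
```

Each VF then shows up as its own PCI device that can be passed to a VM, which matches what I did for the OPNsense VM.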

What are my options? Should I take another approach altogether?