I am having a Problem with one Debian Guest VM (running Debian 12 Bookworm AMD64, currently running the Upgrade to Debian 13 Trixie AMD64).

It's extremely sluggish, even to just run `apt update`.

While compiling Kernel Modules (e.g. `zfs` via `dkms`) I can see that the CPU Usage never gets above 15% or so, which is weird.

All other VMs on the same Host seem to behave just fine.

At first I thought that maybe it was due to the fact that it was the only VM on that Server that was of `q35` Type (all others were `i440fx`), but even after switching to `i440fx` and removing the PCIe Device Passthrough (I was passing an LSI 9211-8i HBA through) the slowness didn't go away. I briefly also tried to set the VM Version to `9.0` or even the CPU Type from `host` to `Haswell-NoTSX`, but Things didn't improve much, if at all.

Host: Supermicro X10SLM+-F, Intel Xeon E3-1265L, 32GB RAM, Debian Trixie / Proxmox VE 9

Output of `pveversion`:

Code:

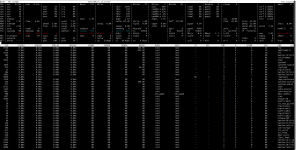

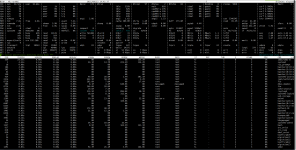

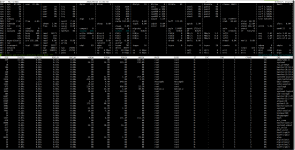

root@HOST:~# pveversion --verbose

proxmox-ve: 9.0.0 (running kernel: 6.8.12-15-pve)

pve-manager: 9.0.10 (running version: 9.0.10/deb1ca707ec72a89)

proxmox-kernel-helper: 9.0.4

proxmox-kernel-6.14.11-2-pve-signed: 6.14.11-2

proxmox-kernel-6.14: 6.14.11-2

proxmox-kernel-6.8: 6.8.12-15

proxmox-kernel-6.8.12-15-pve-signed: 6.8.12-15

pve-kernel-5.15.158-2-pve: 5.15.158-2

ceph-fuse: 19.2.3-pve1

corosync: 3.1.9-pve2

criu: 4.1.1-1

frr-pythontools: 10.3.1-1+pve4

ifupdown: residual config

ifupdown2: 3.3.0-1+pmx10

intel-microcode: 3.20250512.1

libjs-extjs: 7.0.0-5

libproxmox-acme-perl: 1.7.0

libproxmox-backup-qemu0: 2.0.1

libproxmox-rs-perl: 0.4.1

libpve-access-control: 9.0.3

libpve-apiclient-perl: 3.4.0

libpve-cluster-api-perl: 9.0.6

libpve-cluster-perl: 9.0.6

libpve-common-perl: 9.0.10

libpve-guest-common-perl: 6.0.2

libpve-http-server-perl: 6.0.4

libpve-network-perl: 1.1.8

libpve-rs-perl: 0.10.10

libpve-storage-perl: 9.0.13

libspice-server1: 0.15.2-1+b1

lvm2: 2.03.31-2+pmx1

lxc-pve: 6.0.5-1

lxcfs: 6.0.4-pve1

novnc-pve: 1.6.0-3

proxmox-backup-client: 4.0.15-1

proxmox-backup-file-restore: 4.0.15-1

proxmox-backup-restore-image: 1.0.0

proxmox-firewall: 1.1.2

proxmox-kernel-helper: 9.0.4

proxmox-mail-forward: 1.0.2

proxmox-mini-journalreader: 1.6

proxmox-offline-mirror-helper: 0.7.2

proxmox-widget-toolkit: 5.0.5

pve-cluster: 9.0.6

pve-container: 6.0.13

pve-docs: 9.0.8

pve-edk2-firmware: not correctly installed

pve-esxi-import-tools: 1.0.1

pve-firewall: 6.0.3

pve-firmware: 3.16-4

pve-ha-manager: 5.0.4

pve-i18n: 3.6.0

pve-qemu-kvm: 10.0.2-4

pve-xtermjs: 5.5.0-2

qemu-server: 9.0.22

smartmontools: 7.4-pve1

spiceterm: 3.4.1

swtpm: 0.8.0+pve2

vncterm: 1.9.1

zfsutils-linux: 2.3.4-pve1

Guest: Debian 12 AMD64 (currently upgrading to Debian 13 AMD64)

Any Idea what might cause such poor Performance while affecting only this one VM? Any Tips to troubleshoot?
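
Regarding the CPU Usage that never gets above 15% or so: one Thing that might help narrow it down is checking, from inside the Guest, where the remaining Time actually goes. Below is a minimal Sketch (not something I have run on this VM) that samples the aggregate `cpu` line of `/proc/stat` twice and prints the Share per State. The Function Names and the 5 Second Interval are arbitrary Choices for Illustration; the Field Order is assumed to follow proc(5).

```python
#!/usr/bin/env python3
"""Sample the aggregate "cpu" line of /proc/stat twice and print the share per state."""
import time

# Field order of the aggregate "cpu" line, as documented in proc(5).
FIELDS = ("user", "nice", "system", "idle", "iowait",
          "irq", "softirq", "steal", "guest", "guest_nice")


def parse_cpu_line(line):
    """Turn a 'cpu  ...' line into a dict of jiffies per state."""
    parts = line.split()
    if not parts or parts[0] != "cpu":
        raise ValueError("not an aggregate cpu line: %r" % line)
    values = [int(v) for v in parts[1:1 + len(FIELDS)]]
    return dict(zip(FIELDS, values))


def cpu_shares(before, after):
    """Percentage of elapsed jiffies spent in each state between two samples."""
    # guest and guest_nice are already counted inside user and nice,
    # so only the first eight states go into the total.
    states = FIELDS[:8]
    deltas = {k: after.get(k, 0) - before.get(k, 0) for k in states}
    total = sum(deltas.values())
    if total <= 0:
        return {k: 0.0 for k in states}
    return {k: 100.0 * d / total for k, d in deltas.items()}


def read_cpu_line(path="/proc/stat"):
    """Read the first line of /proc/stat, which is the aggregate cpu line."""
    with open(path) as f:
        return f.readline()


if __name__ == "__main__":
    first = parse_cpu_line(read_cpu_line())
    time.sleep(5)  # sampling interval, arbitrary choice
    second = parse_cpu_line(read_cpu_line())
    for state, pct in cpu_shares(first, second).items():
        print(f"{state:8s} {pct:5.1f}%")
```

If most of the Time lands in `iowait` while the Build runs, that would hint at the Storage Path rather than the CPU; a large `steal` Share would instead hint at Contention on the Host. Both are only rough Indicators, not a Diagnosis.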