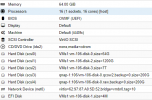

I have an Ubuntu Desktop VM with two hard disks. Both reside on an NFS server, connected over 10GbE. One disk is in raw format and the other in qcow2 format; the image files are called vm-106-disk-0.raw and vm-106-disk-0.qcow2. Both are 200 GB in size and attached via the VirtIO SCSI controller as SCSI bus/device. Neither has any advanced options set, and cache is set to Default (No cache).


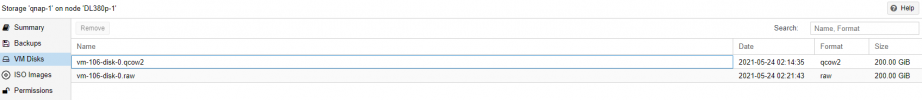

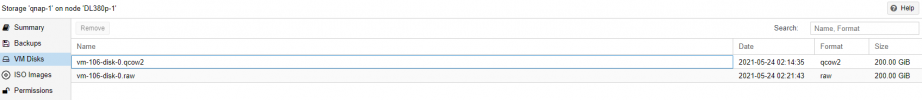


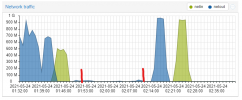

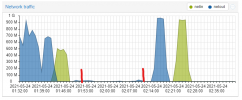


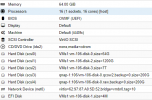


Now when I run the following script (qcow2 mounted at /data and raw mounted at /data2; I change the path in the script between tests):

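```bash
#!/bin/bash

# Start 16 parallel dd jobs, each writing 3,000,000 blocks of 4 KiB of zeros
for i in {1..16}
do
    dd if=/dev/zero of=/data/test-$i bs=4k count=3000000 &
done

# Wait for all background jobs and print the elapsed time (POSIX format)
time -p wait
```

I get weird results.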
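Because the script ends with `time -p wait`, the `real` line it prints can serve as a cross-check for iotop: the average aggregate rate is simply total bytes divided by elapsed seconds. A minimal bash sketch, where `avg_rate_mb_s` is a hypothetical helper name and the 200-second elapsed time is a made-up example value, not a measurement:

```bash
#!/bin/bash
export LC_ALL=C  # C locale: awk prints '.' as the decimal separator

# Average aggregate rate in MB/s (decimal): total bytes / wall-clock seconds.
# $1 = total bytes written, $2 = the "real" seconds printed by `time -p wait`
avg_rate_mb_s() {
    awk -v b="$1" -v s="$2" 'BEGIN { printf "%.0f\n", b / s / 1e6 }'
}

total_bytes=$(( 16 * 4096 * 3000000 ))  # 16 jobs x bs=4k x count=3000000
elapsed=200                              # hypothetical example, not measured
echo "average: $(avg_rate_mb_s "$total_bytes" "$elapsed") MB/s"
```

If the measured average came out far above roughly 1,200 MB/s, the data could not all have crossed a single 10 GbE link during the run.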

For the qcow2 disk, iotop shows Total DISK WRITE: 3.6 GB/s (about 36 Gb/s, impossible with a 10 GbE NIC), and no network traffic is shown on the node's summary page or on the NFS server.

For the raw disk, iotop shows Total DISK WRITE: 970 MB/s (about 9.7 Gb/s, almost full speed for a 10 GbE NIC), and the traffic is shown both on the Proxmox node summary page and in the NFS server's Resource Monitor.
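As a rough cross-check, this bash sketch converts the iotop figures with exactly 8 bits per byte, rather than the ×10 rule of thumb (which roughly allows for protocol overhead). It assumes iotop's GB/s and MB/s are decimal units; if they are binary (GiB/s, MiB/s), the results are a few percent higher. `to_gbps` and `exceeds_link` are hypothetical helper names.

```bash
#!/bin/bash
export LC_ALL=C  # C locale: awk prints '.' as the decimal separator

line_rate_gbps=10  # nominal 10 GbE line rate, ignoring protocol overhead

# Convert a rate in MB/s (decimal) to Gb/s using exactly 8 bits per byte
to_gbps() {
    awk -v mbs="$1" 'BEGIN { printf "%.1f\n", mbs * 8 / 1000 }'
}

# Succeeds (exit status 0) if the rate in MB/s exceeds the nominal line rate
exceeds_link() {
    awk -v mbs="$1" -v gbps="$line_rate_gbps" 'BEGIN { exit !(mbs * 8 / 1000 > gbps) }'
}

# iotop figures from the tests: qcow2 = 3.6 GB/s (3600 MB/s), raw = 970 MB/s
for rate in 3600 970; do
    if exceeds_link "$rate"; then verdict="exceeds"; else verdict="fits within"; fi
    echo "$rate MB/s = $(to_gbps "$rate") Gb/s, $verdict the $line_rate_gbps Gb/s link"
done
```

Even with the exact factor, the qcow2 figure (28.8 Gb/s) is almost three times what the link can carry, while the raw figure (7.8 Gb/s) fits.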

In the screenshots above you can see the image files next to each other in the same storage (the first from QNAP File Station, the second from PVE).

In the PVE node summary screenshot, the write and read tests on the qcow2 disk were run between the red markers. The QNAP Resource Monitor showed nothing for them either, but it did show the expected speed for the raw disk (at the same time as the graphs after the second red marker in the PVE screenshot).

The same happens with a read test using the following script (again, /data = qcow2 and /data2 = raw):

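```bash
#!/bin/bash

# Start 16 parallel reads, discarding the data; pv reports each read's progress and rate
for i in {1..16}
do
    pv /data/test5-$i > /dev/null &
done

# Wait for all background jobs and print the elapsed time (POSIX format)
time -p wait
```

None of this weird behaviour occurs when running the same tests directly on the node, against the NFS mount path /mnt/pve/qnap-1.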

What could be causing this, and where is the data going? It can't be going out at 36 Gb/s, since the NIC is only 10 GbE. df -h reports the space on both drives filling up, so the data has to go somewhere. There is also no network traffic after the test (as there would be if a cache were being flushed), and the total test data (16 × 12 GB) is more than the guest's RAM and even more than the RAM installed in the node, so it can't all be buffered in RAM.
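The total amount of test data follows directly from the dd parameters in the write script. A small bash sketch of the arithmetic, assuming GNU dd, where the `4k` suffix means 4 × 1024 bytes:

```bash
#!/bin/bash
export LC_ALL=C  # C locale: awk prints '.' as the decimal separator

# Parameters of the write test (GNU dd: bs=4k means 4 * 1024 bytes)
bs=4096
count=3000000
njobs=16

per_job=$(( bs * count ))     # bytes written by one dd job
total=$(( per_job * njobs ))  # bytes written by all parallel jobs

echo "per job: $per_job bytes"
echo "total:   $total bytes"
# awk does the floating-point conversion to GB (10^9) and GiB (2^30)
awk -v b="$total" 'BEGIN { printf "total:   %.1f GB (%.1f GiB)\n", b / 1e9, b / 2^30 }'
```

This prints about 12.3 GB per file and 196.6 GB (183.1 GiB) in total, slightly more than the 16 × 12 GB = 192 GB estimate.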

VM config:

EDIT:

In case it's important: the NFS server is a QNAP TS-1277XU-RP with a Ryzen 5 2600 CPU and 8 GB RAM. The disks are 12 TB Seagate IronWolf Pro drives, 10 in RAID10 plus 2 hot spares. Additionally, there is a write-only cache of two 500 GB Samsung 980 NVMe SSDs in RAID1, enabled for random I/O data blocks under 4 MB.

It is connected directly to both PVE nodes, without a switch, using 10 GbE DAC cables. The PVE nodes are HP ProLiant DL380p Gen8 servers with HP NC523 dual-port 10 GbE SFP+ NICs.
