I have a 3-node Ceph cluster. Each node has 4x 600 GB OSDs, and I have just one pool with size 3/2.

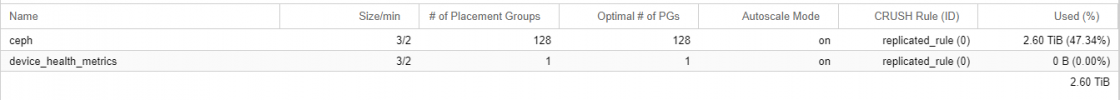

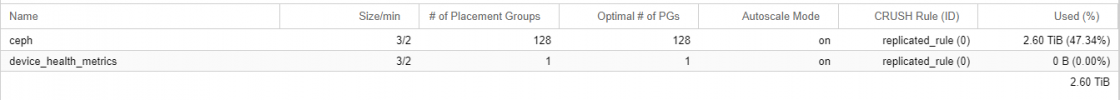

I expected to get a warning once used storage went over 33% (I mean just the data, not the replicas), but the cluster still looks healthy at over 40% and everything is green. I'm attaching some screenshots and the ceph df output. Can someone explain how it is possible to still have 2 replicas when storage is over 33% used?

Code:

    root@node1:~# ceph df
    --- RAW STORAGE ---
    CLASS  SIZE     AVAIL    USED     RAW USED  %RAW USED
    hdd    6.5 TiB  3.9 TiB  2.6 TiB  2.6 TiB   39.83
    TOTAL  6.5 TiB  3.9 TiB  2.6 TiB  2.6 TiB   39.83

    --- POOLS ---
    POOL                   ID  PGS  STORED   OBJECTS  USED     %USED  MAX AVAIL
    ceph                   1   128  885 GiB  230.34k  2.6 TiB  47.34  986 GiB
    device_health_metrics  4   1    0 B      0        0 B      0      986 GiB
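
For reference, here is a rough sanity check of the figures above. It is only a sketch: it assumes the pool's STORED column is a single copy of the data and that USED / RAW USED already count all 3 replicas, which the numbers seem to suggest. The variable and function names are made up for illustration; the values are copied from the ceph df output and the pool size of 3.

```python
# Values copied from the `ceph df` output; replicas = 3 comes from pool size 3/2.
GIB_PER_TIB = 1024

replicas = 3
stored_gib = 885        # POOLS -> STORED (assumed to be one copy of the data)
raw_total_tib = 6.5     # RAW STORAGE -> SIZE


def to_tib(gib):
    """Convert GiB to TiB."""
    return gib / GIB_PER_TIB


def percent(part, whole):
    """Return part as a percentage of whole."""
    return 100 * part / whole


# Raw space consumed if every stored byte is written `replicas` times.
raw_used_tib = to_tib(stored_gib * replicas)

# Share of raw capacity taken by all replicas vs. by a single copy.
raw_used_pct = percent(raw_used_tib, raw_total_tib)
single_copy_pct = percent(to_tib(stored_gib), raw_total_tib)

print(f"raw used with replicas: {raw_used_tib:.2f} TiB")   # 2.59 TiB
print(f"share of raw capacity:  {raw_used_pct:.1f} %")      # 39.9 %
print(f"single copy only:       {single_copy_pct:.1f} %")   # 13.3 %
```

If that assumption holds, the ~40% shown as %RAW USED would already include the replicas, and the data alone would be only about 13% of raw capacity, well below the 33% I was worried about.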