New Import Wizard Available for Migrating VMware ESXi Based Virtual Machines

- Thread starter t.lamprecht



Hi jad238,

thanks for the manifest file.

I'm not sure if this is the reason for the import troubles, but there's one entry where no datastore is recognized for a VM:


Code:

```
"vCLS-4c4c4544-0042-5310-8030-b1c04f5a3233": {
    "config": {
        "datastore": "",
        "path": "/var/run/crx/infra/vCLS-4c4c4544-0042-5310-8030-b1c04f5a3233/vCLS-4c4c4544-0042-5310-8030-b1c04f5a3233.vmx",
        "checksum": "b2cee0014fab6e951cd89d30c1e8a9bf8718f6e3f5d9903a7cada39fa8571fa6"
    },
    "disks": [],
    "power": "poweredOn"
},
```

Could you check what the actual storage configuration for this VM looks like on the ESXi side? Is it on the same datastore as the remaining ones?

Best regards,

Daniel
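To spot entries like this in a larger manifest, a small script can walk the JSON and report every VM whose `config.datastore` is empty. This is a sketch that assumes only what the excerpt shows (VM entries keyed by name, each with a `config` object holding `datastore` and `path`). The file name `manifest.json` and the nesting above the VM entries are assumptions, so the walker searches recursively instead of relying on a fixed layout.

```python
import json
import sys
from typing import Any, Iterator


def find_vms_without_datastore(node: Any, name: str = "") -> Iterator[tuple[str, str]]:
    """Yield (vm_name, vmx_path) for every VM entry whose config.datastore is empty.

    A "VM entry" is assumed to be any dict with a "config" dict that contains a
    "datastore" key, as in the manifest excerpt; the outer nesting is not assumed.
    """
    if isinstance(node, dict):
        config = node.get("config")
        if isinstance(config, dict) and "datastore" in config:
            if not config["datastore"]:
                yield name, config.get("path", "")
            return  # this dict is a VM entry, so don't descend into it
        for key, value in node.items():
            yield from find_vms_without_datastore(value, key)
    elif isinstance(node, list):
        for item in node:
            yield from find_vms_without_datastore(item, name)


def main(path: str) -> None:
    with open(path, encoding="utf-8") as fh:
        manifest = json.load(fh)
    for vm_name, vmx_path in find_vms_without_datastore(manifest):
        print(f"{vm_name}: no datastore (vmx at {vmx_path or 'unknown'})")


# Only run when a manifest path is given explicitly on the command line.
if __name__ == "__main__" and len(sys.argv) > 1:
    main(sys.argv[1])
```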

Hi Daniel,

vCLS-4c4c4544-0042-5310-8030-b1c04f5a3233 is a vSphere cluster VM which is required to maintain the health of vSphere cluster services (vCLS).

Some general context: vCLS configures a quorum of these VMs on the cluster. A vSphere Cluster Services VM is deployed from an OVA with a minimal installed profile of Photon OS. vSphere Cluster Services manages the resources, power state and availability of these VMs, and the VMs are required for maintaining the health and availability of vSphere Cluster Services. Any impact on the power state or resources of these VMs might degrade the health of vSphere Cluster Services and cause vSphere DRS to cease operation for the cluster.

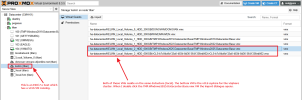

It's worth noting that a vCLS VM also exists on the ESXi 7.x host, from which migrating systems works perfectly fine. I’ve attached screenshots for reference. The ESXi 7.x host (kelvin) has a VM on the same datastore as the vCLS VM, and the import configuration dialogue opens without issue. To my knowledge, the vCLS VMs are the same across a vSphere cluster and are not ESXi-version specific, but I will verify whether there are any differences between versions.

As a test, I moved a VM to another datastore that does not have a vCLS VM and attempted the migration, with the same error result. So I’m not sure this is specific to the datastore on which the vCLS VM resides.


Hi jad238,

Thanks for the ESXi 7 screenshots.

It appears that on ESXi 7 the .vmx file of the vCLS machine resides on a datastore, while on the ESXi 8 host it resides somewhere on the host's root filesystem under /var/run/crx, which is not related to any datastore. This might confuse the importer.
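The distinction shows up in the VMX path itself. vSphere writes datastore-backed paths in the form `[datastoreName] folder/file.vmx`, whereas the ESXi 8 vCLS VM's path starts directly with `/var/run/crx`. The helper below is a hypothetical illustration (it is not the `parse_file_path` function shipped with the import tools, whose exact behaviour may differ): it splits such a path and returns an empty datastore name for anything without the `[...]` prefix.

```python
import re

# "[datastore1] folder/vm.vmx" -> datastore "datastore1", relative path "folder/vm.vmx"
_DATASTORE_PATH = re.compile(r"^\[(?P<ds>[^\]]*)\]\s*(?P<rel>.*)$")


def split_vmx_path(vm_path_name: str) -> tuple[str, str]:
    """Split a vSphere-style datastore path into (datastore, relative_path).

    Paths without a leading "[datastore]" prefix, such as a host-local
    /var/run/crx/... location, yield an empty datastore name.
    """
    match = _DATASTORE_PATH.match(vm_path_name)
    if match is None:
        return "", vm_path_name
    return match.group("ds"), match.group("rel")


print(split_vmx_path("[datastore1] web01/web01.vmx"))
print(split_vmx_path("/var/run/crx/infra/vCLS-x/vCLS-x.vmx"))
```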


Hi Daniel,

I investigated the vCLS VM in question more fully and found an issue with it. Because this host is no longer using shared disk (we are transitioning to a different storage platform), I was able to disable DRS and HA and temporarily enable retreat mode with no impact on the environment. This removed the vCLS VMs in this cluster (again, not a big deal in this instance). I then disabled retreat mode (switching the checkbox back to system managed), after which new vCLS VMs were created. The details regarding retreat mode can be found here.
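For reference, VMware documents retreat mode as a vCenter advanced setting named `config.vcls.clusters.domain-c<number>.enabled`, where `domain-c<number>` is the cluster's managed object ID: `false` removes the vCLS VMs, and setting it back to `true` lets vCenter redeploy them. The sketch below builds that key and, assuming pyVmomi and an already connected vCenter `ServiceInstance` named `si`, writes it through vCenter's `OptionManager`. It has not been tested against a live vCenter, and passing the value as a string is an assumption; the vSphere Client remains the safer route for production changes.

```python
def retreat_mode_key(cluster_moid: str) -> str:
    """Return the vCenter advanced-setting key that controls vCLS for one cluster.

    cluster_moid is the cluster's managed object ID, e.g. "domain-c8".
    """
    if not cluster_moid.startswith("domain-c"):
        raise ValueError(f"expected a cluster MoID like 'domain-c8', got {cluster_moid!r}")
    return f"config.vcls.clusters.{cluster_moid}.enabled"


def set_retreat_mode(si, cluster_moid: str, retreat: bool) -> None:
    """Enable (retreat=True) or leave (retreat=False) retreat mode on one cluster.

    Assumes `si` is a pyVmomi ServiceInstance connected to vCenter, not to an
    ESXi host. Retreat mode means vCLS is disabled, so the option becomes
    "false" when retreat=True. The string value type is an assumption.
    """
    from pyVmomi import vim  # lazy import: the key helper works without pyVmomi

    option = vim.option.OptionValue(
        key=retreat_mode_key(cluster_moid),
        value="false" if retreat else "true",
    )
    # ServiceContent.setting is vCenter's OptionManager (advanced settings).
    si.RetrieveContent().setting.UpdateOptions(changedValue=[option])
```

As the post above notes, DRS and HA depend on vCLS, so this only belongs in a planned change window.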

After making this change and allowing the new vCLS VMs to come back online (I waited about 5 minutes to be safe) I attempted another migration to Proxmox. This time the dialogue box opened and the import was successful.

I’m going to do some additional testing to verify fully. Since the vCLS VMs are specific to vCenter and won’t work on other platforms, maybe something can be done in the Proxmox migration tool to exclude/ignore those VMs.


Hi jad238,

This is hacky and not checked for safety or effectiveness, but if you're willing to take the risk and test it, you could try modifying "/usr/libexec/pve-esxi-import-tools/listvms.py":


Code:

```diff
--- #<buffer listvms.py<rust>>
+++ #<buffer listvms.py<libexec>>
@@ -265,6 +265,12 @@
     with connect_to_esxi_host(connection_args) as connection:
         data = {}
         for vm in list_vms(connection):
+            # skip vms with empty datastore_name
+            datastore_name, relative_vmx_path = parse_file_path(
+                vm.config.files.vmPathName
+            )
+            if not datastore_name:
+                continue
             try:
                 fetch_and_update_vm_data(vm, data)
             except Exception as err:
```

This should exclude all VMs with an empty datastore string (such as the vCLS machines) from the manifest.json file, so they no longer interfere with the ESXi storage activation.

If you want to get rid of these changes again, you can simply run `apt reinstall pve-esxi-import-tools`.
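To see what the patch changes without touching the installed file, the same skip logic can be exercised on stand-in objects. This sketch uses `types.SimpleNamespace` to mimic the `vm.config.files.vmPathName` attribute chain the patch reads, plus a simplified, hypothetical `parse_datastore` in place of the real `parse_file_path`; only the filtering step mirrors the patch.

```python
from types import SimpleNamespace


def parse_datastore(vm_path_name: str) -> str:
    """Hypothetical stand-in for parse_file_path: return the "[datastore]" name, or ""."""
    if vm_path_name.startswith("[") and "]" in vm_path_name:
        return vm_path_name[1:vm_path_name.index("]")]
    return ""


def importable_vm_names(vms) -> list[str]:
    """Mirror the patched loop: skip VMs whose VMX file is not on a datastore."""
    names = []
    for vm in vms:
        if not parse_datastore(vm.config.files.vmPathName):
            continue  # e.g. vCLS VMs under /var/run/crx on ESXi 8
        names.append(vm.name)
    return names


def fake_vm(name: str, vm_path_name: str) -> SimpleNamespace:
    """Build an object with the vm.name / vm.config.files.vmPathName shape."""
    return SimpleNamespace(
        name=name,
        config=SimpleNamespace(files=SimpleNamespace(vmPathName=vm_path_name)),
    )


vms = [
    fake_vm("web01", "[datastore1] web01/web01.vmx"),
    fake_vm("vCLS-4c4c", "/var/run/crx/infra/vCLS-4c4c/vCLS-4c4c.vmx"),
]
print(importable_vm_names(vms))  # ['web01']
```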

Hi All,

The import wizard has improved a lot: not just storage, you can now also configure VMs. It works without any issue.

Here is a step-by-step tutorial for those who are still struggling: Tutorial on VM Import Wizard Proxmox


Hi Daniel,

I was able to do more in-depth testing, and the changes to listvms.py seem to work. I did reverse those changes, as this is an ad hoc test and we don’t want to implement changes that are not fully tested, validated and implemented in an official patch/update from the vendor.

For others who may read this: another way this can be addressed as a “workaround” is to enable retreat mode in the vSphere cluster. Keep in mind that doing this has implications for the vSphere cluster, specifically around things like HA and DRS. I would only suggest doing this through proper change processes.

The best approach is to have this “fix” added to a future release/patch of Proxmox.

I greatly appreciate your help in tracking this down with us.


Hello,

FAQ

[...]

Q: Why can't I find updates for those packages?

A: As of this writing, these packages are available in the pvetest and the pve-no-subscription repositories.

Does this mean that if I have Proxmox VE 8.4 using the pve-no-subscription repositories and I get a support subscription, the packages will be removed? Are they available at all in the enterprise repositories, or when will they be?

No, it means that *back when this feature was initially announced for testing over a year ago*, it was only available in the non-enterprise repositories. This has obviously changed in the meantime.

I tried to use Veeam to restore, which was also limited to 1 Gbit/s, but backup can reach up to 7 Gbit/s.

This limitation is because of ESXi. There is nothing PVE can do about it.

You can use other means of transferring the data, e.g. shared storage (NFS) or Clonezilla.

See https://pve.proxmox.com/wiki/Migrate_to_Proxmox_VE
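To put the 1 Gbit/s versus 7 Gbit/s figures into perspective, here is a back-of-the-envelope estimate of how long copying a disk takes at a given line rate. It uses decimal units, ignores thin provisioning, and models protocol overhead only through an assumed `efficiency` factor; the 500 GB disk size is an arbitrary illustration.

```python
def transfer_hours(disk_gb: float, link_gbit_per_s: float, efficiency: float = 1.0) -> float:
    """Estimate hours to copy disk_gb gigabytes over a link of link_gbit_per_s.

    Decimal units (1 GB = 8 Gbit). `efficiency` in (0, 1] is an assumed factor
    for protocol overhead; 1.0 means the full line rate is achieved.
    """
    if disk_gb < 0 or link_gbit_per_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid arguments")
    seconds = disk_gb * 8 / (link_gbit_per_s * efficiency)
    return seconds / 3600


for rate in (1, 7):
    print(f"500 GB at {rate} Gbit/s: {transfer_hours(500, rate):.2f} h")
# 500 GB at 1 Gbit/s: 1.11 h
# 500 GB at 7 Gbit/s: 0.16 h
```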

We recently tried live migration and it was quite weird.

The host we migrated (from ESXi 8.0.3 to Proxmox 9) was a simple Linux VM with some docker containers.

While the target Proxmox server reported a constant ingress of around 800 Mbit/s from the ESXi node, the VM migration itself progressed very slowly, far slower than it should have considering that ingress rate.

Sometimes, when the VM did not access the disk, you could see that the progress was approximately 100 MB/s.

But when the VM began accessing the disk (mostly reads!), the progress dropped far below 100 MB/s while the ingress stayed at around 800 Mbit/s.

Even after waiting twice as long as it should take to migrate the entire VM disk (given the ingress rate), the overall migration was only at around 25%.

I also realized that during a live migration you should NOT stop the VM, even once you see that the migration makes no sense because the VM is SO SLOW that it's practically down: the migration will then fail.

Overall, I'd recommend staying away from live migration in its current state.
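The numbers in this report line up: 800 Mbit/s is exactly 100 MB/s, which matches the progress seen while the guest was idle. One plausible reading, and it is only an assumption about how the live import works, is that guest reads are fetched from ESXi over the same link, so every MB/s the guest reads is taken away from the background copy. A minimal model of that (the 100 GB remaining size is arbitrary):

```python
def mbit_to_mbyte(mbit_per_s: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbit_per_s / 8


def background_copy_rate(link_mbit_per_s: float, guest_read_mb_per_s: float) -> float:
    """Assumed model: the copy gets whatever link capacity guest reads leave over (MB/s)."""
    return max(mbit_to_mbyte(link_mbit_per_s) - guest_read_mb_per_s, 0.0)


def eta_hours(remaining_gb: float, rate_mb_per_s: float) -> float:
    """Hours to copy remaining_gb at rate_mb_per_s (decimal units); inf if stalled."""
    if rate_mb_per_s <= 0:
        return float("inf")
    return remaining_gb * 1000 / rate_mb_per_s / 3600


link = 800  # Mbit/s, the ingress observed on the Proxmox side
for guest_reads in (0, 50, 90):
    rate = background_copy_rate(link, guest_reads)
    print(f"guest reads {guest_reads} MB/s -> copy {rate:.0f} MB/s, "
          f"100 GB in {eta_hours(100, rate):.1f} h")
```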


Yup, it's slow. That's why I recommend not even considering it for anything under 150 GB, and even then it's generally better to build a new machine and sync the data between guests if you have a large VM. I used it as an excuse to do an OS upgrade for some large multi-TB VMs. If you do run it, you might even give the migrating VM extra memory during the migration so it can cache more. It's also critical that you get the VirtIO drivers installed prior to the migration, as running vmxnet3 is extremely slow in Proxmox. Definitely don't do it for anything needing more than mild I/O; a possible candidate is an infrequently accessed archive server that you need to keep online.

When I tested, the speed was closer to 50-75% of doing the migration with the VM off, but I was careful to ensure everything was on layer 2, and Proxmox had 4x25GbE to talk layer 2 to VMware.


Or use this method: https://pve.proxmox.com/wiki/Migrate_to_Proxmox_VE#Attach_Disk_&_Move_Disk_(minimal_downtime)

The basic idea is that you have the *.vmdk files on a network share seen by both ESXi and Proxmox VE. You still need to create a new VM in Proxmox VE; afterwards, you shut down the VM on ESXi, attach the .vmdk files to the Proxmox VE VM and then launch the new VM. After launch, you can move the disk to your actual target storage. You will still have a short downtime, but you don't have to wait until the transfer of the VM data is completed, since the actual transfer to your final VM storage is done while the new VM is already running on Proxmox VE.
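The attach-then-move steps can be sketched as a short list of `qm` invocations. This hypothetical helper only builds the commands and runs nothing. The VMID, storage names, bus (`scsi0`) and VMDK file name are placeholders, and it assumes the share is configured as a directory-type Proxmox VE storage with the VMDK under its `images/<vmid>/` folder. Check the linked wiki section for the exact procedure before running anything.

```python
import shlex


def attach_and_move_plan(vmid: int, share_storage: str, vmdk_name: str,
                         target_storage: str, bus: str = "scsi0",
                         delete_source: bool = False) -> list[list[str]]:
    """Build (but do not run) qm commands for the attach-then-move approach.

    Assumes the VMDK already sits on `share_storage` at images/<vmid>/<vmdk_name>,
    so its volume ID is "<share_storage>:<vmid>/<vmdk_name>".
    """
    volid = f"{share_storage}:{vmid}/{vmdk_name}"
    move = ["qm", "disk", "move", str(vmid), bus, target_storage]
    if delete_source:
        move += ["--delete", "1"]  # remove the source volume after a successful move
    return [
        # 1. attach the VMDK on the share to the new VM (source VM already shut down)
        ["qm", "set", str(vmid), f"--{bus}", volid],
        # 2. boot the VM on Proxmox VE while its disk still lives on the share
        ["qm", "start", str(vmid)],
        # 3. move the disk to the final storage while the VM keeps running
        move,
    ]


for cmd in attach_and_move_plan(120, "esxi-nfs", "web01.vmdk", "local-lvm"):
    print(shlex.join(cmd))
```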