Done. Thanks for reporting this.

New Import Wizard Available for Migrating VMware ESXi Based Virtual Machines

- Thread starter t.lamprecht

Indeed. I have discovered the problem. The VM had a VM Storage Policy applied to it: the VM was encrypted using the VMware VM Encryption Provider. Removing the policy, and thus the encryption, allowed it to be imported. The preparation section of the wiki page should include checking for such policies.

We have some storage policies in place to limit I/O on the VM disks, and these were blocking the import process.

Once we removed them in VMware, the import ran without trouble.

Another question on importing from VMware to Proxmox: can you take snapshots after you import a VMware VM into Proxmox? If so, how? I am trying to do this, but I am getting an error saying that this VM is not configured for snapshots. This is an issue, as it is one of the things that should work "out of the box" when importing VMs.

That depends largely on what storage the imported VM is on. See https://pve.proxmox.com/wiki/Storage for which storage types do and do not support snapshots.
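As a rough illustration of the answer above, here is a minimal sketch of the kind of lookup the wiki table provides. The storage type names follow the identifiers used in /etc/pve/storage.cfg; the table itself is an assumption written from memory of the wiki and covers only a few common types, so check the linked page for your PVE version before relying on it. The function name is hypothetical.

```python
from typing import Optional

# Assumption: simplified from the Proxmox VE storage wiki; verify for your version.
SNAPSHOT_ALWAYS = {"zfspool", "lvmthin", "rbd"}   # snapshots handled by the storage
SNAPSHOT_QCOW2_ONLY = {"dir", "nfs", "cifs"}      # file storages: only qcow2 images
SNAPSHOT_NEVER = {"lvm"}                          # thick LVM


def supports_snapshots(storage_type: str, disk_format: str = "raw") -> Optional[bool]:
    """Return True/False for known storage types, None when the type is not listed."""
    storage_type = storage_type.lower()
    if storage_type in SNAPSHOT_ALWAYS:
        return True
    if storage_type in SNAPSHOT_QCOW2_ONLY:
        return disk_format.lower() == "qcow2"
    if storage_type in SNAPSHOT_NEVER:
        return False
    return None
```

Under this sketch, an imported disk left as vmdk or raw on an NFS or directory storage would not be snapshot-capable until it is converted to qcow2 or moved to a storage that supports snapshots natively.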

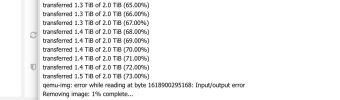

I have seen similar issues, and retries fail at a different spot each time. I basically decided not to try this for anything over 500 GB and instead to rebuild the VM and transfer the data VM to VM, preferably with something that can do an incremental sync. I might do some more testing in the future, but we only have about a dozen large VMs.

8.3 - removed ESXi mount, but it still exists in df -ah

I have removed the ESXi mounted storage under Datacenter -> Storage and confirmed in the GUI that it is no longer shown.

When I access any of the hosts, I can still see it in df -ah:

/dev/fuse 0 0 0 - /run/pve/import/esxi/esxi88124/mnt

/dev/fuse 0 0 0 - /run/pve/import/esxi/esxi88117/mnt

/dev/fuse 0 0 0 - /run/pve/import/esxi/esxi88115/mnt

/dev/fuse 0 0 0 - /run/pve/import/esxi/esxi88116/mnt

/dev/fuse 0 0 0 - /run/pve/import/esxi/esxi88119/mnt

/dev/fuse 0 0 0 - /run/pve/import/esxi/esxi88120/mnt
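To list such leftover mounts on a host, a minimal sketch like the following could be used. It assumes the mount table is read from /proc/mounts (device, mount point, file system type, ...) and that the mount points live under /run/pve/import/esxi/ as in the output above; the function name and the `configured` parameter are hypothetical.

```python
IMPORT_ROOT = "/run/pve/import/esxi/"


def stale_esxi_mounts(mounts_text, configured=()):
    """Return ESXi import mount points whose storage ID is not in `configured`."""
    stale = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 2:
            continue
        mount_point = fields[1]
        if not mount_point.startswith(IMPORT_ROOT):
            continue
        # The path component right after the root is the storage ID, e.g. esxi88124.
        storage_id = mount_point[len(IMPORT_ROOT):].split("/")[0]
        if storage_id not in configured:
            stale.append(mount_point)
    return stale


if __name__ == "__main__":
    with open("/proc/mounts") as handle:
        for path in stale_esxi_mounts(handle.read()):
            print(path)
```

Each printed path is a candidate for a manual `umount` as root. Whether that is the cleanup Proxmox intends is not confirmed in this thread.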

I converted a physical PC using VMware Converter Standalone and imported it into VMware ESXi 6.7. With that ESXi's storage connected to Proxmox 8.2.4, I imported the VM and it worked great. Thanks.

After some trial and error with vmfs6-tools (don't try this on live VMFS datastores, it will disrupt ESXi's access to them) and QEMU "block streaming" as used by the Proxmox ESXi import wizard, I have built a proof of concept (a really bad quality script in bash/jq/curl) that can pull info from the VMware import wizard but attach the VMDK files from a shared NFS storage directly to a newly created Proxmox VM, so the VM can be started as soon as possible after it is shut down in vSphere. The data migration from VMDK to the native format can then be done while the VM is already live again. For some reason this is much faster than QEMU block streaming...

The post is in German, but I guess if someone wants to build on it, they could:

https://forum.proxmox.com/threads/performance-esxi-importer.146905/post-736000

FIXMEs are:

- getting parameters from command line or maybe a file list

- automating the move to shared NFS store and shutting down the VM in vSphere

- automating import/conversion of disks to native format

- Cleanup of vmdks after importing vmdk to native disk format

- Fix network mapping - currently I need to manually assign the correct VLAN tag to the target VM

- Fixup of jq code, maybe reimplement the whole thing in Python?
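For anyone picking up the FIXMEs, the attach-first, convert-later flow could be sketched roughly as below. This is not the linked script. It only builds the command lines, and it assumes that the VMDK descriptor already sits in the VM's image directory on the shared NFS storage, that the storage IDs shown are placeholders, and that `qm set` and `qm disk move` behave as on a recent PVE 8 (check `qm help` on your host). The function name is hypothetical.

```python
def attach_then_convert(vmid, nfs_storage, vmdk_name, target_storage, disk="scsi0"):
    """Build (not run) the qm commands for an attach-first migration.

    Assumption: the volume ID form '<storage>:<vmid>/<file>.vmdk' matches where
    the VMDK descriptor was placed on the NFS storage.
    """
    vmid = str(vmid)
    volume = f"{nfs_storage}:{vmid}/{vmdk_name}"
    return [
        # 1. Attach the existing VMDK to the freshly created VM.
        ["qm", "set", vmid, f"--{disk}", volume],
        # 2. Boot right away; the guest runs off the VMDK on NFS.
        ["qm", "start", vmid],
        # 3. Move the disk to native storage while the VM is live.
        ["qm", "disk", "move", vmid, disk, target_storage, "--delete", "1"],
    ]


if __name__ == "__main__":
    for cmd in attach_then_convert(120, "nfs-migrate", "web01.vmdk", "local-zfs"):
        print(" ".join(cmd))
```

Printing the commands instead of executing them keeps the sketch safe to run anywhere; passing each list to `subprocess.run(cmd, check=True)` on a PVE node would be the next step.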

Just brainstorming:

If someone were able to get ATS (Atomic Test and Set) semantics into "vmfs6-tools" so we could access live VMFS6 datastores from SAN, and combine its read-only access with an overlay-fs for writes, it would be even nicer, because we SAN users wouldn't need to conjure up a shared NFS datastore and copy each VM's disk volume twice to import it.

This is all because QEMU block streaming seems to be slow and the fuse-esxi driver is kind of slow and/or unstable for big amounts of data. Of course there might be other ways around the issues. Why does a bootup with QEMU block streaming take 6 minutes when just attaching the VMDKs directly has the VM booting in 40 seconds?

Maybe one could also just spin up a VM in vSphere and attach the VMDKs to be imported there. That way they could be streamed to Proxmox without going through the rate-limited vSphere API access that the esxi-importer uses.

I can imagine having a "proxy VM" in vSphere serving up directly attached VMDKs via QEMU NBD (using raw format as the source). That way I could work around the issues with vmfs6-tools and wouldn't need to copy the data twice. Maybe I'll try that some time...
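The proxy VM idea is untested in this thread, but the two halves could look roughly like the sketch below. It assumes the VMDK shows up inside the proxy VM as a plain block device (for example /dev/sdb), that qemu-nbd and qemu-img are installed on the respective sides, and that the host name, port and paths are placeholders. The function names are hypothetical, and the commands are only built, not executed.

```python
def nbd_export_command(device, port=10809):
    """Command for the proxy VM: export an attached disk read-only over NBD."""
    return [
        "qemu-nbd",
        "--read-only",      # never write to the source disk
        "--persistent",     # keep serving after a client disconnects
        "--format=raw",     # the attached disk is seen as a raw block device
        "--port", str(port),
        device,
    ]


def nbd_pull_command(proxy_host, out_path, port=10809, out_format="qcow2"):
    """Command for the Proxmox side: copy the NBD export into a local image."""
    return [
        "qemu-img", "convert", "-p",
        "-f", "raw",
        "-O", out_format,
        f"nbd://{proxy_host}:{port}",
        out_path,
    ]


if __name__ == "__main__":
    print(" ".join(nbd_export_command("/dev/sdb")))
    print(" ".join(nbd_pull_command("proxy-vm.example", "/var/tmp/disk0.qcow2")))
```

The data would cross the network once, from the proxy VM straight to Proxmox, which is the point of the idea. Plain NBD is unencrypted, so this only makes sense on a trusted migration network.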

Sounds interesting. What I have been using for manual imports is sshfs, mounting the filesystem over SSH from Proxmox to a VMware server, and it works surprisingly well. You might want to give that a try, as it avoids the step of having to move to NFS and gives Proxmox read-write access to the VMFS filesystem (local or SAN) on VMware.

Like many places, we're trying to find an exit from VMware. I've been trying to import a Linux VM from ESXi, but it appears unable to find its disk when it boots. The scenario is:

The source VM is running Rocky Linux 8.

It has no snapshots & is powered off.

Importing via the Proxmox GUI and importing an OVF file generated by ovftool both fail the same way, with the disk not found during boot.

Apologies if this has been asked before but I wasn't able to find any mention. Perhaps I'm missing something very basic here?

Have you tried attaching the boot disk as a SATA or IDE disk?

What was the controller type in VMware? (See the SCSI controller under the edit hardware settings, or what scsi0.virtualDev is set to in the VM's .vmx file.)

What that was determines which controller you have to set Proxmox to use, unless you preloaded the right driver into the boot kernel.

For example, if Proxmox is set to VirtIO SCSI, you might be able to get it to boot by changing it to VMware PVSCSI in Proxmox. That will give you slower performance, as its emulation of VMware's hardware isn't as good, but it makes migration easier.

Another option, depending on the OS: you can typically pick the rescue kernel from the GRUB boot menu (at least with a lot of RH-derived versions). That kernel has more drivers built in and will likely work. If it does, you can then run "dnf reinstall kernel", which will reinstall the kernel with the proper disk driver.
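To check the source controller without opening the vSphere UI, a minimal sketch like this reads scsi0.virtualDev from the text of a .vmx file. It assumes the usual `key = "value"` line format of .vmx files. Only the PVSCSI case mentioned above is mapped to a Proxmox SCSI controller setting (`pvscsi`); for every other value it returns None rather than guess. The function names are hypothetical.

```python
import re

# Matches lines such as: scsi0.virtualDev = "pvscsi"
_VMX_LINE = re.compile(r'^\s*([^\s=#]+)\s*=\s*"(.*)"\s*$')


def read_vmx(text):
    """Parse .vmx text into a dict with lower-cased keys."""
    settings = {}
    for line in text.splitlines():
        match = _VMX_LINE.match(line)
        if match:
            settings[match.group(1).lower()] = match.group(2)
    return settings


def suggest_scsihw(vmx_text):
    """Return a Proxmox scsihw value matching the source controller, if known."""
    virtual_dev = read_vmx(vmx_text).get("scsi0.virtualdev", "").lower()
    if virtual_dev == "pvscsi":
        return "pvscsi"
    return None  # unknown mapping: try SATA/IDE or a rescue kernel instead
```

A None result does not mean the VM cannot boot, only that this sketch has no like-for-like controller to suggest.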

I have a licensed ESXi 7.0.1 host and Proxmox 8.3.0. I can SSH directly into ESXi from Proxmox, but when I try to add it via the GUI I get a timeout error. The same problem has plagued me when adding an NFS share, yet I can add the NFS share manually via the CLI. Any ideas?

They are both on the same LAN, with no firewall restrictions between them.
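Since SSH working only proves that port 22 is reachable, one quick thing to rule out is the port the import storage talks to. The sketch below is a plain TCP reachability check run from the Proxmox node. It assumes the ESXi API is reached over HTTPS on port 443, which is not stated in this thread, and the host name is a placeholder. The function name is hypothetical.

```python
import socket


def can_connect(host, port=443, timeout=5.0):
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False


if __name__ == "__main__":
    print(can_connect("esxi.example.lan"))
```

A False result here would point at the network path or name resolution rather than at Proxmox itself; a True result means the timeout has to be looked for elsewhere.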

I'm doing some testing, migrating VMs from ESXi 8.0 Update 3 to PVE 8.3.3. It failed on my first test VM (the migration worked, but there was no boot device), but worked on my second because I'd only installed VirtIO on the second VM. I deleted the migrated copy of my first VM from PVE, but now I can't find it listed as a migration source. The VM still exists in the VMware environment. Is a VM removed from the list once it's migrated? Or have I done something wrong? How can I migrate the VM again now that I've installed VirtIO? Thanks!