Hi,

I need advice on the best way to connect a 3-node Proxmox cluster to a PowerVault ME5024. The NAS has 2x iSCSI controllers, and each Proxmox node has 2x 10Gb NICs.

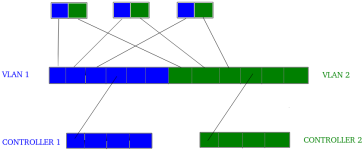

I used to have a 10Gb switch between the NAS and the cluster, but I have now decided to connect the cluster directly to the NAS. So far I have connected each node as NIC1 to Controller_A and NIC2 to Controller_B, so 3 of the 4 ports on each controller are in use. I am planning to use the 4th port on each controller to connect a Proxmox Backup Server as well.

Now I am creating volumes and hosts on the NAS, and I wonder:

1) Should I create 1 host with 3 initiators for the cluster and a 2nd host with 1 initiator for the backup server?

2) Or should I create 3 separate hosts with 1 initiator each, one for each node?

3) Or have I messed it all up and should do it differently, and if so, how?
