How can I see if I use it?

Yes, available in both pve-no-subscription and pve-enterprise.

[SOLVED] LXC Backup randomly hangs at suspend

- Thread starter pa657

I am running lxcfs 2.0.0-pve1, and after rebooting it is worse than before:

mars 08 00:44:40 INFO: first sync finished (532 seconds)

mars 08 00:44:40 INFO: suspend vm

mars 08 05:39:02 INFO: lxc-freeze: freezer.c: do_freeze_thaw: 64 Failed to get new freezer state for /var/lib/lxc:153

mars 08 05:39:02 INFO: lxc-freeze: lxc_freeze.c: main: 84 Failed to freeze /var/lib/lxc:153

mars 08 05:39:37 ERROR: Backup of VM 153 failed - command 'lxc-freeze -n 153' failed: exit code 1

As you can see, lxc-freeze took five hours to give up on freezing the CT. After that, the host went completely south: all the CTs were shut down and I couldn't even SSH into the host. I think the RAM (64 GB!) was totally exhausted, and I had to hard reboot the host.

It was a backup over NFS. The log files were written successfully (for the first backup I mentioned and for all the others, which failed with rsync error code 14). A qemu backup was fine too.

Before lxcfs 2.0.0 the backup hung randomly and I had to kill it, but now the first CT backup fails and there seems to be a big OOM problem or something like that.
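For reference, the time lxc-freeze spent before giving up can be worked out from the two timestamps in the log above (00:44:40 for "suspend vm", 05:39:02 for the first lxc-freeze error). A minimal sketch, assuming both timestamps fall on the same day; `elapsed` is a hypothetical helper name, not part of any Proxmox tool:

```shell
# Sketch: time between two HH:MM:SS timestamps on the same day.
# "elapsed" is an illustrative helper name.
elapsed() {
    printf '%s %s\n' "$1" "$2" | awk '{
        split($1, a, ":"); split($2, b, ":")
        s = (b[1]*3600 + b[2]*60 + b[3]) - (a[1]*3600 + a[2]*60 + a[3])
        printf "%dh %dm %ds\n", int(s/3600), int(s%3600/60), s%60
    }'
}

elapsed 00:44:40 05:39:02   # prints 4h 54m 22s
```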

Please provide more log files (vzdump, host, container), the container configuration, the backup configuration and the complete output of "pveversion -v". If you had an OOM situation, it is possible that the kernel killed the lxc process and that lxc-freeze failed because of that. Without logs, it is impossible to determine what the problem was.

If you want to provide us with even better information, you can try the following:

- start the container (if it is not already running)
- run a manual "lxc-freeze -n <VMID>" (replace <VMID> with the correct ID)
- if lxc-freeze returns immediately or after a few seconds, it worked correctly
- if lxc-freeze does not return, collect the output of "ps faxo f,uid,pid,ppid,pri,ni,vsz,rss,wchan:20,stat,tty,time,comm" and kill the hanging lxc-freeze (with kill or Ctrl+C)
- run a manual "lxc-unfreeze -n <VMID>" (replace <VMID> with the correct ID; this should unfreeze the container in both cases)
- repeat a few times until you trigger a hang, collect the output and report back
- if you trigger a hang, you can also try attaching gdb to the lxcfs process (although it might not be lxcfs that is hanging), as described earlier in this thread. If you want to do that, please install lxcfs-dbg first

Thanks in advance!
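The manual test described above could be wrapped in a small helper along these lines. This is only a sketch, not an official Proxmox tool: the function name `freeze_test`, the 60-second default and the output file name are assumptions, and `timeout` is the GNU coreutils command. The `ps` field list is the one quoted above.

```shell
# Sketch: freeze a container with a time limit, save the process list if the
# freeze fails or does not return in time, then unfreeze in both cases.
# freeze_test, the 60 s default and the output file name are illustrative.
freeze_test() {
    vmid="$1"
    limit="${2:-60}"
    if timeout "$limit" lxc-freeze -n "$vmid"; then
        echo "lxc-freeze -n $vmid returned normally"
    else
        echo "lxc-freeze -n $vmid failed or hung; saving process list"
        ps faxo f,uid,pid,ppid,pri,ni,vsz,rss,wchan:20,stat,tty,time,comm \
            > "freeze-hang-$vmid.txt"
    fi
    # unfreezing is wanted whether or not the freeze succeeded
    lxc-unfreeze -n "$vmid"
}
```

Calling `freeze_test 153` a few times in a row mirrors the "repeat until you trigger a hang" step; the saved file is what would be posted back to the thread.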

The new lxcfs doesn't do the job for me either; however, the container converted from OpenVZ to LXC has no problems with the backup.

The other one is a newly created LXC container, so I will create some new containers and try to back them up again!

With the STOP mode, the backup works on that LXC container!

Please post the debugging output I asked for above - without logs and configuration files it is impossible to get to the bottom of any problems you experience.

In my case it is still the same. I can kill lxc-freeze and unfreeze, but the blocking process remains in D state. It's a 'simple' PHP script that manipulates files/images and operates in an area mounted with lxc.mount.entry.

The process is sleeping in the kernel function nfs_wait_on_request (wchan).

The container uses lxc.mount.entry: /mnt/.... home/.... none bind,create=dir,optional 0 0

Update:

I found the file that is causing the problem. It freezes outside of LXC too! A simple test, ls <path>, hangs in stat:

access("<path>", F_OK) = 0

stat("<path>",

Any advice on how to debug this?
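One possible starting point, sketched here under assumptions rather than taken from this thread, is to list the processes in uninterruptible sleep (state D) together with the kernel function they are waiting in. `d_state` is a hypothetical helper name; the `ps` output fields (`pid`, `stat`, `wchan`, `comm`) are standard procps ones.

```shell
# Sketch: keep the header line and every process whose state starts with D
# (uninterruptible sleep). "d_state" is an illustrative helper name.
d_state() {
    awk 'NR == 1 || $2 ~ /^D/'
}

# usage: ps -eo pid,stat,wchan:32,comm | d_state
```

A process stuck on the NFS file would be expected to show up with a wchan such as nfs_wait_on_request, as reported above.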

Update #2:

My problem may be related to something else. The symptoms are the same: the backup is failing. The real problem with the process in D state happened 12 hours before the backup. There is some kernel error output in /var/log/messages, see attachment.

Update #3:

Someone reported the same trouble: https://forum.proxmox.com/threads/call-traces-in-syslog-of-proxmox-4-1.26282/

# pveversion -v

proxmox-ve: 4.1-41 (running kernel: 4.2.8-1-pve)

pve-manager: 4.1-22 (running version: 4.1-22/aca130cf)

pve-kernel-4.2.6-1-pve: 4.2.6-36

pve-kernel-4.2.8-1-pve: 4.2.8-41

pve-kernel-4.2.3-2-pve: 4.2.3-22

lvm2: 2.02.116-pve2

corosync-pve: 2.3.5-2

libqb0: 1.0-1

pve-cluster: 4.0-36

qemu-server: 4.0-64

pve-firmware: 1.1-7

libpve-common-perl: 4.0-54

libpve-access-control: 4.0-13

libpve-storage-perl: 4.0-45

pve-libspice-server1: 0.12.5-2

vncterm: 1.2-1

pve-qemu-kvm: 2.5-9

pve-container: 1.0-52

pve-firewall: 2.0-22

pve-ha-manager: 1.0-25

ksm-control-daemon: 1.2-1

glusterfs-client: 3.5.2-2+deb8u1

lxc-pve: 1.1.5-7

lxcfs: 2.0.0-pve2

cgmanager: 0.39-pve1

criu: 1.6.0-1

Are you (bind) mounting an NFS share into the container there? Are you sure that the NFS connection is not the cause of the issue? NFS shares mounted with the "hard" option block at the kernel level when the server cannot be reached, and processes waiting on them cannot be interrupted except by SIGKILL (the "intr" option, which used to make such hangs interruptible, is ignored by current kernels). Depending on your use case, consider switching to "soft", if applicable.

Could you maybe post the complete NFS export and mount options as well as the container configuration?
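The mount options actually in effect can be read from /proc/mounts on the host. A minimal sketch, assuming the share is mounted with filesystem type nfs or nfs4; `nfs_mounts` is a hypothetical helper name:

```shell
# Sketch: print mount point and options for every NFS mount found in
# /proc/mounts-style input (fields: device, mount point, type, options, ...).
# "nfs_mounts" is an illustrative helper name.
nfs_mounts() {
    awk '$3 == "nfs" || $3 == "nfs4" { print $2, $4 }'
}

# usage: nfs_mounts < /proc/mounts
```

The option string printed for each mount should contain either "hard" or "soft", which is the part relevant to the question above.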

Hi all,

I am running several LXC containers stored on a SAN disk through an LVM group.

I quickly ran into backup problems, which I solved using the lxcfs_2.0.0-pve1_amd64.deb package: two weeks ago, after I found this thread, backups went OK using a full "stop"/"start" of the containers.

For the second time this week, I am experiencing a weird problem: my containers are working, but I cannot enter them (neither through ssh nor "pct enter").

When I try to log in using ssh, I can enter my password and then get a motd prompt such as "blahlbha... Last login: Fri Apr 8 16:44:52 2016 from xx.yy.zz.ww", then ... nothing more, forever. No shell prompt.

"pct enter" also hangs forever and cannot be interrupted (C-c).

Looking at /var/log/messages, I see these strange lines (this was 14 hours ago):

Apr 14 03:43:37 sd-88336 kernel: [213527.639224] [<ffffffff812fa8d3>] request_wait_answer+0x163/0x280

Apr 14 03:43:37 sd-88336 kernel: [213527.639235] [<ffffffff81305440>] fuse_direct_read_iter+0x40/0x60

Apr 14 03:43:37 sd-88336 kernel: [213527.639395] ffff880fe5233040 fffffffffffffe00 ffff880f5719fc08 ffffffff81804257

Apr 14 03:43:37 sd-88336 kernel: [213527.639405] [<ffffffff812faab7>] fuse_request_send+0x27/0x30

Apr 14 03:43:37 sd-88336 kernel: [213527.639412] [<ffffffff811fd0ea>] vfs_read+0x8a/0x130

Apr 14 03:43:37 sd-88336 kernel: [213527.639562] if_eth0 D ffff880feebd6a00 0 26114 36356 0x20020104

Apr 14 03:43:37 sd-88336 kernel: [213527.639571] [<ffffffff812fa8d3>] request_wait_answer+0x163/0x280

Apr 14 03:43:37 sd-88336 kernel: [213527.639579] [<ffffffff81305440>] fuse_direct_read_iter+0x40/0x60

Apr 14 03:43:37 sd-88336 kernel: [213527.639736] ffff880fe5233040 fffffffffffffe00 ffff880f6487bc08 ffffffff81804257

Apr 14 03:43:37 sd-88336 kernel: [213527.639745] [<ffffffff812faab7>] fuse_request_send+0x27/0x30

Apr 14 03:43:37 sd-88336 kernel: [213527.639752] [<ffffffff811fd0ea>] vfs_read+0x8a/0x130

Apr 14 03:43:37 sd-88336 kernel: [213527.639901] if_eth0 D ffff880feeb56a00 0 26580 42681 0x00000104

Apr 14 03:43:37 sd-88336 kernel: [213527.639910] [<ffffffff812fa8d3>] request_wait_answer+0x163/0x280

Apr 14 03:43:37 sd-88336 kernel: [213527.639918] [<ffffffff81305440>] fuse_direct_read_iter+0x40/0x60

Apr 14 03:43:37 sd-88336 kernel: [213527.640075] ffff880fe5233040 fffffffffffffe00 ffff880f64b83c08 ffffffff81804257

Apr 14 03:43:37 sd-88336 kernel: [213527.640084] [<ffffffff812faab7>] fuse_request_send+0x27/0x30

Apr 14 03:43:37 sd-88336 kernel: [213527.640091] [<ffffffff811fd0ea>] vfs_read+0x8a/0x130

Apr 14 03:43:37 sd-88336 kernel: [213527.640240] if_eth0 D ffff880feead6a00 0 26583 42687 0x00000104

Apr 14 03:43:37 sd-88336 kernel: [213527.640249] [<ffffffff812fa8d3>] request_wait_answer+0x163/0x280

Apr 14 03:43:37 sd-88336 kernel: [213527.640257] [<ffffffff81305440>] fuse_direct_read_iter+0x40/0x60

Apr 14 03:45:37 sd-88336 kernel: [213647.733370] [<ffffffff812fa8d3>] request_wait_answer+0x163/0x280

Apr 14 03:45:37 sd-88336 kernel: [213647.733376] [<ffffffff812faa80>] __fuse_request_send+0x90/0xa0

Apr 14 03:45:37 sd-88336 kernel: [213647.733379] [<ffffffff81305198>] fuse_direct_io+0x3a8/0x5b0

# pveversion -v

proxmox-ve: 4.1-34 (running kernel: 4.2.6-1-pve)

pve-manager: 4.1-5 (running version: 4.1-5/f910ef5c)

pve-kernel-4.2.6-1-pve: 4.2.6-34

lvm2: 2.02.116-pve2

corosync-pve: 2.3.5-2

libqb0: 0.17.2-1

pve-cluster: 4.0-31

qemu-server: 4.0-49

pve-firmware: 1.1-7

libpve-common-perl: 4.0-45

libpve-access-control: 4.0-11

libpve-storage-perl: 4.0-38

pve-libspice-server1: 0.12.5-2

vncterm: 1.2-1

pve-qemu-kvm: 2.5-3

pve-container: 1.0-39

pve-firewall: 2.0-15

pve-ha-manager: 1.0-19

ksm-control-daemon: 1.2-1

glusterfs-client: 3.5.2-2+deb8u1

lxc-pve: 1.1.5-6

lxcfs: 2.0.0-pve1

cgmanager: 0.39-pve1

criu: 1.6.0-1

# uname -a

Linux sd-88336 4.2.6-1-pve #1 SMP Thu Jan 21 09:34:06 CET 2016 x86_64 GNU/Linux

The first time I had this problem, a "reboot" on the host could not complete. The server took forever to reboot, and subsequent ssh attempts would say "reboot in progress" and throw me out. I had to do a physical reboot. I think this is what I will have to do today.

The first thing I want to do after the reboot is upgrade lxcfs from 2.0.0-pve1 to 2.0.0-pve2. Am I right?

Any clue regarding the whole problem? I am not a proficient sysadmin, and I am OK to follow your instructions in order to help with debugging.

Regards,

Laurent

If you don't have other FUSE filesystems in the container, lxcfs is probably at fault here - if that was still on lxcfs 2.0.0-pve1, you might have run into the last remains of the fork / user NS bug in lxcfs.

Some of the packages in your "pveversion -v" output are outdated; I would advise you to update to a current version.
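To check a single package before and after the update, its version can be pulled out of the "pveversion -v" output. A minimal sketch; `pkg_version` is a hypothetical helper name:

```shell
# Sketch: print the version of one package from "pveversion -v"-style input,
# where each line looks like "name: version". "pkg_version" is illustrative.
pkg_version() {
    awk -F': ' -v pkg="$1" '$1 == pkg { print $2 }'
}

# usage: pveversion -v | pkg_version lxcfs
```

Fed with the output posted above, this would print 2.0.0-pve1 for lxcfs.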

If you experience such an issue again (after an upgrade of all the PVE packages and a reboot), you could post the complete output of "ps faxl", any system logs, the config of the containers experiencing this issue and a gdb backtrace of the lxcfs processes (how to get that is described in this thread).

Same issue here. It seems to hang on a random container; it was 116 before. After I rebooted the complete host, it backed up all VMs successfully; then a few days later, today and yesterday, it is hanging on 114. Very strange. I then have to forcibly stop the backup, and then VM 114 runs fine again. It looks like it gets stuck with this in the logs:

Aug 14 11:07:40 vz-cpt-1 pvedaemon[7625]: <root@pam> successful auth for user 'root@pam'

Aug 14 11:07:40 vz-cpt-1 pvedaemon[13978]: <root@pam> successful auth for user 'root@pam'

Aug 14 11:08:48 vz-cpt-1 pvedaemon[7625]: <root@pam> successful auth for user 'root@pam'

Aug 14 11:09:00 vz-cpt-1 pvedaemon[7625]: <root@pam> successful auth for user 'root@pam'

Aug 14 11:09:00 vz-cpt-1 pvedaemon[7625]: <root@pam> successful auth for user 'root@pam'

Aug 14 11:17:01 vz-cpt-1 CRON[3748]: (root) CMD ( cd / && run-parts --report /etc/cron.hourly)

Aug 14 11:31:55 vz-cpt-1 pvedaemon[744]: <root@pam> successful auth for user 'root@pam'

Aug 14 11:32:09 vz-cpt-1 vzdump[1852]: ERROR: Backup of VM 114 failed - command '/usr/bin/lxc-freeze -n 114' failed: interrupted by signal

and with this in backup log:

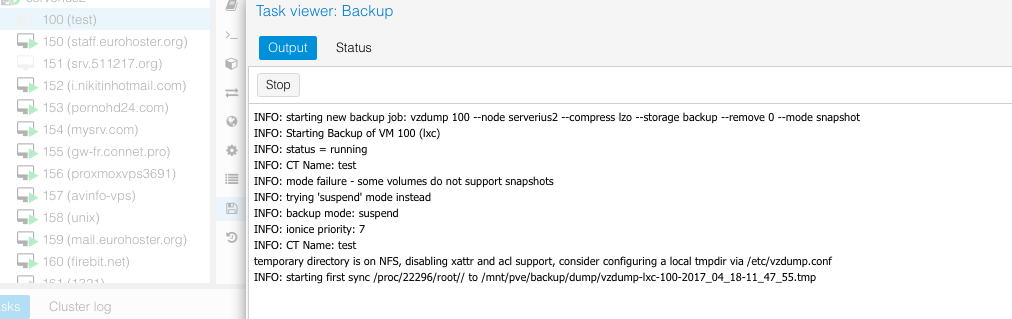

INFO: backup mode: snapshot

INFO: ionice priority: 7

INFO: create storage snapshot 'vzdump'

Could someone test this? I may have found my problem. I noticed it froze again this morning. Then I checked that particular VPS server's /etc/rc.sysinit file and noticed that OS updates had been applied, because this line was uncommented again:

/sbin/start_udev

I commented it out again:

#/sbin/start_udev

and stopped and started the VM via pct and retested.

Funnily enough, it worked.

Is this just a fluke, or what do you guys think?

vzdump on LXC uses 99% of the I/O when finishing the process.

Only SSDs are in use. The archive is created on NFS.

This process uses all the I/O: task UPID:host:00005F32:021F48E5:58F533B9:vzdump::root@pam:

Set a bandwidth limit for vzdump if you don't want it to use all the available I/O bandwidth.

It does not help. The bandwidth limit applies to rsync only; the cleanup of the temporary directory runs without any limit.

If your tmpdir storage cannot handle deleting the tmpdir, you either need to use a mode which does not require a tmpdir (stop/snapshot) or put your tmpdir somewhere else.
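The two alternatives could look roughly like this on the command line. This is a sketch under assumptions: `backup_ct` is a hypothetical wrapper name, and the limit of 51200 (KB/s) and the default path /var/tmp are made-up example values; `--mode`, `--bwlimit` and `--tmpdir` are vzdump options.

```shell
# Sketch: back up one container either in a mode that needs no temporary
# copy, or in suspend mode with the temporary directory moved elsewhere.
# backup_ct, 51200 KB/s and /var/tmp are illustrative assumptions.
backup_ct() {
    vmid="$1"
    mode="${2:-snapshot}"
    if [ "$mode" = "suspend" ]; then
        # suspend mode copies to a tmpdir first; keep it off the busy storage
        vzdump "$vmid" --mode suspend --bwlimit 51200 --tmpdir "${3:-/var/tmp}"
    else
        vzdump "$vmid" --mode "$mode" --bwlimit 51200
    fi
}
```

For example, `backup_ct 114` would run a snapshot-mode backup, while `backup_ct 114 suspend` keeps suspend mode but places the temporary directory on the given path.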

Snapshot mode does not work on LXC.

If your storage supports snapshots (e.g., LVM-Thin, ZFS, or Ceph), it does.