Hi spirit,

Sorry, it's not working reliably. I made one test and it failed:

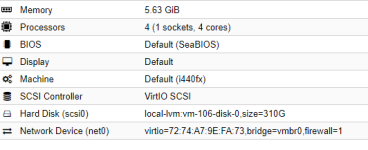

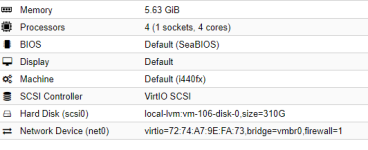

VM config:

```
boot: c
bootdisk: scsi0
cores: 2
hotplug: disk,network,usb
keyboard: de
numa: 1
memory: 8192
name: vm01-dev
net0: virtio=02:76:FC:6D:7F:08,bridge=vmbr0,tag=123
onboot: 1
ostype: l26
scsi0: local_vm_storage:623/vm-623-disk-1.qcow2,discard=on,size=25G
scsi1: local_vm_storage:623/vm-623-disk-2.qcow2,iothread=1,size=25G
scsihw: virtio-scsi-single
serial0: socket
smbios1: uuid=4a9fe3d2-df51-4163-b7bf-eb4657679379
sockets: 1
```

Log on the sending host (pve08):

```
Jun 3 20:34:35 pve08 qm[2928015]: <root@pam> starting task UPID:pve08:002CAD90:4B357DB7:5B1434BB:qmigrate:623:root@pam:
Jun 3 20:44:01 pve08 qm[2930469]: VM 623 qmp command failed - VM 623 qmp command 'change' failed - unable to connect to VM 623 qmp socket - timeout after 599 retries
Jun 3 20:44:54 pve08 qm[2928016]: VM 623 qmp command failed - interrupted by signal
Jun 3 20:45:43 pve08 qm[2928016]: VM 623 qmp command failed - VM 623 qmp command 'query-migrate' failed - interrupted by signal
Jun 3 20:45:46 pve08 qm[2928015]: <root@pam> end task UPID:pve08:002CAD90:4B357DB7:5B1434BB:qmigrate:623:root@pam: got unexpected control message:
Jun 3 20:46:39 pve08 lldpd[3820]: error while receiving frame on tap623i0 (retry: 0): Network is down
Jun 3 20:46:39 pve08 lldpd[3802]: 2018-06-03T20:46:39 [WARN/interfaces] error while receiving frame on tap623i0 (retry: 0): Network is down
Jun 3 20:46:39 pve08 qm[2931245]: VM 623 qmp command failed - VM 623 qmp command 'change' failed - unable to connect to VM 623 qmp socket - Connection refused
Jun 3 20:46:39 pve08 qm[2931203]: VM 623 qmp command failed - VM 623 qmp command 'change' failed - unable to connect to VM 623 qmp socket - Connection refused
```

On the target (pve06):

```
Jun 3 20:34:37 pve06 qm[1414930]: <root@pam> starting task UPID:pve06:00159717:01D7AE68:5B1434BD:qmstart:623:root@pam:
Jun 3 20:34:37 pve06 qm[1414935]: start VM 623: UPID:pve06:00159717:01D7AE68:5B1434BD:qmstart:623:root@pam:
Jun 3 20:34:37 pve06 systemd[1]: Started 623.scope.
Jun 3 20:34:37 pve06 systemd-udevd[1414959]: Could not generate persistent MAC address for tap623i0: No such file or directory
Jun 3 20:34:38 pve06 kernel: [309113.136860] device tap623i0 entered promiscuous mode
Jun 3 20:34:38 pve06 ovs-vsctl: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl del-port tap623i0
Jun 3 20:34:38 pve06 ovs-vsctl: ovs|00002|db_ctl_base|ERR|no port named tap623i0
Jun 3 20:34:38 pve06 ovs-vsctl: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl del-port fwln623i0
Jun 3 20:34:38 pve06 ovs-vsctl: ovs|00002|db_ctl_base|ERR|no port named fwln623i0
Jun 3 20:34:38 pve06 ovs-vsctl: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl add-port vmbr0 tap623i0 tag=123
Jun 3 20:34:38 pve06 qm[1414930]: <root@pam> end task UPID:pve06:00159717:01D7AE68:5B1434BD:qmstart:623:root@pam: OK
Jun 3 20:46:38 pve06 ovs-vsctl: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl del-port fwln623i0
Jun 3 20:46:38 pve06 ovs-vsctl: ovs|00002|db_ctl_base|ERR|no port named fwln623i0
Jun 3 20:46:38 pve06 ovs-vsctl: ovs|00001|vsctl|INFO|Called as /usr/bin/ovs-vsctl del-port tap623i0
Jun 3 20:46:38 pve06 lldpd[3748]: error while receiving frame on tap623i0 (retry: 0): Network is down
Jun 3 20:46:38 pve06 lldpd[3451]: 2018-06-03T20:46:38 [WARN/interfaces] error while receiving frame on tap623i0 (retry: 0): Network is down
Jun 3 20:46:39 pve06 lldpd[3748]: unable to send packet on real device for tap623i0: No such device or address
Jun 3 20:46:39 pve06 lldpd[3451]: 2018-06-03T20:46:39 [WARN/lldp] unable to send packet on real device for tap623i0: No such device or address
```

I pressed Ctrl-C, but had to kill the VM process with -9.

Both hosts are up to date, but the source host hasn't been rebooted for 5 months (it should be rebooted tomorrow morning):

```
pveversion -v
proxmox-ve: 5.2-2 (running kernel: 4.13.13-3-pve)
pve-manager: 5.2-1 (running version: 5.2-1/0fcd7879)
pve-kernel-4.15: 5.2-1
pve-kernel-4.13: 5.1-44
pve-kernel-4.15.17-1-pve: 4.15.17-9
pve-kernel-4.13.16-2-pve: 4.13.16-48
pve-kernel-4.13.16-1-pve: 4.13.16-46
pve-kernel-4.13.13-3-pve: 4.13.13-34
ceph: 12.2.5-pve1
corosync: 2.4.2-pve5
criu: 2.11.1-1~bpo90
glusterfs-client: 3.8.8-1
ksm-control-daemon: 1.2-2
libjs-extjs: 6.0.1-2
libpve-access-control: 5.0-8
libpve-apiclient-perl: 2.0-4
libpve-common-perl: 5.0-31
libpve-guest-common-perl: 2.0-16
libpve-http-server-perl: 2.0-8
libpve-storage-perl: 5.0-23
libqb0: 1.0.1-1
lvm2: 2.02.168-pve6
lxc-pve: 3.0.0-3
lxcfs: 3.0.0-1
novnc-pve: 0.6-4
openvswitch-switch: 2.7.0-2
proxmox-widget-toolkit: 1.0-18
pve-cluster: 5.0-27
pve-container: 2.0-23
pve-docs: 5.2-4
pve-firewall: 3.0-9
pve-firmware: 2.0-4
pve-ha-manager: 2.0-5
pve-i18n: 1.0-5
pve-libspice-server1: 0.12.8-3
pve-qemu-kvm: 2.11.1-5
pve-xtermjs: 1.0-5
qemu-server: 5.0-26
smartmontools: 6.5+svn4324-1
spiceterm: 3.0-5
vncterm: 1.5-3
zfsutils-linux: 0.7.8-pve1~bpo9
```

The disk data was transferred (target):

```
ls -lsa /data/images/623/
total 30436104
4 drwxr----- 2 root root 4096 Jun 3 20:34 .
4 drwxr-xr-x 27 root root 4096 Jun 3 20:34 ..
6872944 -rw-r----- 1 root root 26847870976 Jun 3 20:40 vm-623-disk-1.qcow2
23563152 -rw-r----- 1 root root 26847870976 Jun 3 20:38 vm-623-disk-2.qcow2
```

Udo
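To judge how much of each disk actually arrived on the target, the allocated size (the first column of `ls -s`) can be compared with the apparent file size, since qcow2 images are usually sparse. The following is a minimal Python sketch, not a Proxmox tool; the function name `disk_usage_report` is hypothetical, and it relies only on `os.stat`, where Linux reports `st_blocks` in 512-byte units.

```python
import os
import sys


def disk_usage_report(directory: str) -> list[tuple[str, int, int]]:
    """Return (name, apparent_bytes, allocated_bytes) for each regular file.

    Hypothetical helper: allocated_bytes is st_blocks * 512, i.e. the space
    really written to disk, so sparse images show how much data was copied.
    """
    report = []
    for entry in sorted(os.scandir(directory), key=lambda e: e.name):
        if entry.is_file(follow_symlinks=False):
            st = entry.stat(follow_symlinks=False)
            report.append((entry.name, st.st_size, st.st_blocks * 512))
    return report


if __name__ == "__main__":
    # Directory to inspect, e.g. /data/images/623 on the target (defaults to cwd).
    path = sys.argv[1] if len(sys.argv) > 1 else "."
    for name, apparent, allocated in disk_usage_report(path):
        pct = 100 * allocated / apparent if apparent else 0.0
        print(f"{name}: {allocated / 2**30:.2f} GiB of "
              f"{apparent / 2**30:.2f} GiB allocated ({pct:.0f}%)")
```

Working from the listing above by hand (GNU `ls -s` counts 1 KiB blocks by default), vm-623-disk-1.qcow2 has about 6.55 GiB and vm-623-disk-2.qcow2 about 22.47 GiB allocated, each out of an apparent size of about 25.00 GiB. The block counts also add up to the reported total: 4 + 4 + 6872944 + 23563152 = 30436104.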

Can you send the migration task log? I don't see anything related to the migration itself in your logs.
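The `UPID:...` strings in the logs above identify the tasks, which helps when matching a task to its log lines. The sketch below assumes the layout `UPID:node:pid:pstart:starttime:type:id:user:` with the three numeric fields hex-encoded. That layout is an assumption inferred from these logs, not a documented API, and `decode_upid` is a hypothetical helper.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timezone

# Assumed UPID layout: UPID:node:pid:pstart:starttime:type:id:user:
# with pid, pstart and starttime as hexadecimal numbers.
UPID_RE = re.compile(
    r"^UPID:(?P<node>[^:]+):(?P<pid>[0-9A-Fa-f]{8}):(?P<pstart>[0-9A-Fa-f]{8,9}):"
    r"(?P<starttime>[0-9A-Fa-f]{8}):(?P<type>[^:\s]+):(?P<id>[^:\s]*):(?P<user>[^:\s]+):$"
)


@dataclass
class Upid:
    node: str
    pid: int        # PID of the worker process that runs the task
    pstart: int     # process start marker (kept raw)
    starttime: int  # Unix timestamp of the task start
    type: str       # e.g. qmigrate, qmstart
    id: str         # e.g. the VMID
    user: str

    @property
    def started_utc(self) -> datetime:
        return datetime.fromtimestamp(self.starttime, tz=timezone.utc)


def decode_upid(upid: str) -> Upid:
    """Split a UPID string into its fields, decoding the hex numbers."""
    m = UPID_RE.match(upid)
    if m is None:
        raise ValueError(f"not a UPID: {upid!r}")
    return Upid(
        node=m["node"],
        pid=int(m["pid"], 16),
        pstart=int(m["pstart"], 16),
        starttime=int(m["starttime"], 16),
        type=m["type"],
        id=m["id"],
        user=m["user"],
    )


if __name__ == "__main__":
    task = decode_upid("UPID:pve08:002CAD90:4B357DB7:5B1434BB:qmigrate:623:root@pam:")
    print(task.node, task.type, task.id, task.pid, task.started_utc.isoformat())
    # pve08 qmigrate 623 2928016 2018-06-03T18:34:35+00:00
```

Decoded this way, the migration task's worker PID is 2928016, which is the `qm[2928016]` process that logged the interrupted `query-migrate` on the source. Its start time of 18:34:35 UTC corresponds to the 20:34:35 log timestamp if the hosts run on UTC+2, such as CEST (the time zone is an assumption).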

> VM 623 qmp command failed - VM 623 qmp command 'change' failed - unable to connect to VM 623 qmp socket - timeout after 599 retries

The `change` QMP command is used to eject/change the CD-ROM. I don't see any CD-ROM in your VM config, and it's not related to the migration.
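The quoted error comes in two forms: "timeout after 599 retries" and later "Connection refused". Both are consistent with a client that retries a non-blocking UNIX-socket connect while the socket exists but nothing is accepting, and gives up immediately on any other error. The Python sketch below shows that pattern under stated assumptions. Proxmox's own QMP client is Perl. The function `connect_qmp`, its 60 s timeout and its 100 ms delay are illustrative. The example path `/var/run/qemu-server/623.qmp` is also assumed.

```python
import errno
import socket
import time


def connect_qmp(path: str, timeout: float = 60.0, delay: float = 0.1) -> socket.socket:
    """Connect to a UNIX-domain QMP socket (hypothetical helper).

    Retries only while connect() reports a transient error: EAGAIN (on Linux,
    the listener's backlog is full because nobody is accepting) or EINTR.
    Gives up after `timeout` seconds. Any other error, such as ECONNREFUSED
    (socket file left behind, no process listening) or ENOENT (no socket
    file), fails immediately.
    """
    start = time.monotonic()
    attempts = 0
    while True:
        attempts += 1
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.setblocking(False)
        try:
            sock.connect(path)
        except OSError as exc:
            sock.close()
            if exc.errno not in (errno.EAGAIN, errno.EINTR):
                raise ConnectionError(
                    f"unable to connect to QMP socket - {exc.strerror}"
                ) from exc
        else:
            sock.setblocking(True)  # hand back a normal blocking socket
            return sock
        if time.monotonic() - start >= timeout:
            raise TimeoutError(
                f"unable to connect to QMP socket - timeout after {attempts} retries"
            )
        time.sleep(delay)
```

With these illustrative defaults, a process that stays alive but never accepts would exhaust the retries after at most about 600 attempts, the same order as the 599 in the log. After the VM process has been killed and its socket file remains, the same call fails at once with "Connection refused".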

I'll try to reproduce it with the config you posted (I haven't tested with iothread, for example).
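When reproducing with the posted config, it can help to pull out the disk options that differ from a tested setup, such as which disks use `iothread`. The following is a minimal Python sketch. It assumes these parsing rules, inferred from the config shown in this thread: the first `:` separates key and value, disk values are comma-separated, and the first bare item is the volume. `parse_vm_config`, `disk_options` and `iothread_disks` are hypothetical helpers.

```python
import re

# Disk keys as they appear in the posted config (scsi0, scsi1, ...);
# "scsihw" deliberately does not match.
DISK_KEY = re.compile(r"^(ide|sata|scsi|virtio)\d+$")


def parse_vm_config(text: str) -> dict[str, str]:
    """Split `key: value` lines into a dict; the first ':' separates them."""
    config = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition(":")
        config[key.strip()] = value.strip()
    return config


def disk_options(value: str) -> dict[str, str]:
    """Parse 'volume,k=v,k=v'; the bare first item is stored as 'volume'."""
    opts = {}
    for i, item in enumerate(value.split(",")):
        if "=" in item:
            k, v = item.split("=", 1)
            opts[k] = v
        elif i == 0:
            opts["volume"] = item
    return opts


def iothread_disks(config: dict[str, str]) -> list[str]:
    """Return the disk keys that have iothread=1 set."""
    return sorted(
        key for key, value in config.items()
        if DISK_KEY.match(key) and disk_options(value).get("iothread") == "1"
    )


if __name__ == "__main__":
    posted = """\
scsi0: local_vm_storage:623/vm-623-disk-1.qcow2,discard=on,size=25G
scsi1: local_vm_storage:623/vm-623-disk-2.qcow2,iothread=1,size=25G
scsihw: virtio-scsi-single
"""
    print(iothread_disks(parse_vm_config(posted)))  # ['scsi1']
```

For the posted VM this singles out `scsi1` as the only disk with `iothread=1`, on a `virtio-scsi-single` controller.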