# cat /etc/network/interfaces

# network interface settings; autogenerated

# Please do NOT modify this file directly, unless you know what

# you're doing.

#

# If you want to manage parts of the network configuration manually,

# please utilize the 'source' or 'source-directory' directives to do

# so.

# PVE will preserve these directives, but will NOT read its network

# configuration from sourced files, so do not attempt to move any of

# the PVE managed interfaces into external files!

auto lo

iface lo inet loopback

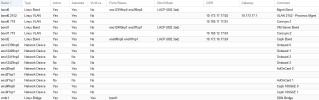

auto eno12399np0

iface eno12399np0 inet manual

#Onboard 0

auto ens5f0np0

iface ens5f0np0 inet manual

#AdOnCard 0

auto eno12409np1

iface eno12409np1 inet manual

#Onboard 1

iface eno12419np2 inet manual

#Onboard 2

iface eno12429np3 inet manual

#Onboard 3

auto ens6f0np0

iface ens6f0np0 inet manual

#Ceph 100GbE 0

auto ens6f1np1

iface ens6f1np1 inet manual

#Ceph 100GbE 1

auto ens5f1np1

iface ens5f1np1 inet manual

#AdOnCard 1

auto bond0

iface bond0 inet manual

bond-slaves eno12399np0 eno12409np1

bond-miimon 100

bond-mode 802.3ad

bond-xmit-hash-policy layer3+4

#Mgmt Bond

auto bond0.2152

iface bond0.2152 inet static

address 10.173.17.10/24

gateway 10.173.17.1

#VLAN 2152 - Proxmox Mgmt

auto bond1

iface bond1 inet manual

bond-slaves ens5f0np0 ens5f1np1

bond-miimon 100

bond-mode 802.3ad

bond-xmit-hash-policy layer3+4

#VM Server Bond

auto bond2

iface bond2 inet static

address 10.173.16.10/24

bond-slaves ens6f0np0 ens6f1np1

bond-miimon 100

bond-mode 802.3ad

bond-xmit-hash-policy layer3+4

mtu 9000

#Ceph Bond

auto bond0.718

iface bond0.718 inet static

address 10.169.11.10/24

#Corosync1

auto bond1.719

iface bond1.719 inet static

address 10.169.12.10/24

#Corosync2

auto vmbr1

iface vmbr1 inet manual

bridge-ports bond1

bridge-stp off

bridge-fd 0

bridge-vlan-aware yes

bridge-vids 2-4094

#SDN Bridge

source /etc/network/interfaces.d/*[CODE]
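A quick way to check that the three 802.3ad bonds defined above actually came up with both members is to read the bonding driver's status files under `/proc/net/bonding/`. The sketch below is a minimal POSIX `sh` helper, assuming the field names the Linux bonding driver prints (`Bonding Mode:`, `Slave Interface:`, `MII Status:`, `Aggregator ID:`); the function name `bond_summary` and its optional directory argument are hypothetical, and the argument only exists so the helper can be run against saved copies of those files.

```shell
#!/bin/sh
# Minimal sketch: summarise each bond as reported by the Linux bonding driver.
# The optional argument (default /proc/net/bonding) is a hypothetical hook so
# the helper can also be run against saved copies of those status files.
bond_summary() {
  bond_dir="${1:-/proc/net/bonding}"
  for f in "$bond_dir"/*; do
    # Skip the unexpanded glob when the directory is missing or empty.
    [ -f "$f" ] || continue
    echo "== $(basename "$f")"
    # Keep only unindented lines: the bond mode, the bond's and each member's
    # MII status, the member names and the aggregator each member joined.
    grep -E '^(Bonding Mode|Slave Interface|MII Status|Aggregator ID):' "$f"
  done
}

bond_summary "$@"
```

With LACP, both members of a healthy bond normally report the same `Aggregator ID`; differing IDs usually mean the switch side did not place both ports in the same LAG.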

[CODE]# lspci -nnk
00:00.0 System peripheral [0880]: Intel Corporation Ice Lake Memory Map/VT-d [8086:09a2] (rev 20)
	Subsystem: Dell Ice Lake Memory Map/VT-d [1028:0a6b]
00:00.1 System peripheral [0880]: Intel Corporation Ice Lake Mesh 2 PCIe [8086:09a4] (rev 20)
	Subsystem: Dell Ice Lake Mesh 2 PCIe [1028:0a6b]
00:00.2 System peripheral [0880]: Intel Corporation Ice Lake RAS [8086:09a3] (rev 20)
	Subsystem: Dell Ice Lake RAS [1028:0a6b]
00:00.4 Generic system peripheral [0807]: Intel Corporation Device [8086:0b23]
	Subsystem: Dell Device [1028:0a6b]
	Kernel driver in use: pcieport
00:0c.0 PCI bridge [0604]: Intel Corporation Device [8086:1bbc] (rev 11)
	DeviceName: PCH Root Port
	Subsystem: Dell Device [1028:0a6b]
	Kernel driver in use: pcieport
00:0e.0 PCI bridge [0604]: Intel Corporation Device [8086:1bbe] (rev 11)
	DeviceName: PCH Root Port
	Subsystem: Dell Device [1028:0a6b]
	Kernel driver in use: pcieport
00:14.0 USB controller [0c03]: Intel Corporation Emmitsburg (C740 Family) USB 3.2 Gen 1 xHCI Controller [8086:1bcd] (rev 11)
	Subsystem: Dell Emmitsburg (C740 Family) USB 3.2 Gen 1 xHCI Controller [1028:0a6b]
	Kernel driver in use: xhci_hcd
	Kernel modules: xhci_pci
00:14.2 RAM memory [0500]: Intel Corporation Device [8086:1bce] (rev 11)
	Subsystem: Dell Device [1028:0a6b]
00:14.4 Host bridge [0600]: Intel Corporation Device [8086:1bfe] (rev 11)
	Subsystem: Dell Device [1028:0a6b]
00:15.0 System peripheral [0880]: Intel Corporation Device [8086:1bff] (rev 11)
	Subsystem: Dell Device [1028:0a6b]
	Kernel driver in use: ismt_smbus
	Kernel modules: i2c_ismt
00:16.0 Communication controller [0780]: Intel Corporation Device [8086:1be0] (rev 11)
	Subsystem: Dell Device [1028:0a6b]
	Kernel modules: mei_me
00:18.0 SATA controller [0106]: Intel Corporation Sapphire Rapids SATA AHCI Controller [8086:1bf2] (rev 11)
	Subsystem: Dell Sapphire Rapids SATA AHCI Controller [1028:0a6b]
	Kernel driver in use: ahci
	Kernel modules: ahci
00:19.0 SATA controller [0106]: Intel Corporation Sapphire Rapids SATA AHCI Controller [8086:1bd2] (rev 11)
	Subsystem: Dell Sapphire Rapids SATA AHCI Controller [1028:0a6b]
	Kernel driver in use: ahci
	Kernel modules: ahci
00:1f.0 ISA bridge [0601]: Intel Corporation Device [8086:1b81] (rev 11)
	Subsystem: Dell Device [1028:0a6b]
00:1f.4 SMBus [0c05]: Intel Corporation Device [8086:1bc9] (rev 11)
	Subsystem: Dell Device [1028:0a6b]
	Kernel driver in use: i801_smbus
	Kernel modules: i2c_i801
00:1f.5 Serial bus controller [0c80]: Intel Corporation Device [8086:1bca] (rev 11)
	Subsystem: Dell Device [1028:0a6b]
	Kernel driver in use: intel-spi
	Kernel modules: spi_intel_pci
02:00.0 PCI bridge [0604]: PLDA PCI Express Bridge [1556:be00] (rev 02)
03:00.0 VGA compatible controller [0300]: Matrox Electronics Systems Ltd. Integrated Matrox G200eW3 Graphics Controller [102b:0536] (rev 04)
	DeviceName: Embedded Video
	Subsystem: Dell Integrated Matrox G200eW3 Graphics Controller [1028:0a6b]
	Kernel driver in use: mgag200
	Kernel modules: mgag200
0c:00.0 System peripheral [0880]: Intel Corporation Ice Lake Memory Map/VT-d [8086:09a2] (rev 20)
	Subsystem: Intel Corporation Ice Lake Memory Map/VT-d [8086:0000]
0c:00.1 System peripheral [0880]: Intel Corporation Ice Lake Mesh 2 PCIe [8086:09a4] (rev 20)
	Subsystem: Intel Corporation Ice Lake Mesh 2 PCIe [8086:0000]
0c:00.2 System peripheral [0880]: Intel Corporation Ice Lake RAS [8086:09a3] (rev 20)
	Subsystem: Intel Corporation Ice Lake RAS [8086:0000]
0c:00.4 Generic system peripheral [0807]: Intel Corporation Device [8086:0b23]
	Subsystem: Intel Corporation Device [8086:0000]
	Kernel driver in use: pcieport
21:00.0 System peripheral [0880]: Intel Corporation Ice Lake Memory Map/VT-d [8086:09a2] (rev 20)
	Subsystem: Intel Corporation Ice Lake Memory Map/VT-d [8086:0000]
21:00.1 System peripheral [0880]: Intel Corporation Ice Lake Mesh 2 PCIe [8086:09a4] (rev 20)
	Subsystem: Intel Corporation Ice Lake Mesh 2 PCIe [8086:0000]
21:00.2 System peripheral [0880]: Intel Corporation Ice Lake RAS [8086:09a3] (rev 20)
	Subsystem: Intel Corporation Ice Lake RAS [8086:0000]
21:00.4 Generic system peripheral [0807]: Intel Corporation Device [8086:0b23]
	Subsystem: Intel Corporation Device [8086:0000]
	Kernel driver in use: pcieport
21:01.0 PCI bridge [0604]: Intel Corporation Device [8086:352a] (rev 30)
	DeviceName: Root Port
	Subsystem: Intel Corporation Device [8086:0000]
	Kernel driver in use: pcieport

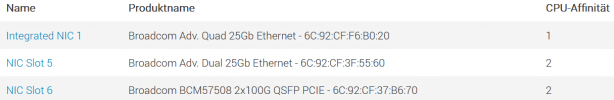

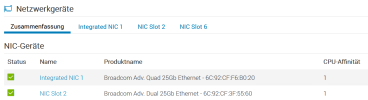

22:00.0 Ethernet controller [0200]: Broadcom Inc. and subsidiaries BCM57504 NetXtreme-E 10Gb/25Gb/40Gb/50Gb/100Gb/200Gb Ethernet [14e4:1751] (rev 12)
	DeviceName: Integrated NIC 1 Port 1-1
	Subsystem: Broadcom Inc. and subsidiaries NetXtreme-E BCM57504 4x25G OCP3.0 [14e4:5045]
	Kernel driver in use: bnxt_en
	Kernel modules: bnxt_en
22:00.1 Ethernet controller [0200]: Broadcom Inc. and subsidiaries BCM57504 NetXtreme-E 10Gb/25Gb/40Gb/50Gb/100Gb/200Gb Ethernet [14e4:1751] (rev 12)
	DeviceName: Integrated NIC 1 Port 2-1
	Subsystem: Broadcom Inc. and subsidiaries NetXtreme-E BCM57504 4x25G OCP3.0 [14e4:5045]
	Kernel driver in use: bnxt_en
	Kernel modules: bnxt_en
22:00.2 Ethernet controller [0200]: Broadcom Inc. and subsidiaries BCM57504 NetXtreme-E 10Gb/25Gb/40Gb/50Gb/100Gb/200Gb Ethernet [14e4:1751] (rev 12)
	DeviceName: Integrated NIC 1 Port 3-1
	Subsystem: Broadcom Inc. and subsidiaries NetXtreme-E BCM57504 4x25G OCP3.0 [14e4:5045]
	Kernel driver in use: bnxt_en
	Kernel modules: bnxt_en
22:00.3 Ethernet controller [0200]: Broadcom Inc. and subsidiaries BCM57504 NetXtreme-E 10Gb/25Gb/40Gb/50Gb/100Gb/200Gb Ethernet [14e4:1751] (rev 12)
	DeviceName: Integrated NIC 1 Port 4-1
	Subsystem: Broadcom Inc. and subsidiaries NetXtreme-E BCM57504 4x25G OCP3.0 [14e4:5045]
	Kernel driver in use: bnxt_en
	Kernel modules: bnxt_en
8a:01.0 PCI bridge [0604]: Intel Corporation Device [8086:352a] (rev 30)
	DeviceName: SLOT 6
	Subsystem: Intel Corporation Device [8086:0000]
	Kernel driver in use: pcieport
8b:00.0 Ethernet controller [0200]: Broadcom Inc. and subsidiaries BCM57508 NetXtreme-E 10Gb/25Gb/40Gb/50Gb/100Gb/200Gb Ethernet [14e4:1750] (rev 11)
	Subsystem: Broadcom Inc. and subsidiaries NetXtreme-E P2100D BCM57508 2x100G QSFP PCIE [14e4:d124]
	Kernel driver in use: bnxt_en
	Kernel modules: bnxt_en
8b:00.1 Ethernet controller [0200]: Broadcom Inc. and subsidiaries BCM57508 NetXtreme-E 10Gb/25Gb/40Gb/50Gb/100Gb/200Gb Ethernet [14e4:1750] (rev 11)
	Subsystem: Broadcom Inc. and subsidiaries NetXtreme-E P2100D BCM57508 2x100G QSFP PCIE [14e4:d124]
	Kernel driver in use: bnxt_en
	Kernel modules: bnxt_en
9f:00.0 System peripheral [0880]: Intel Corporation Ice Lake Memory Map/VT-d [8086:09a2] (rev 20)
	Subsystem: Intel Corporation Ice Lake Memory Map/VT-d [8086:0000]
9f:00.1 System peripheral [0880]: Intel Corporation Ice Lake Mesh 2 PCIe [8086:09a4] (rev 20)
	Subsystem: Intel Corporation Ice Lake Mesh 2 PCIe [8086:0000]
9f:00.2 System peripheral [0880]: Intel Corporation Ice Lake RAS [8086:09a3] (rev 20)
	Subsystem: Intel Corporation Ice Lake RAS [8086:0000]
9f:00.4 Generic system peripheral [0807]: Intel Corporation Device [8086:0b23]
	Subsystem: Intel Corporation Device [8086:0000]
	Kernel driver in use: pcieport
9f:01.0 PCI bridge [0604]: Intel Corporation Device [8086:352a] (rev 30)
	DeviceName: SLOT 5
	Subsystem: Intel Corporation Device [8086:0000]
	Kernel driver in use: pcieport
a0:00.0 Ethernet controller [0200]: Broadcom Inc. and subsidiaries BCM57414 NetXtreme-E 10Gb/25Gb RDMA Ethernet Controller [14e4:16d7] (rev 01)
	Subsystem: Broadcom Inc. and subsidiaries BCM57414 NetXtreme-E 10Gb/25Gb RDMA Ethernet Controller [14e4:4141]
	Kernel driver in use: bnxt_en
	Kernel modules: bnxt_en
a0:00.1 Ethernet controller [0200]: Broadcom Inc. and subsidiaries BCM57414 NetXtreme-E 10Gb/25Gb RDMA Ethernet Controller [14e4:16d7] (rev 01)
	Subsystem: Broadcom Inc. and subsidiaries BCM57414 NetXtreme-E 10Gb/25Gb RDMA Ethernet Controller [14e4:4141]
	Kernel driver in use: bnxt_en
	Kernel modules: bnxt_en
[/CODE]
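To line the interface names in `/etc/network/interfaces` up with the PCI devices in the `lspci -nnk` listing (for example, to confirm whether `ens6f0np0` really is one of the `8b:00.x` BCM57508 ports), each physical interface's `device` link in sysfs can be resolved to its PCI address. A minimal sketch, assuming the standard `/sys/class/net/<iface>/device` symlink; `nic_pci_map` and its optional directory argument are hypothetical names used only for illustration.

```shell
#!/bin/sh
# Minimal sketch: print "<interface> <PCI address>" for every interface that
# is backed by a physical device. The optional argument (default
# /sys/class/net) is a hypothetical hook so the helper can run on a fake tree.
nic_pci_map() {
  net_dir="${1:-/sys/class/net}"
  for dev in "$net_dir"/*; do
    # lo, bonds, VLANs and bridges are virtual and have no "device" link.
    [ -e "$dev/device" ] || continue
    # For a PCI NIC the link resolves to a directory named like 0000:22:00.0.
    printf '%s %s\n' "$(basename "$dev")" "$(basename "$(readlink -f "$dev/device")")"
  done
}

nic_pci_map "$@"
```

The addresses it prints can be compared directly with the `22:00.x`, `8b:00.x` and `a0:00.x` entries above; `ethtool -i <iface>` reports the same address in its `bus-info` field for a single interface.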