We have configured 9 KVM images on an i7 server (2.67 GHz, 12 GB RAM, 1.5 TB hardware RAID1 on a 3ware Inc 9650SE SATA-II RAID PCIe controller), and they run very well. Unfortunately, the virtual machines become nearly unreachable when we try to make snapshot backups. We back up to an NFS mount over a gigabit network link, so bandwidth should not be the problem. Here is the log for a small KVM image:

```
Nov 20 01:13:34 INFO: Total bytes written: 8826415616 (31.17 MiB/s)
Nov 20 01:13:40 INFO: archive file size: 8.22GB
```
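A quick sanity check of the log figures, as a sketch: it assumes the reported 31.17 MiB/s is an average over the whole backup and treats "gigabit" as a nominal 10^9 bit/s with no protocol overhead. The function names are just illustrative.

```python
# Sanity-check the throughput figures from the backup log above.
BYTES_WRITTEN = 8_826_415_616   # "Total bytes written" from the log
RATE_MIB_S = 31.17              # reported average rate in MiB/s

MIB = 1024 ** 2
GIB = 1024 ** 3


def backup_seconds(total_bytes: int, rate_mib_s: float) -> float:
    """Approximate wall-clock duration implied by the reported average rate."""
    return total_bytes / (rate_mib_s * MIB)


def gigabit_utilisation(rate_mib_s: float) -> float:
    """Fraction of a nominal 1 Gbit/s link (10**9 bit/s, ignoring protocol overhead)."""
    link_bytes_per_s = 1_000_000_000 / 8
    return rate_mib_s * MIB / link_bytes_per_s


if __name__ == "__main__":
    print(f"archive size : {BYTES_WRITTEN / GIB:.2f} GiB")
    print(f"duration     : {backup_seconds(BYTES_WRITTEN, RATE_MIB_S) / 60:.1f} min")
    print(f"link usage   : {gigabit_utilisation(RATE_MIB_S):.0%} of gigabit")
```

By this arithmetic the backup takes roughly 4.5 minutes and uses only about a quarter of a gigabit link, which fits the assumption that the network is not the bottleneck. Note also that the "8.22GB" in the log is 8.22 GiB (binary units).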

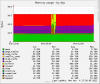

When the backup starts, the load inside the KVM guest rises to 21 to 25. The local system status on the Proxmox web page shows an IO delay of 30 to 50%, so I suspect the local storage is too slow. We also tried backing up to the local disk instead of the NFS share, with the same results. The guest becomes unresponsive during the backup and returns to normal afterwards. Compression is disabled, and the guests use raw images as their hard disks.
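To cross-check the IO delay figure from the host shell, one option is to sample the kernel's `iowait` counter from `/proc/stat` twice and compute its share of total CPU time in between. This is a sketch under an assumption we have not verified in the Proxmox source: that the web UI's "IO delay" is derived from this same counter. The helper names are hypothetical.

```python
import os
import time
from typing import List


def parse_cpu_line(stat_text: str) -> List[int]:
    """Return the jiffy counters from the aggregate 'cpu' line of /proc/stat."""
    for line in stat_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "cpu":
            return [int(v) for v in fields[1:]]
    raise ValueError("no aggregate 'cpu' line found")


def iowait_percent(before: str, after: str) -> float:
    """Share of CPU time spent in iowait between two /proc/stat snapshots."""
    a = parse_cpu_line(before)
    b = parse_cpu_line(after)
    # Use user..steal (first 8 fields); guest/guest_nice are already counted in user/nice.
    deltas = [y - x for x, y in zip(a[:8], b[:8])]
    total = sum(deltas)
    if total == 0:
        return 0.0
    return 100.0 * deltas[4] / total  # index 4 is the iowait column


if __name__ == "__main__" and os.path.exists("/proc/stat"):
    with open("/proc/stat") as f:
        first = f.read()
    time.sleep(1.0)
    with open("/proc/stat") as f:
        second = f.read()
    print(f"iowait over the last second: {iowait_percent(first, second):.1f}%")
```

Running this on the host while a backup is in progress should show whether the high IO delay tracks the backup window.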

Any ideas on how we can improve this?