Hello dear community.

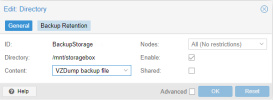

Could you help me with this case? I have a cloud storage unit under contract, and I mounted it in a Proxmox cluster of 5 nodes. Only 3 of the nodes detect the storage correctly; the other 2 do not. Could you point me toward a solution? I have searched but cannot find how to fix it so that those nodes detect the correct size.

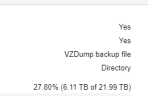

Nodes 1 and 2:

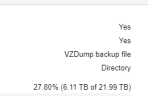

Node 3:

As you can see, even though it is the same directory, it does not report the correct size.
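One way to narrow this down is to run the same check on every node and compare the results. The sketch below is a minimal example, not a Proxmox tool. The path `/mnt/pve/cloud-storage` is a hypothetical placeholder for wherever the storage is actually mounted. It reports the sizes the OS gives for that directory and whether the directory is really a mount point. If a node says "not a mount point", the size shown there most likely belongs to the local disk that holds the directory rather than to the cloud storage. That is one possible explanation for a size mismatch, not a confirmed diagnosis.

```python
import os
import shutil
import socket

# Hypothetical mount point: replace with the directory the cloud storage is mounted on.
MOUNT_PATH = "/mnt/pve/cloud-storage"


def describe_mount(path: str) -> dict:
    """Return the hostname, whether `path` is a mount point, and the sizes the OS reports for it."""
    usage = shutil.disk_usage(path)  # sizes of the filesystem that contains `path`
    return {
        "host": socket.gethostname(),
        "is_mount": os.path.ismount(path),
        "total_gib": usage.total / 2**30,
        "used_gib": usage.used / 2**30,
        "free_gib": usage.free / 2**30,
    }


def main() -> None:
    if not os.path.isdir(MOUNT_PATH):
        print(f"{socket.gethostname()}: {MOUNT_PATH} does not exist")
        return
    info = describe_mount(MOUNT_PATH)
    print(
        f"{info['host']}: total={info['total_gib']:.1f} GiB "
        f"used={info['used_gib']:.1f} GiB free={info['free_gib']:.1f} GiB"
    )
    if not info["is_mount"]:
        # Nothing is mounted here, so the numbers above describe the parent filesystem.
        print("warning: not a mount point; the size shown is that of the filesystem holding this directory")


if __name__ == "__main__":
    main()
```

Running it on all 5 nodes and comparing the `total` values (and the mount-point warning) shows which nodes see the real storage and which ones are only looking at an empty local directory.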

I am running Proxmox VE 7.

Thanks.