Hi,

I've read a lot about replacing a hard drive in a ZFS RAID, but most of it is about replacing a failed hard drive.

(like: https://pve.proxmox.com/wiki/ZFS:_Tips_and_Tricks#Replacing_a_failed_disk_in_the_root_pool)

The procedure there boils down to the following commands:

Code:

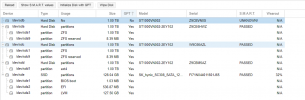

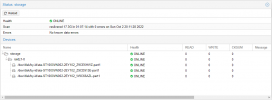

zpool status

zpool offline rpool /dev/source # (=failed disk)

## shutdown install the new disk or replace the disks

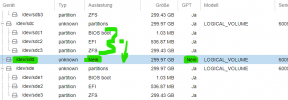

sgdisk --replicate=/dev/target /dev/source

sgdisk --randomize-guids /dev/target

zpool replace rpool /dev/source /dev/target

zpool status # => resilvering is workingBut my ZFS Raid (raidz) has neither an error nor a failed hard drive.

My question: does replacing a healthy (but very old) hard drive with a new one also require all of these commands?

Or is a single command enough in this case, for example:

```shell
zpool replace rpool /dev/disk/by-id/old-disk /dev/disk/by-id/ata-WDC_WD60EZRZ-00GZ5B1_WD-WX44D55N
```

where `ata-WDC_WD60EZRZ-00GZ5B1_WD-WX44D55N` is the brand-new hard drive.

Can you tell me which of the above commands are also required here?

For example, do I need to create the same partition table in this scenario?
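A hedged sketch of one possible sequence, not an authoritative answer: according to the `zpool` man page, `zpool replace` on a device that is still online is equivalent to attaching the new device, resilvering, and then detaching the old one, so redundancy is kept during the resilver and no `zpool offline` step is needed. The partition question mainly matters because this is a root pool: the Proxmox wiki's procedure copies the partition layout from a *healthy* member disk and then prepares the new disk's ESP so it stays bootable. The function below only prints such a sequence. Assumptions (not from the post): the Proxmox default layout (partition 2 = ESP, partition 3 = ZFS; older installs may differ), member names exactly as shown by `zpool status`, a free port so old and new disk can be connected at the same time, and booting via `proxmox-boot-tool`. The name `print_replace_plan` and all device paths are hypothetical.

```shell
#!/usr/bin/env bash
# Dry-run sketch: prints (does NOT execute) one possible sequence for replacing
# a still-healthy member of a Proxmox root pool. Function name and all device
# paths are hypothetical; check every line against your own `zpool status`.
set -euo pipefail

print_replace_plan() {
  local pool="$1"     # pool name, e.g. rpool
  local healthy="$2"  # a DIFFERENT healthy member disk to copy the layout from
  local old="$3"      # old disk being retired (stays online meanwhile)
  local new="$4"      # brand-new disk, connected alongside the old one

  # Assumption: Proxmox default layout (part2 = ESP, part3 = ZFS partition).
  # 1. Copy the partition table of the healthy disk onto the new disk.
  echo "sgdisk --replicate=${new} ${healthy}"
  # 2. Give the copied partitions fresh, unique GUIDs.
  echo "sgdisk --randomize-guids ${new}"
  # 3. Replace online: no 'zpool offline'; the old disk stays in the pool
  #    until the resilver onto the new partition has finished.
  echo "zpool replace ${pool} ${old}-part3 ${new}-part3"
  echo "zpool status ${pool}"
  # 4. Make the new disk bootable (assumes proxmox-boot-tool is in use;
  #    on plain GRUB setups the wiki uses grub-install <new disk> instead).
  echo "proxmox-boot-tool format ${new}-part2"
  echo "proxmox-boot-tool init ${new}-part2"
}
```

With the disk from the question, a call such as `print_replace_plan rpool /dev/disk/by-id/<another-member> /dev/disk/by-id/old-disk /dev/disk/by-id/ata-WDC_WD60EZRZ-00GZ5B1_WD-WX44D55N` prints the six commands for review. Note that ZFS requires the new device to be at least as large as the one it replaces.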

Regards,

maxprox
