Hi

I have a similar situation. I installed Proxmox with ZFS through the installer. Now I have to replace a disk (/dev/sdd). How can I add the new disk with all its partitions safely? Do I have to partition it manually before running zpool replace?

The old disk is no longer in the system. Do I still need zpool replace?

Thanks.


Theoretical part

As a general rule, sfdisk is used to manage MBR partition tables and sgdisk is used to manage GPT partition tables. Admittedly, though, UEFI should fall back to /efi/boot/bootx64.efi (more accurately /efi/boot/boot{machine type short-name}.efi) when the partition IDs change.
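The `{machine type short-name}` part follows the naming the UEFI specification uses for the removable-media fallback loader. A minimal sketch, assuming a POSIX shell; `fallback_loader` is a hypothetical helper name and only the most common machine types are mapped:

```shell
#!/bin/sh
# Hypothetical helper: print the UEFI fallback loader path for a machine type
# as reported by `uname -m` (defaults to the running machine).
fallback_loader() {
    machine=${1:-$(uname -m)}
    case $machine in
        x86_64)  short=x64 ;;
        i?86)    short=ia32 ;;
        aarch64) short=aa64 ;;
        riscv64) short=riscv64 ;;
        *) echo "unmapped machine type: $machine" >&2; return 1 ;;
    esac
    echo "/efi/boot/boot${short}.efi"
}

# Usage: fallback_loader          -> path for this machine
#        fallback_loader aarch64  -> /efi/boot/bootaa64.efi
```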

Keep in mind that if the failed disk is part of a pool you boot from, you also need to update GRUB or refresh the UEFI boot entries with proxmox-boot-tool, and copy the partition layout the old disk had (taken from a healthy member of the same pool) to the new one.
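Which tool copies the layout depends on the table type, as described above. A sketch, assuming util-linux `lsblk` (its `PTTYPE` column reports `gpt` or `dos`); `copy_layout` is a hypothetical helper that only prints the commands, so you can review them before running anything:

```shell
#!/bin/sh
# Hypothetical helper: print the commands that copy the partition table of
# a healthy disk ($1) onto a new disk ($2). Nothing is executed.
# $3 optionally overrides the detected table type ("gpt" or "dos").
copy_layout() {
    src=$1
    dst=$2
    pttype=${3:-$(lsblk -dno PTTYPE "$src")}
    case $pttype in
        gpt)
            # replicate the GPT onto the new disk, then give it fresh GUIDs
            printf '%s\n' "sgdisk $src -R $dst" "sgdisk -G $dst" ;;
        dos)
            # dump the MBR table in sfdisk's script format and replay it
            printf '%s\n' "sfdisk -d $src | sfdisk $dst" ;;
        *)
            echo "unknown partition table type: '$pttype'" >&2
            return 1 ;;
    esac
}

# Usage: copy_layout /dev/disk/by-id/<healthy disk> /dev/disk/by-id/<new disk>
```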

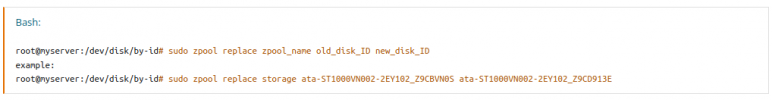

Changing a failed device

# zpool replace -f <pool> <old device> <new device>
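When the old disk is physically gone (as in the question above), `zpool status` shows a numeric GUID in place of its name, and that GUID can be passed as `<old device>`. A sketch, assuming the usual `NAME STATE READ WRITE CKSUM` layout of `zpool status`; `missing_vdevs` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: read `zpool status` output on stdin and print the
# name (or GUID) of every device whose STATE column is UNAVAIL, FAULTED,
# REMOVED or OFFLINE. Lines with fewer than five fields are skipped so the
# "state:" header line is never matched.
missing_vdevs() {
    awk 'NF >= 5 && $2 ~ /^(UNAVAIL|FAULTED|REMOVED|OFFLINE)$/ { print $1 }'
}

# Usage: zpool status rpool | missing_vdevs
```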

Changing a failed bootable device

Depending on how Proxmox VE was installed, it uses either proxmox-boot-tool [1] or plain GRUB as the bootloader.

You can check by running:

# proxmox-boot-tool status
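`proxmox-boot-tool status` is the authoritative check. As an additional quick test of whether the running system was booted in UEFI or legacy BIOS mode: the kernel only exposes `/sys/firmware/efi` after a UEFI boot. A sketch; `boot_mode` is a hypothetical helper, and its optional root-prefix argument exists only so it can be tried against a scratch directory:

```shell
#!/bin/sh
# Hypothetical helper: print "uefi" if /sys/firmware/efi exists (the kernel
# creates it only when booted via UEFI), otherwise "legacy".
# $1 is an optional root prefix; leave it empty on a real system.
boot_mode() {
    if [ -d "${1:-}/sys/firmware/efi" ]; then
        echo uefi
    else
        echo legacy
    fi
}

# Usage: boot_mode
```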

The first steps of copying the partition table, reissuing GUIDs and replacing the ZFS partition are the same.

To make the system bootable from the new disk, different steps are needed which depend on the bootloader in use.

# sgdisk <healthy bootable device> -R <new device in the /dev/disk/by-id/ format>
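Because `sgdisk <source> -R <target>` overwrites the partition table of the target, swapping the two arguments would copy the wrong table onto the healthy disk. A small sketch for checking which kernel device each by-id name points to before running it; `resolve_disks` is a hypothetical helper and relies on `readlink -f` (GNU coreutils, as on Proxmox VE):

```shell
#!/bin/sh
# Hypothetical helper: print "<by-id link> -> <kernel device>" for each
# argument so source and target can be double-checked before sgdisk -R.
resolve_disks() {
    for link in "$@"; do
        printf '%s -> %s\n' "$link" "$(readlink -f -- "$link")"
    done
}

# Usage: resolve_disks /dev/disk/by-id/<healthy disk> /dev/disk/by-id/<new disk>
```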

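Logically, zpool replace creates the appropriate partitions automatically when you give it a whole disk instead of a partition, so copying the partition table is not always necessary (it may be needed though: ZFS will not create the ESP or the BIOS boot partition that a bootable disk needs).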

But if you need the same partition table as the healthy drive, do one of the following:

Create an empty GPT partition table on the new HDD with parted:

parted /dev/new-disk

(parted) print

(parted) mklabel gpt

Yes   (confirm when parted warns that the existing label will be destroyed)

(parted) q
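The same empty GPT label can be written without the interactive prompts by using parted's script mode (`-s`). A sketch; `new_gpt_label` and the `DRY_RUN` variable are hypothetical, and with the default `DRY_RUN=1` the command is only printed, because running it destroys any existing label on that disk:

```shell
#!/bin/sh
# Hypothetical helper: write an empty GPT label to the disk in $1.
# DRY_RUN=1 (the default) only prints the command; DRY_RUN=0 runs it.
new_gpt_label() {
    set -- parted -s "$1" mklabel gpt
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: new_gpt_label /dev/new-disk            (print only)
#        DRY_RUN=0 new_gpt_label /dev/new-disk  (really relabel the disk)
```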

OR

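Copy the partition table from a healthy mirror member to the new one:

newDisk='/dev/sda'       # example names, check yours with lsblk

healthyDisk='/dev/sdb'

sgdisk -R "$newDisk" "$healthyDisk"   # replicate the healthy disk's table onto the new disk

sgdisk -G "$newDisk"                  # give the new disk fresh random GUIDs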

# sgdisk -G <new device in the /dev/disk/by-id/ format>

# zpool replace -f <pool> <old disk partition, probably part3> <new disk partition, probably part3>

Note: Use the zpool status -v command to monitor how far the resilvering of the new disk has progressed.
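To wait in a script until the resilver has finished, the `scan:` line of `zpool status` can be polled. A sketch, assuming the OpenZFS wording `resilver in progress`; `resilvering` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: succeed while the `zpool status` text on stdin
# reports a running resilver ("scan: resilver in progress since ...").
resilvering() {
    grep -q 'resilver in progress'
}

# Usage: poll once a minute until the resilver is done
# while zpool status rpool | resilvering; do sleep 60; done
```

On OpenZFS 2.0 and later, `zpool wait -t resilver rpool` should do the same without a loop.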

Afterwards with proxmox-boot-tool:

# proxmox-boot-tool format <new disk's ESP>

# proxmox-boot-tool init <new disk's ESP>

Note: ESP stands for EFI System Partition, which is set up as partition #2 on bootable disks set up by the Proxmox VE installer since version 5.4.

For details, see Setting up a new partition for use as synced ESP.
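Since the ESP is partition #2 on installer-created disks, both proxmox-boot-tool calls can be derived from the by-id name of the new disk. A sketch under that assumption (check the layout with `lsblk` first); it expects a /dev/disk/by-id/ path because those links use the `-partN` suffix. `esp_setup` and `DRY_RUN` are hypothetical, and by default the commands are only printed:

```shell
#!/bin/sh
# Hypothetical helper: format and initialize the ESP of the new disk,
# assuming the ESP is partition 2 (as on disks set up by the PVE installer
# since 5.4). $1 is the whole-disk by-id path, e.g. /dev/disk/by-id/ata-...
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 runs them.
esp_setup() {
    esp="$1-part2"
    for step in format init; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            echo "proxmox-boot-tool $step $esp"
        else
            proxmox-boot-tool "$step" "$esp" || return 1
        fi
    done
}

# Usage: esp_setup /dev/disk/by-id/<new disk>
```

If `format` complains that the partition belongs to a ZFS filesystem, the practical part of this post adds `--force` to that call.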

With grub:

# grub-install <new disk>

/////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

Practical part

Remove the failed disk and insert a new one (my scenario: ZFS mirror configuration, booting in UEFI mode)

CT250MX500SSD1_2028E2B4....

General rule of replacement

# sgdisk <healthy bootable device> -R <new device>

# sgdisk -G <new device>

# zpool replace -f <pool> <old zfs partition> <new zfs partition>

# zpool status -v   (monitor how far the resilvering of the new disk has progressed)

# proxmox-boot-tool format <new disk's ESP>   (ESP stands for EFI System Partition, which is set up as partition #2 on bootable disks set up by the Proxmox VE installer since version 5.4)

# proxmox-boot-tool init <new disk's ESP>

sgdisk /dev/disk/by-id/ata-CT250MX500SSD1_2028C456.... -R /dev/disk/by-id/ata-CT250MX500SSD1_2028E2B4....   (healthy disk first, then -R and the new disk)

lsblk   (check afterwards that both disks have the same number of partitions: sda with sda1, sda2, sda3 and sdb with sdb1, sdb2, sdb3)

sgdisk -G /dev/disk/by-id/ata-CT250MX500SSD1_2028E2B4....   (-G = randomize the disk and partition GUIDs)

zpool replace -f rpool 872817340134134.... /dev/disk/by-id/ata-CT250MX500SSD1_2028E2B4....-part3

pve-efiboot-tool format /dev/disk/by-id/ata-CT250MX500SSD1_2028E2B4.....-part2 --force   (otherwise you get warnings about the partition being a member of a ZFS filesystem; pve-efiboot-tool is the older name of proxmox-boot-tool)

pve-efiboot-tool init /dev/disk/by-id/ata-CT250MX500SSD1_2028E2B4....-part2

ls -l /dev/disk/by-id/*   (if you want to see the IDs of the disks)
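The `lsblk` partition check above can also be done with a comparison instead of by eye. A sketch; `count_parts` is a hypothetical helper that counts the `part` rows in `lsblk -nr -o NAME,TYPE` output:

```shell
#!/bin/sh
# Hypothetical helper: count the partitions in `lsblk -nr -o NAME,TYPE <disk>`
# output read from stdin (rows whose TYPE column is "part").
count_parts() {
    awk '$2 == "part" { n++ } END { print n + 0 }'
}

# Usage: compare the healthy and the new disk
# a=$(lsblk -nr -o NAME,TYPE /dev/sda | count_parts)
# b=$(lsblk -nr -o NAME,TYPE /dev/sdb | count_parts)
# [ "$a" = "$b" ] && echo "same number of partitions" || echo "partition count differs"
```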

Check and post your results