On a system configured with hardware RAID, we are having a hard time creating LVM2 volume groups on the RAID-controlled drives.

Whenever the hardware goes through a physical power cycle, any LVM2 groups created on these drives are lost and cannot be recovered. Commands such as `pvscan`, `lvscan`, or `vgscan` only return the PVE default storage pool and do not detect anything we have done to the other drives. When we attempt to restore the storage pool configuration, the system errors out, stating that the device with the specified UUID cannot be found.

I do not know whether the problem is how I am creating the LVM2 groups or something to do with the RAID controller, so I figured I would come here for support. Our IT department believes the system is operating as expected and suggests this is an OS or user error.

Steps used to create the LVM2 Groups:

1. Determining the partition intent:

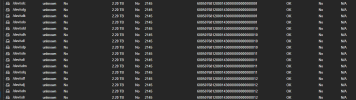

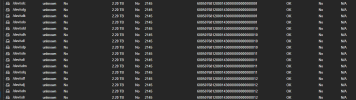

Of the 16 physical drives supplied, we suspect that 4 RAID groups have been created, based on the serial numbers reported by the system. So we are treating the system as if only 4 physical devices are actually in play, not the full 16.
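The serial-number grouping can be checked from the shell. This is a minimal sketch that assumes the controller exposes a serial per block device through `lsblk -d -n -o NAME,SERIAL` (MegaRAID virtual drives may report an empty serial, in which case that line is skipped). `group_by_serial` and `first_per_serial` are hypothetical helper names; the second one keeps only the first device name seen for each serial.

```bash
# Hypothetical helpers: group block devices by the serial number they report.
# Input lines look like "NAME SERIAL", e.g. from: lsblk -d -n -o NAME,SERIAL
# Devices with no serial (only one field on the line) are skipped.

# Print "SERIAL: dev1 dev2 ..." in the order each serial is first seen.
group_by_serial() {
  awk '
    NF < 2 { next }
    {
      sep = ($2 in devs) ? " " : ""
      if (sep == "") order[++n] = $2   # first time this serial appears
      devs[$2] = devs[$2] sep $1
    }
    END { for (i = 1; i <= n; i++) print order[i] ": " devs[order[i]] }
  '
}

# Print /dev/NAME for the first device seen with each serial.
first_per_serial() {
  awk 'NF >= 2 && !seen[$2]++ { print "/dev/" $1 }'
}
```

Usage: `lsblk -d -n -o NAME,SERIAL | group_by_serial`.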

We know that the end result should be 2 storage pools:

- Archival: 75% of the available storage

- Non-Archival: 25% of the available storage

This means that one of the RAID groups would become our Non-Archival storage pool, and the 3 remaining RAID groups would become our Archival storage pool.
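That 25%/75% split only lines up with one-of-four and three-of-four RAID groups if all four groups are the same size. A minimal sketch to check this, assuming sizes in bytes as printed by `lsblk -b -d -n -o NAME,SIZE` and treating the first device listed as the Non-Archival one; `split_report` is a hypothetical name.

```bash
# Hypothetical helper: show how capacity splits if the first device listed
# becomes NonArchival and all remaining devices become Archival.
# Input lines look like "NAME SIZE_IN_BYTES", e.g. from: lsblk -b -d -n -o NAME,SIZE
split_report() {
  awk '
    NF == 2 { name[++n] = $1; size[n] = $2; total += $2 }
    END {
      if (n == 0 || total == 0) exit 1   # nothing usable on input
      printf "NonArchival (%s): %.1f%%\n", name[1], 100 * size[1] / total
      printf "Archival (remaining %d): %.1f%%\n", n - 1, 100 * (total - size[1]) / total
    }
  '
}
```

With four equally sized groups this reports 25.0% and 75.0%; unequal sizes show immediately that the one-group-versus-three mapping no longer gives the intended ratio.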

I want to achieve this by creating one volume group, referred to as LVG, and two logical volumes, referred to as Archival and NonArchival.

2. Creating physical volumes:

Using the command `pvcreate /dev/sd{c..r}`, I created physical volumes on each of the drives. This command completes without any error or warning.

3. Creating the LVG:

I attempted to run the command `vgcreate LVG /dev/sd{c..r}`, but got the following error:

```bash
root@fe:~# vgcreate LVG /dev/sd{c..r}
Failed to read lvm info for /dev/sdg PVID vgRDKw5AdWtBH7dAoT8cJe8Y4BZnzcS1.
Failed to read lvm info for /dev/sdh PVID wCV18TWd4jN8wR2MbMv0Pofsyumk64q4.
Failed to read lvm info for /dev/sdi PVID 0TD2Kd60AcNLFRX4gfTSYkNJVpytStdW.
Failed to read lvm info for /dev/sdj PVID gJv8XtVeQ9lwXXaOwNJqvlJJ0magNwv0.
Failed to read lvm info for /dev/sdk PVID vgRDKw5AdWtBH7dAoT8cJe8Y4BZnzcS1.
Failed to read lvm info for /dev/sdl PVID wCV18TWd4jN8wR2MbMv0Pofsyumk64q4.
Failed to read lvm info for /dev/sdm PVID 0TD2Kd60AcNLFRX4gfTSYkNJVpytStdW.
Failed to read lvm info for /dev/sdn PVID gJv8XtVeQ9lwXXaOwNJqvlJJ0magNwv0.
Failed to read lvm info for /dev/sdo PVID vgRDKw5AdWtBH7dAoT8cJe8Y4BZnzcS1.
Failed to read lvm info for /dev/sdp PVID wCV18TWd4jN8wR2MbMv0Pofsyumk64q4.
Failed to read lvm info for /dev/sdq PVID 0TD2Kd60AcNLFRX4gfTSYkNJVpytStdW.
Failed to read lvm info for /dev/sdr PVID gJv8XtVeQ9lwXXaOwNJqvlJJ0magNwv0.
```

This is what leads me to believe that we only have access to 4 drives instead of 16. Notice that no error is reported for sdc, sdd, sde, or sdf. These are the first device of each reported serial number, so this makes sense to me. So what if we use only those 4?
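The pattern in this output can be made explicit by grouping device nodes by the PVID each error line reports. This is a text-processing sketch over log lines like the ones above, not an LVM tool; `pvid_groups` is a hypothetical name. Applied to this output, each of the four PVIDs appears on three device nodes (for example sdg, sdk and sdo share one), which is consistent with each RAID volume being visible under more than one `/dev/sdX` name.

```bash
# Hypothetical helper: group device nodes by the PVID named in LVM messages like
#   Failed to read lvm info for /dev/sdg PVID vgRDKw5AdWtBH7dAoT8cJe8Y4BZnzcS1.
# Prints "PVID: dev1 dev2 ..." in the order each PVID is first seen.
pvid_groups() {
  awk '
    /Failed to read lvm info for/ {
      dev = ""; pvid = ""
      for (i = 2; i < NF; i++)
        if ($i == "PVID") { dev = $(i - 1); pvid = $(i + 1) }
      if (pvid == "") next
      sub(/\.$/, "", pvid)               # drop the trailing period
      sep = (pvid in devs) ? " " : ""
      if (sep == "") order[++n] = pvid
      devs[pvid] = devs[pvid] sep dev
    }
    END { for (i = 1; i <= n; i++) print order[i] ": " devs[order[i]] }
  '
}
```

If the command output was saved to a file (here a hypothetical `vgcreate.log`, captured with `2>&1` since LVM writes these messages to stderr), run `pvid_groups < vgcreate.log`.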

```bash
root@fe:~# vgcreate LVG /dev/sd{c..f}
Volume group "LVG" successfully created
root@fe:~# vgdisplay LVG
Volume group "LVG" not found
Cannot process volume group LVG
```

As you can see, the group is created successfully, but `vgdisplay` then cannot find it or display any information about it. I cannot move forward because of this.

However, when I first attempted this, I did manage to create the group and even the logical volumes using:

- `lvcreate -n NonArchival -l 25%VG LVG`

- `lvcreate -n Archival -l 75%VG LVG`

These logical volumes did not survive the power cycle, and we lost all data saved to them.
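For comparison, the sketch below prints (but does not run) a command sequence for one volume group holding two logical volumes. It assumes the device arguments are already known to be distinct volumes, one per RAID group; `plan_lvm` is a hypothetical helper. In `lvcreate`, percentage sizes such as `25%VG` belong to `-l`/`--extents`, while `-L`/`--size` takes an absolute size such as `500G`; using `100%FREE` for the second volume lets it absorb any rounding of the first percentage. This does not by itself explain why the group disappears after a power cycle.

```bash
# Hypothetical helper: print (do not execute) an LVM command sequence for one
# volume group holding two logical volumes. Review the output before running it.
# Usage: plan_lvm VG DEVICE...
plan_lvm() {
  if [ "$#" -lt 2 ]; then
    echo "usage: plan_lvm VG DEVICE..." >&2
    return 1
  fi
  local vg="$1"
  shift
  echo "pvcreate $*"
  echo "vgcreate $vg $*"
  # -l/--extents accepts percentages such as 25%VG; -L/--size expects an
  # absolute size such as 500G.
  echo "lvcreate -n NonArchival -l 25%VG $vg"
  # 100%FREE gives Archival whatever remains after NonArchival.
  echo "lvcreate -n Archival -l 100%FREE $vg"
}
```

Printing the plan first makes it easy to confirm that each RAID volume appears exactly once before anything is written to disk.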

For reference, this is the RAID controller in the system:

```bash
root@fe:~# lspci -knn | grep 'RAID bus controller'
18:00.0 RAID bus controller [0104]: Broadcom / LSI MegaRAID SAS-3 3108 [Invader] [1000:005d] (rev 02)
```

Another odd thing I have noticed is that device names have changed which serial-number group they belong to. When I first created my LVM2 group on this system, `/dev/sdc` belonged to the serial number ending in `10`, not `f`.
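Kernel names such as `/dev/sdc` are assigned in probe order at boot and are not guaranteed to stay the same across reboots, which fits what is described here. udev also creates links under `/dev/disk/by-id` that are named from device identifiers and stay stable. The sketch below lists each link and the kernel name it currently points to, so the mapping can be recorded before and after a power cycle. `list_by_id` is a hypothetical helper; the optional directory argument exists only so it can be tried against a scratch directory.

```bash
# Hypothetical helper: list persistent device links and the kernel device
# name each one currently resolves to. Defaults to /dev/disk/by-id.
list_by_id() {
  local dir="${1:-/dev/disk/by-id}" link
  for link in "$dir"/*; do
    [ -L "$link" ] || continue   # skip anything that is not a symlink
    # readlink prints the link target (e.g. ../../sdc); basename keeps "sdc".
    printf '%s -> %s\n' "${link##*/}" "$(basename "$(readlink "$link")")"
  done
}
```

Usage: `list_by_id > before.txt`, power cycle, then `list_by_id > after.txt` and compare the two files with `diff`.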

This is a lot of information, but it is everything I can think of that might be relevant to this problem. I am looking for help creating these LVM volumes so that they survive a power cycle.