Hi everyone,

I have an unusual problem with the block size of a ZFS virtual machine disk (zvol).

Proxmox version 7.3

I have a Proxmox cluster consisting of two servers plus a QDevice.

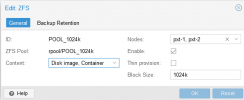

In the Proxmox web GUI, I set up a ZFS datastore called SSD_ZPOOL_1, set its block size to 1024k, and made it available on both nodes in the cluster. Proxmox added the datastore successfully.

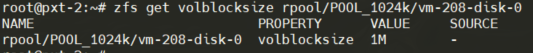

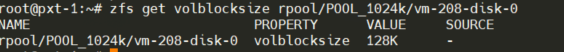

I created a new virtual machine on the SSD_ZPOOL_1 storage and checked the block size of its disk with `zfs get volblocksize`. The value was correct: 1M (1024k). I then set up replication of this VM from node A to node B. After the replication to the second node finished, I checked the block size of the replicated disk there, and it showed 128k. Now, if I migrate the VM from node A to node B, the reverse migration from node B back to node A fails with an error, so the VM will not migrate back.
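To compare both nodes side by side, the same `zfs get volblocksize` check can be run on each of them. Below is a minimal Python sketch of that comparison (a diagnostic aid, not a fix). It assumes the disk is named `SSD_ZPOOL_1/vm-100-disk-0` and that node B is reachable as `root@nodeB` over SSH; both names are hypothetical placeholders to adjust. It relies only on the standard `zfs get` flags `-H`, `-p` and `-o value`.

```python
"""Compare a zvol's volblocksize on this node and on the replication target.

Sketch only: DATASET and REMOTE are hypothetical placeholders, and the
code assumes root SSH access from this node to the other node.
"""
from __future__ import annotations

import subprocess

DATASET = "SSD_ZPOOL_1/vm-100-disk-0"  # hypothetical VM disk (zvol) name
REMOTE = "root@nodeB"  # hypothetical SSH target for the second node


def zfs_get_cmd(dataset: str) -> list[str]:
    # -H: scripted output without a header; -p: exact value in bytes;
    # -o value: print only the property value column.
    return ["zfs", "get", "-H", "-p", "-o", "value", "volblocksize", dataset]


def parse_volblocksize(output: str) -> int:
    """Convert the output of the command above into a byte count."""
    return int(output.strip())


def human(nbytes: int) -> str:
    """Render a byte count as M or K for display (e.g. 131072 -> 128K)."""
    mib = 1024 * 1024
    if nbytes % mib == 0:
        return f"{nbytes // mib}M"
    if nbytes % 1024 == 0:
        return f"{nbytes // 1024}K"
    return str(nbytes)


def read_volblocksize(dataset: str, remote: str | None = None) -> int:
    """Run `zfs get` locally, or on `remote` via SSH, and return bytes."""
    cmd = zfs_get_cmd(dataset)
    if remote is not None:
        # Run the identical command on the other node.
        cmd = ["ssh", remote, *cmd]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_volblocksize(result.stdout)


def report(local: int, remote: int) -> str:
    """Describe whether the two nodes agree on volblocksize."""
    status = "match" if local == remote else "MISMATCH"
    return f"local={human(local)} remote={human(remote)} ({status})"
```

Run on node A, for example: `print(report(read_volblocksize(DATASET), read_volblocksize(DATASET, REMOTE)))`. With the values described above (1M on node A, 128k on node B), this would print `local=1M remote=128K (MISMATCH)`.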

I suspect the problem is that, after replication, the disk on SSD_ZPOOL_1 has a block size of 1024k on one node but 128k on the other. The question is: why does the block size change when replicating to the same storage on the second node?

Does anyone know what's going on?

I should also mention that the Large Block option is enabled on the SSD_ZPOOL_1 pool.

Best Regards

Orion8888